Recent advances in large language models (LLMs) have reshaped how software is developed, but, as researchers from the University of Texas at San Antonio (UTSA) show, these models also carry significant risks. The UTSA study, led by doctoral student Joe Spracklen, focuses on "package hallucinations": a critical vulnerability in which an LLM generates code that references software packages that do not exist. As AI becomes more integral to coding practice, understanding the implications of these hallucinations is crucial for developers and organizations alike.

Despite the groundbreaking potential of AI for coding tasks, the study shows that relying on LLMs can lead developers to produce insecure software without realizing it. The consequences extend beyond the development environment, because vulnerabilities introduced this way can propagate into deployed systems and affect software security globally. The researchers found that programmers who integrate AI-generated code into their workflows can inadvertently introduce vulnerabilities that malicious actors could exploit.

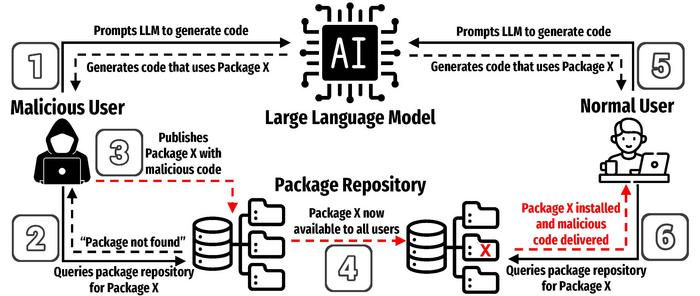

Central to the study is the concept of package hallucinations, in which an LLM suggests or generates references to software libraries that do not exist. Such hallucinations can lead to serious security breaches, because they can prompt developers to trust and install packages that may be unsafe or, worse, deliberately malicious. This flaw is particularly dangerous because modern coding practice relies heavily on third-party libraries and packages, which makes software development both easier and riskier.

Spracklen and his team conducted extensive testing, measuring how often these hallucinations occur across programming languages and scenarios. The results were alarming: out of over two million code samples generated using LLMs, nearly half a million references were made to packages that do not exist. This hallucination rate raises serious questions about the security of LLM-generated code and underscores the need for developers to keep a critical eye on AI output they integrate into their workflows.

The team's research also revealed that the rate of hallucination varied significantly across models. GPT-series models, for instance, exhibited a markedly lower hallucination rate than open-source models. This disparity underscores the need for continued scrutiny of the AI models used for code generation, since the safety and reliability of the resulting software depend heavily on their output. As LLMs become ubiquitous in software development (reportedly used by up to 97% of developers), addressing their limitations and vulnerabilities becomes increasingly vital.

Package hallucinations represent a unique risk because attackers can easily exploit them. An adversary can publish a package under a name that an LLM hallucinates and fill it with malicious code; a developer who installs the suggested package then runs that code and compromises their own environment. The tactic is particularly insidious because it exploits the trust developers place in LLM-generated code. Open-source package repositories such as PyPI and npm, which depend on a high level of trust from their users, provide an ideal environment for these attacks.

The researchers call for greater awareness among developers of the risks of package hallucination. They argue that as LLMs improve their natural language capabilities, user trust will inevitably grow, making developers more likely to fall victim to these vulnerabilities. Reliance on AI for critical coding tasks must therefore be balanced by an understanding of the associated risks, reinforcing the need for rigorous validation in software development.

Efforts to mitigate the risk of hallucinations include verification methods such as cross-referencing the packages in generated code against a master list of known packages. However, the UTSA team stresses that addressing this vulnerability effectively requires fixing its root causes within the LLMs themselves. The researchers have shared their findings with leading AI companies, including OpenAI and Meta, and urged them to improve the reliability of their models.

In summary, the UTSA research highlights an urgent concern at the intersection of AI and software development. As programming continues to evolve alongside AI, developers must remain vigilant about the security risks posed by the tools they rely on daily. The study warns against complacency in the face of technological innovation and calls for a proactive approach to hazards that can turn useful tools into facilitators of security breaches.

The ramifications of the research extend beyond individual developers or organizations; they point to a broader imperative for the tech community to prioritize security in the design and deployment of AI systems used for programming. Collective vigilance and a commitment to sound coding practices can help mitigate the risks of package hallucinations and other vulnerabilities, moving toward a more secure software ecosystem.

As the findings from UTSA circulate through academic and technical circles, they pave the way for essential discussions on the future integration of AI in coding, challenging the community to rethink how we approach trust and verification in an era increasingly dominated by machine learning and automation.

Understanding and adapting to the landscape shaped by LLMs is no longer optional but necessary. Developers must build resilience against package hallucinations and ensure that their trust in AI does not come at the cost of security and integrity in software development.

Subject of Research: Package Hallucinations in Code Generating LLMs

Article Title: We Have a Package for You! A Comprehensive Analysis of Package Hallucinations by Code Generating LLMs

News Publication Date: 12-Jun-2024

Web References: USENIX Security Symposium 2025

References: [UTSA Research Study on Package Hallucinations]

Image Credits: The University of Texas at San Antonio

Keywords: Cybersecurity, Artificial Intelligence, Software Development, Machine Learning, Package Management