Human tissue is intricate, complex and, of course, three dimensional. But the thin slices of tissue that pathologists most often use to diagnose disease are two dimensional, offering only a limited glimpse at the tissue’s true complexity. There is a growing push in the field of pathology toward examining tissue in its three-dimensional form. But 3D pathology datasets can contain hundreds of times more data than their 2D counterparts, making manual examination infeasible.

Credit: Andrew H. Song, Brigham and Women’s Hospital

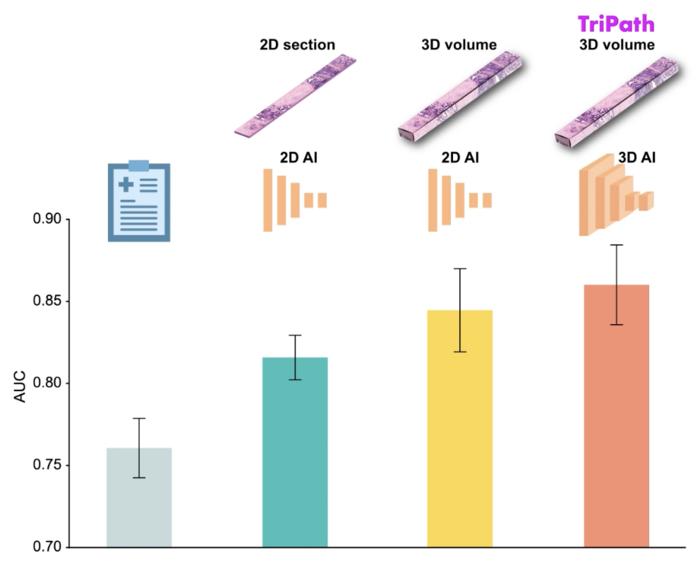

- Researchers developed Tripath to bridge the computational gaps in processing 3D tissue and to predict outcomes based on 3D morphological features

- Cancer recurrence models trained on 3D tissue volumes outperformed models trained on 2D tissue images

In a new study, researchers from Mass General Brigham and their collaborators present Tripath, new deep learning models that can use 3D pathology datasets to make clinical outcome predictions. In collaboration with the University of Washington, the research team imaged curated prostate cancer specimens using two high-resolution 3D imaging techniques. The models were then trained to predict prostate cancer recurrence risk from volumetric human tissue biopsies. By comprehensively capturing 3D morphologies from the entire tissue volume, Tripath performed better than pathologists and outperformed deep learning models that rely on 2D morphology and thin tissue slices. Results are published in Cell.

While the new approach needs to be validated in larger datasets before it can be further developed for clinical use, the researchers are optimistic about its potential to help inform clinical decision making.

“Our approach underscores the importance of comprehensively analyzing the whole volume of a tissue sample for accurate patient risk prediction, which is the hallmark of the models we developed and only possible with the 3D pathology paradigm,” said lead author Andrew H. Song, PhD, of the Division of Computational Pathology in the Department of Pathology at Mass General Brigham.

“Using advancements in AI and 3D spatial biology techniques, Tripath provides a framework for clinical decision support and may help reveal novel biomarkers for prognosis and therapeutic response,” said co-corresponding author Faisal Mahmood, PhD, of the Division of Computational Pathology in the Department of Pathology at Mass General Brigham.

“In our prior work in computational 3D pathology, we looked at specific structures such as the prostate gland network, but Tripath is our first attempt to use deep learning to extract sub-visual 3D features for risk stratification, which shows promising potential for guiding critical treatment decisions,” said co-corresponding author Jonathan Liu, PhD, at the University of Washington.

Authorship: In addition to Faisal Mahmood, Mass General Brigham authors include Andrew H. Song, Mane Williams, Drew F.K. Williamson, Guillaume Jaume, Andrew Zhang, and Bowen Chen. Additional authors include Sarah S.L. Chow, Gan Gao, Alexander S. Baras, Robert Serafin, Richard Colling, Michelle R. Downes, Xavier Farré, Peter Humphrey, Clare Verrill, Lawrence D. True, Anil V. Parwani, and co-corresponding author Jonathan T.C. Liu.

Disclosures: Song and Mahmood are inventors on a provisional patent that corresponds to the technical and methodological aspects of this study. Liu is a co-founder and board member of Alpenglow Biosciences, Inc., which has licensed the OTLS microscopy portfolio developed in his lab at the University of Washington.

Funding: Authors report funding support from the Brigham and Women’s Hospital (BWH) President’s Fund, Mass General Hospital (MGH) Pathology, the National Institute of General Medical Sciences (R35GM138216), Department of Defense (DoD) Prostate Cancer Research Program (W81WH-18-10358 and W81XWH-20-1-0851), the National Cancer Institute (R01CA268207), the National Institute of Biomedical Imaging and Bioengineering (R01EB031002), the Canary Foundation, the NCI Ruth L. Kirschstein National Service Award (T32CA251062), the Leon Troper Professorship in Computational Pathology at Johns Hopkins University, UKRI, mdxhealth, NHSX, and Clarendon Fund.

Paper cited: Song AH et al. “Analysis of 3D pathology samples using weakly supervised AI” Cell DOI: 10.1016/j.cell.2024.03.035

Journal

Cell

Method of Research

Experimental study

Subject of Research

Cells

Article Title

Analysis of 3D Pathology Samples using Weakly Supervised AI

Article Publication Date

9-May-2024

COI Statement

A.H.S. and F.M. are inventors on a provisional patent that corresponds to the technical and methodological aspects of this study. J.T.C.L. is a co-founder and board member of Alpenglow Biosciences, Inc., which has licensed the OTLS microscopy portfolio developed in his lab at the University of Washington.