In a groundbreaking advancement poised to redefine the boundaries of computational capability, researchers at Peking University have unveiled a revolutionary hardware system designed to tackle some of the most intractable problems in computer science—namely, NP-complete challenges that underpin critical real-world applications such as routing, scheduling, and network design. These problems have historically proven resistant to conventional computing methods due to their exponential complexity and the inherent limitations of traditional electronic architectures.

As silicon-based processors approach physical limits, with transistors shrinking to sizes where quantum effects disrupt their operation, the classical Turing machine framework—long the foundation of digital computation—faces severe constraints. The sequential and linear processing model intrinsic to Turing machines restricts the possibility of truly parallel data manipulation at scales necessary for efficiently solving NP-complete problems. This bottleneck has spurred intense research into alternative computational paradigms capable of transcending the barriers posed by physical miniaturization and algorithmic complexity.

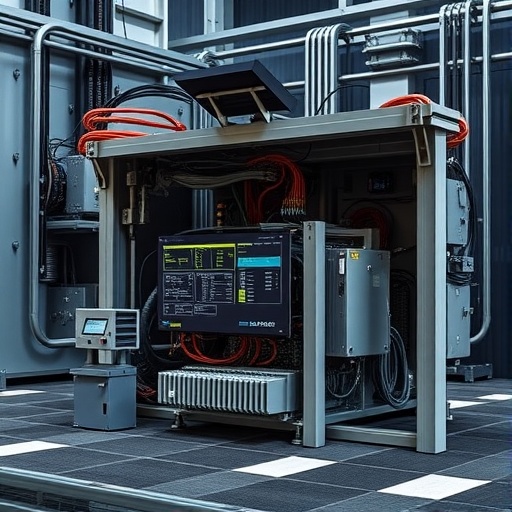

The team led by Professor Jin Xu has introduced an innovative computational model termed the Probe Machine, realized concretely through a hardware implementation called the Electronic Probe Computer (EPC60). Unlike traditional systems, the EPC60 abandons the sequential data processing dogma, adopting instead a multidimensional data representation alongside fully parallel probe operators. This architectural shift enables the simultaneous exploration of multiple solution paths, effectively leveraging massive parallelism to drastically reduce the time required for problem solving.

One of the core innovations lies in the EPC60’s use of specialized probe operators tailored to manipulate graph structures—the fundamental abstraction for NP-complete problems. The system classifies graphs into four distinct structural types and applies targeted operations such as vertex deletion, contraction, edge addition, and the intricate Kempe-change technique. These operations are executed independently and in parallel across numerous subgraphs, ensuring that computational resources are continuously engaged without idle delays.

The hardware design itself is modular and scalable, featuring standardized computing units housed within interlinked cabinets connected via high-speed optical channels. This modularity facilitates seamless system expansion, allowing the EPC60 to tackle increasingly large and complex problem instances by simply augmenting its computational fabric. This scalability is a vital attribute that addresses the burgeoning demand for high-performance computation in diverse industrial domains.

Benchmark results underscore the transformative potential of this architecture. EPC60 successfully resolved 3-coloring problems on graphs with up to 2,000 vertices, achieving flawless accuracy in under an hour—a feat unmatched by leading commercial solvers such as Gurobi. In direct comparisons, Gurobi’s accuracy plateaued at a mere 5–6% even after excessive runtimes exceeding 12 hours, while EPC60 consistently found valid solutions in dramatically reduced timeframes.

A particularly noteworthy achievement involved a challenging graph instance that eluded Gurobi’s solver across a 15-day computational trial. EPC60, by contrast, identified valid graph colorings in less than one minute, exemplifying its capacity to surmount problems that have long been considered computationally prohibitive. Such performance gains hint at a paradigm shift in how complex combinatorial problems can be tackled, moving away from incremental software optimizations toward fundamentally novel hardware-driven exploration.

Professor Xu emphasizes that the EPC60’s departure from the linear, Turing-based approach enables it to harness fully parallel computations at a scale previously unattainable. Its multidimensional data organization allows independent probe operators to execute concurrently without interference, effectively eliminating bottlenecks associated with sequential data processing. This architectural ingenuity embodies a new class of computing capable of addressing fundamental challenges in computational intelligence.

The implications of EPC60’s capabilities extend far beyond academic curiosity. Potential applications span sectors reliant on solving large, complex optimization problems—including supply chain logistics, circuit design, and telecommunications network configuration. By providing a general-purpose, hardware-based solver that significantly outperforms traditional software methods, EPC60 promises to accelerate innovation and operational efficiencies across these domains.

Looking ahead, the research team plans to further enhance the EPC architecture, expanding its scale and integrating it within high-performance computing environments to facilitate real-time industrial deployments. Such integration aims to capitalize on the device’s inherent parallelism and modularity, embedding its capabilities directly into workflows where rapid and accurate NP-complete problem solving is essential.

The realization of the Probe Machine concept as a tangible, scalable computing platform represents a profound milestone in computational research. It challenges long-held assumptions about the limits of classical computing and paves the way for new methodologies centered around parallelism and dimensionality in data processing. This breakthrough could herald an era where previously intractable problems become tractable, thereby unlocking new frontiers in science and industry.

In a broader sense, the development of EPC60 underscores the necessity of reimagining computational frameworks as physical and architectural limitations of existing technologies become increasingly pronounced. As traditional processor improvements plateau, halting Moore’s Law’s relentless advance, innovations like the EPC60 represent a vital frontier in the quest for more powerful, efficient, and versatile computation platforms.

Ultimately, the advances spearheaded by Professor Jin Xu and his team offer a compelling vision for the future of computing: one in which hardware and architecture co-evolve to transcend theoretical constraints, enabling faster, more accurate solutions to the complex problems that define our modern world.

Subject of Research: People

Article Title: A special machine for solving NP-complete problems.

Web References: http://dx.doi.org/10.1016/j.fmre.2025.05.010

Image Credits: Jin Xu, et al

Keywords: Mathematics, Statistics, Algorithms