Early and accurate diagnosis is critical to the success of cancer treatment, and a new study in BMC Cancer presents a multimodal fusion model designed to improve risk prediction in ductal carcinoma in-situ (DCIS). The research integrates advanced imaging analysis, deep learning algorithms, and clinical data to support individualized clinical decision-making, a notable step forward for the field.

Ductal carcinoma in-situ, a non-invasive precursor to invasive breast cancer, is diagnostically challenging for two reasons: it is heterogeneous, and microinfiltration is hard to predict. Microinfiltration is a subtle, early form of invasive behavior that can substantially change prognosis and treatment strategy. To address this, Yao and colleagues built a hybrid model that combines deep learning (DL), radiomics, and clinical features, aiming to overcome the limitations of unimodal diagnostic models.

Central to the study was the construction and validation of a multi-layered model on a multicenter cohort of 232 patients. The cohort was partitioned into training, validation, and external test sets so that performance could be assessed on data not used during development. The training set (103 patients) was used to fit the model, the validation set (43 patients) to tune it, and the external test set (86 patients) to gauge how well it generalizes across clinical environments.

One of the study’s most striking findings was that unimodal deep learning models overfit substantially and degraded on external testing. A DenseNet201 model, for instance, reached an area under the curve (AUC) of 0.85 on the training set but only 0.47 on the external test set. An AUC of 0.47 is no better than chance (0.5), indicating that the model did not carry over to a different clinical setting. This result underscores the need to integrate other data modalities to make predictions more robust.
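To make the 0.85 versus 0.47 contrast concrete: an AUC can be read as the probability that a randomly chosen positive case (here, a lesion with microinfiltration) receives a higher score than a randomly chosen negative case, with ties counting half. The minimal Python sketch below computes it that way by brute-force pair counting. The function name and the toy scores are illustrative assumptions, not code or data from the study.

```python
from typing import Sequence


def auc_mann_whitney(labels: Sequence[int], scores: Sequence[float]) -> float:
    """AUC as P(score of a random positive > score of a random negative).

    labels: 1 for positive cases, 0 for negative cases.
    Ties between a positive and a negative score count as half a win.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Toy example: 3 of the 4 positive/negative pairs are ranked correctly.
print(auc_mann_whitney([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # 0.75
```

A model that ranks cases at random lands near 0.5, so an external AUC of 0.47 means the DenseNet201 scores carried essentially no ranking information on the new cohort.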

In contrast, the multimodal fusion model achieved an AUC of 0.925 on the training set and 0.801 on the external test set. DeLong tests, which compare correlated AUCs measured on the same patients, showed that the multimodal model significantly outperformed its unimodal counterparts while remaining robust across heterogeneous patient cohorts. This supports the hypothesis that combining diverse data sources improves model reliability.
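Because the fusion model and each unimodal model are scored on the same patients, their AUCs are correlated, which is what the DeLong test accounts for. The NumPy/SciPy sketch below implements the classic DeLong structural-component variance estimate for a two-sided comparison of two AUCs. The function names are hypothetical, and the article does not say which software implementation the authors used.

```python
import math

import numpy as np
from scipy.stats import norm


def _structural_components(pos: np.ndarray, neg: np.ndarray):
    """Return the AUC plus DeLong's per-case placement values for one model."""
    # psi = 1 if the positive outscores the negative, 0.5 on ties, 0 otherwise.
    diff = pos[:, None] - neg[None, :]
    psi = (diff > 0).astype(float) + 0.5 * (diff == 0)
    v10 = psi.mean(axis=1)  # one placement value per positive case
    v01 = psi.mean(axis=0)  # one placement value per negative case
    return psi.mean(), v10, v01


def delong_test(labels, scores_a, scores_b):
    """Two-sided DeLong test for the difference between two correlated AUCs.

    Both score arrays must be predictions for the same patients.
    Returns (auc_a, auc_b, z, p_value).
    """
    y = np.asarray(labels) == 1
    a = np.asarray(scores_a, dtype=float)
    b = np.asarray(scores_b, dtype=float)
    m, n = int(y.sum()), int((~y).sum())
    if m < 2 or n < 2:
        raise ValueError("need at least two positive and two negative cases")

    auc_a, v10_a, v01_a = _structural_components(a[y], a[~y])
    auc_b, v10_b, v01_b = _structural_components(b[y], b[~y])

    # 2x2 covariance matrices of the placement values (rows = models).
    s10 = np.cov(np.vstack([v10_a, v10_b]))
    s01 = np.cov(np.vstack([v01_a, v01_b]))
    cov = s10 / m + s01 / n

    var_diff = cov[0, 0] + cov[1, 1] - 2.0 * cov[0, 1]
    if var_diff <= 0:
        raise ValueError("zero variance: the two models rank cases identically")
    z = (auc_a - auc_b) / math.sqrt(var_diff)
    p_value = 2.0 * norm.sf(abs(z))
    return auc_a, auc_b, z, p_value
```

In the study’s setting, `scores_a` and `scores_b` would be the fusion model’s and a unimodal model’s predicted probabilities for the same external-test patients.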

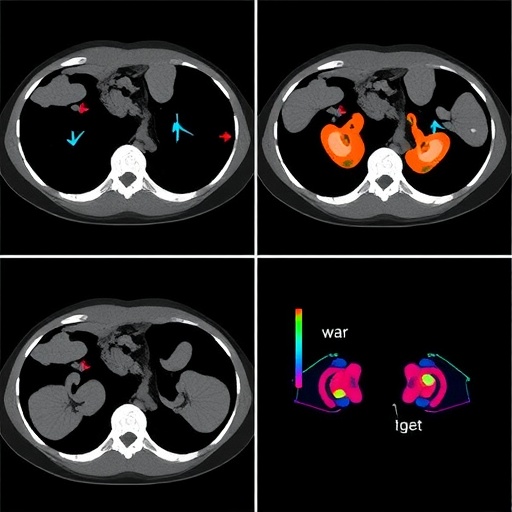

The model uses a hierarchical fusion strategy that merges peri-tumor imaging histology (features drawn from the region surrounding the tumor) with dual-view deep learning inputs, together with clinical and radiomic features. This hierarchical integration is intended to capture spatial heterogeneity around the tumor, which may be crucial for detecting subtle microinfiltrative patterns that conventional imaging analysis can miss. By drawing on imaging data at multiple scales and from more than one view, the model exploits complementary information to refine its predictions.
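The article does not spell out the fusion architecture, so the following is only a hypothetical sketch of what two-level ("hierarchical") feature fusion can look like. First, deep-learning embeddings from the two views are merged. Then that block is joined with peritumoral radiomic and clinical features and passed to a logistic-regression classifier. All names, block sizes, and hyperparameters are assumptions for illustration, written in plain NumPy.

```python
import numpy as np


def standardize(block: np.ndarray) -> np.ndarray:
    """Z-score each column so no feature block dominates by scale alone.

    For brevity this uses the statistics of the array passed in; a real pipeline
    would fit means and SDs on the training set only and reuse them on test data.
    """
    mean = block.mean(axis=0)
    sd = block.std(axis=0)
    return (block - mean) / np.where(sd > 0, sd, 1.0)


def hierarchical_fuse(view1_dl, view2_dl, peritumor_radiomics, clinical):
    """Hypothetical two-level fusion of per-patient feature blocks."""
    # Level 1: merge deep-learning embeddings from the two imaging views.
    dual_view = standardize(np.hstack([view1_dl, view2_dl]))
    # Level 2: join the dual-view block with radiomic and clinical features.
    return np.hstack([dual_view, standardize(peritumor_radiomics), standardize(clinical)])


def _sigmoid(t):
    return 1.0 / (1.0 + np.exp(-t))


def fit_logistic(x, y, lr=0.1, epochs=2000, l2=1e-2):
    """L2-regularized logistic regression fitted by batch gradient descent."""
    x1 = np.hstack([np.ones((len(x), 1)), x])  # prepend an intercept column
    w = np.zeros(x1.shape[1])
    for _ in range(epochs):
        grad = x1.T @ (_sigmoid(x1 @ w) - y) / len(y)
        grad[1:] += l2 * w[1:]  # leave the intercept unpenalized
        w -= lr * grad
    return w


def predict_proba(w, x):
    """Predicted probability of the positive class for each row of x."""
    return _sigmoid(np.hstack([np.ones((len(x), 1)), x]) @ w)
```

Feature-level concatenation is only one design choice; decision-level fusion, which averages or stacks per-branch predicted probabilities, is a common alternative.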

Beyond statistical performance, interpretability was a central focus for the researchers. Using Gradient-weighted Class Activation Mapping (Grad-CAM), they visualized the image regions driving the model’s predictions and found 81% overlap with radiologist-annotated zones. This alignment can build trust in the algorithm’s decisions and make it easier for clinicians to engage with its output, because the computational results are linked visually to familiar diagnostic landmarks.
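Grad-CAM weights each feature map of a convolutional layer by the spatial average of the class score’s gradient with respect to that map, sums the weighted maps, and keeps only positive values. The NumPy sketch below assumes the activations and gradients have already been extracted from a trained network (for example, through framework hooks) and that the map is already at the annotation’s resolution. The overlap score is also an assumption: the article reports 81% overlap without defining the metric, so `attention_overlap` is a hypothetical choice (the share of high-attention pixels that fall inside the annotated region).

```python
import numpy as np


def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Grad-CAM map from one convolutional layer, scaled to [0, 1].

    activations, gradients: arrays of shape (K, H, W) holding the layer's feature
    maps and the gradient of the target class score with respect to them.
    """
    weights = gradients.mean(axis=(1, 2))             # alpha_k: pooled gradients
    cam = np.tensordot(weights, activations, axes=1)  # sum_k alpha_k * A_k -> (H, W)
    cam = np.maximum(cam, 0.0)                        # ReLU keeps positive evidence
    peak = cam.max()
    return cam / peak if peak > 0 else cam


def attention_overlap(cam: np.ndarray, annotation_mask: np.ndarray, threshold: float = 0.5) -> float:
    """Hypothetical metric: fraction of high-attention pixels inside the annotation."""
    hot = cam >= threshold
    if not hot.any():
        return 0.0
    return float((hot & annotation_mask.astype(bool)).sum() / hot.sum())
```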

Calibration analysis further supported the model’s clinical reliability. Hosmer-Lemeshow tests showed no significant deviation from ideal calibration (p > 0.05), meaning there was no evidence that predicted risks differed systematically from observed outcomes. Reliable calibration matters for clinical adoption because risk scores used in treatment planning should neither overstate nor understate patient risk.
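The Hosmer-Lemeshow test sorts patients by predicted risk, splits them into groups (conventionally 10), and compares observed with expected event counts in each group using a chi-squared statistic with groups - 2 degrees of freedom. A minimal sketch follows, assuming equal-size risk groups. A large p-value means miscalibration was not detected, which is weaker than proving calibration, particularly in small cohorts.

```python
import numpy as np
from scipy.stats import chi2


def hosmer_lemeshow(y_true, y_prob, groups=10):
    """Hosmer-Lemeshow goodness-of-fit test over equal-size risk groups.

    Returns (statistic, p_value) using a chi-squared reference with groups - 2 df.
    """
    y = np.asarray(y_true, dtype=float)
    p = np.asarray(y_prob, dtype=float)
    order = np.argsort(p, kind="stable")  # sort patients by predicted risk
    stat = 0.0
    for idx in np.array_split(order, groups):
        n_g = len(idx)
        if n_g == 0:
            continue  # possible only when there are fewer patients than groups
        observed = y[idx].sum()  # events seen in this risk group
        expected = p[idx].sum()  # events the model predicted
        mean_p = expected / n_g
        denom = n_g * mean_p * (1.0 - mean_p)
        if denom > 0:
            stat += (observed - expected) ** 2 / denom
    return stat, float(chi2.sf(stat, groups - 2))
```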

To evaluate real-world utility, the researchers used decision curve analysis (DCA), which showed a net clinical benefit of the multimodal model over conventional approaches. Net benefit differences ranged from 7% to 28% across risk thresholds from 5% to 80%, suggesting that the model could guide treatment decisions more effectively and potentially reduce overtreatment.
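Decision curve analysis scores a model at each threshold probability p_t by its net benefit: TP/n minus FP/n multiplied by p_t / (1 - p_t), the odds at which a clinician would be indifferent between treating and not treating. The Python sketch below computes that quantity together with the "treat all" reference strategy. The function names and the toy data are illustrative, not from the study.

```python
from typing import Sequence


def net_benefit(y_true: Sequence[int], y_prob: Sequence[float], threshold: float) -> float:
    """Net benefit of treating every patient whose predicted risk is >= threshold."""
    if not 0.0 < threshold < 1.0:
        raise ValueError("threshold must lie strictly between 0 and 1")
    n = len(y_true)
    treated = [y for y, p in zip(y_true, y_prob) if p >= threshold]
    tp = sum(1 for y in treated if y == 1)
    fp = len(treated) - tp
    # False positives are penalized by the odds implied by the threshold.
    return tp / n - (fp / n) * threshold / (1.0 - threshold)


def net_benefit_treat_all(y_true: Sequence[int], threshold: float) -> float:
    """Reference strategy: treat everyone regardless of the model."""
    prevalence = sum(y_true) / len(y_true)
    return prevalence - (1.0 - prevalence) * threshold / (1.0 - threshold)
```

Evaluating these over a grid of thresholds (the study reports 5% to 80%) for the model, "treat all", and "treat none" (net benefit 0) yields the decision curve.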

The study’s implications resonate strongly within precision oncology, suggesting that integrated computational frameworks can help manage the inherent variability and complexity of cancer biology. By embedding heterogeneous data inputs into a single analytic pipeline, the model gives clinicians a refined tool for assessing the subtle progression risks of DCIS, which could support more personalized and timely interventions.

This multidisciplinary approach, spanning radiomics, advanced DL architectures, and clinical data analytics, points to a likely direction for oncologic diagnostics. The hierarchical fusion model improves diagnostic accuracy while also offering interpretability, and medical AI needs both, because insights must be reliable and comprehensible to be actionable.

Moreover, the research suggests that similar fusion strategies could be extended to other cancer types and complex diseases characterized by spatial and biological heterogeneity. Combining multimodal imaging, patient-specific clinical markers, and AI-driven pattern recognition is a promising paradigm for characterizing disease and guiding tailored therapies.

Despite the promising findings, the authors acknowledge the importance of further validation in larger, more diverse cohorts and the need for prospective studies to ascertain clinical impact in real-world settings. Integrating this model into existing healthcare workflows will require addressing computational resource demands and ensuring streamlined interfaces for end-users.

Looking forward, the study illustrates the potential of combining deep learning with radiomics and clinical information. It underlines the value of moving beyond unimodal approaches toward multi-factorial data to tackle difficult diagnostic problems such as predicting microinfiltration in DCIS.

As computational power continues to grow and imaging modalities become increasingly sophisticated, the fusion of diverse data streams into unified predictive systems promises to push the boundaries of early cancer detection and personalized treatment planning.

In sum, Yao and colleagues present a high-performing, interpretable multimodal fusion model that directly addresses the pitfalls of unimodal deep learning systems. By improving risk prediction for DCIS microinfiltration, the model could help clinicians make more informed decisions and ultimately improve outcomes in breast cancer care.

The study’s contribution lies not only in its technical results but also in suggesting that complex computational methods can feasibly be combined with traditional medical expertise, a step forward for AI-assisted oncology diagnostics.

Subject of Research:

Prediction of intraductal cancer microinfiltration in ductal carcinoma in-situ (DCIS) through multimodal data fusion combining peri-tumor imaging histology, dual-view deep learning, radiomics, and clinical features.

Article Title:

Prediction of intraductal cancer microinfiltration based on the hierarchical fusion of peri-tumor imaging histology and dual view deep learning.

Article References:

Yao, G., Huang, Y., Shang, X. et al. Prediction of intraductal cancer microinfiltration based on the hierarchical fusion of peri-tumor imaging histology and dual view deep learning. BMC Cancer 25, 1564 (2025). https://doi.org/10.1186/s12885-025-15054-3

Image Credits: Scienmag.com

DOI: https://doi.org/10.1186/s12885-025-15054-3