At the intersection of cognitive science and artificial intelligence, a new study examines how humans and large language models (LLMs) encode the structure of sentences. By testing both human participants and LLMs, it asks whether each represents sentences as latent tree structures. The question matters because such representations bear on the cognitive processes that underpin language comprehension and production in people and in machines, and answering it helps connect our understanding of the two systems.

At the core of the research is a one-shot learning task. After a single demonstration, participants and LLMs such as ChatGPT had to infer which words should be deleted from a sentence. A total of 372 people took part: native speakers of English, native speakers of Chinese, and bilingual speakers of both languages. Because the deletion rule must be inferred from one example, the task depends on grasping the syntactic relationships and hierarchical organization of the sentence.

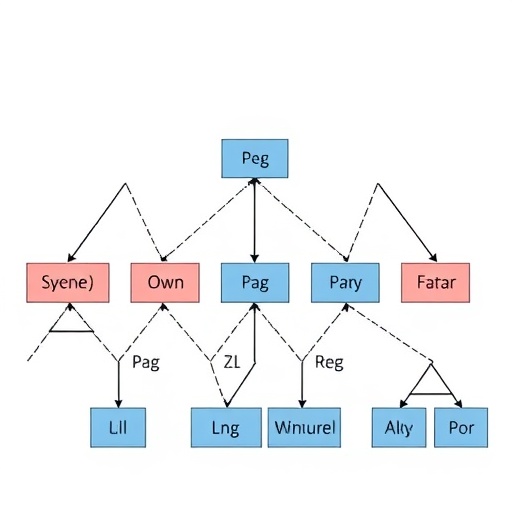

The findings show that both groups tend to delete constituents rather than word strings that do not form such a unit. Constituents are the grammatical units from which sentences are built. Both groups also follow deletion rules specific to Chinese and English, respectively. Models that rely only on the properties of individual words and their positions in the sentence cannot explain this pattern. Instead, both humans and LLMs appear to draw on a latent, tree-like representation of sentence structure.
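
The distinction between constituents and non-constituent strings can be made concrete with a minimal Python sketch. It assumes a hypothetical, hand-built, unlabeled tree for the sentence "the cat sat on the mat". A deleted word string counts as a constituent when it covers exactly the words under one node of the tree. The function names `constituent_spans` and `is_constituent` and the deletion data are illustrative only. The study's own materials and scoring are not described in this article.

```python
from typing import List, Set, Tuple, Union

Tree = Union[str, List["Tree"]]  # a word, or a list of subtrees (labels omitted)
Span = Tuple[int, int]           # half-open [start, end) over word positions


def constituent_spans(tree: Tree) -> Set[Span]:
    """Collect the word span covered by every node, single words included."""
    spans: Set[Span] = set()

    def walk(node: Tree, start: int) -> int:
        # Returns the position just past the last word covered by `node`.
        if isinstance(node, str):
            end = start + 1
        else:
            end = start
            for child in node:
                end = walk(child, end)
        spans.add((start, end))
        return end

    walk(tree, 0)
    return spans


def is_constituent(tree: Tree, start: int, end: int) -> bool:
    """A word string is a constituent if some node covers exactly those words."""
    return (start, end) in constituent_spans(tree)


# Hypothetical, hand-built tree for "the cat sat on the mat".
tree: Tree = [["the", "cat"], ["sat", ["on", ["the", "mat"]]]]

print(is_constituent(tree, 3, 6))  # "on the mat" -> True
print(is_constituent(tree, 1, 3))  # "cat sat" -> False

# Hypothetical deleted spans from four responses; share that are constituents.
deletions = [(3, 6), (4, 6), (1, 3), (3, 6)]
rate = sum(is_constituent(tree, s, e) for s, e in deletions) / len(deletions)
print(rate)  # 0.75
```

Counting single words as trivial constituents is a simplifying choice made for this sketch.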

By analyzing which words were deleted, the researchers reconstructed the constituency tree structures underlying the responses of both groups. This result reflects the sophistication of human cognitive processes. It also suggests that LLMs encode and use tree-structured sentence representations of their own. The convergence raises questions about how language processing in humans compares with processing in AI systems. It also challenges accounts of sentence processing that rely only on the properties of individual words and their linear order.
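
The article does not say how the trees were reconstructed. The Python sketch below is an illustration only of why deletion data can constrain a tree. Each deleted word string is treated as a candidate constituent. The sketch keeps the most frequent candidates that never partly overlap (cross) one another, then nests the words accordingly. The greedy selection rule, the function names `crosses`, `select_spans`, and `build_tree`, and the deletion counts are all assumptions for this example. None of them is the authors' algorithm.

```python
from collections import Counter
from typing import List, Set, Tuple, Union

Span = Tuple[int, int]           # half-open [start, end) over word positions
Tree = Union[str, List["Tree"]]  # a word, or a list of subtrees (no labels)


def crosses(a: Span, b: Span) -> bool:
    """True if two spans partly overlap, i.e. neither contains the other."""
    (s1, e1), (s2, e2) = a, b
    return s1 < s2 < e1 < e2 or s2 < s1 < e2 < e1


def select_spans(deletions: List[Span]) -> List[Span]:
    """Greedily keep the most frequently deleted spans that cross no kept span."""
    kept: List[Span] = []
    for span, _count in Counter(deletions).most_common():
        if not any(crosses(span, k) for k in kept):
            kept.append(span)
    return kept


def build_tree(words: List[str], spans: List[Span]) -> Tree:
    """Nest words into lists following a set of non-crossing spans."""
    all_spans: Set[Span] = set(spans) | {(0, len(words))}

    def build(start: int, end: int) -> List[Tree]:
        children: List[Tree] = []
        i = start
        while i < end:
            # Widest kept span starting at i that fits inside the current node.
            ends = [e for (s, e) in all_spans
                    if s == i and e <= end and (s, e) != (start, end)]
            j = max(ends, default=i + 1)
            children.append(words[i] if j - i == 1 else build(i, j))
            i = j
        return children

    return build(0, len(words))


words = "the cat sat on the mat".split()
# Hypothetical deletion data: each tuple is one response's deleted span.
deletions = [(3, 6)] * 4 + [(4, 6)] * 3 + [(0, 2)] * 2 + [(2, 6)] * 2 + [(1, 3)]
kept = select_spans(deletions)
reconstructed = build_tree(words, kept)
print(reconstructed)
# [['the', 'cat'], ['sat', ['on', ['the', 'mat']]]]
```

In this toy case the non-constituent string "cat sat" (span (1, 3)) is discarded. It crosses the more frequent candidates "the cat" and "sat on the mat", which leaves the bracketing [[the cat] [sat [on [the mat]]]].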

The implications extend beyond academic interest. If both humans and LLMs actively use constituency trees, AI systems could be designed to align more closely with human language processing. The findings also invite further research into how language is structured in the brain, which could inform educational methods and AI training protocols.

The research also has ethical implications for how AI is used in linguistic tasks. LLMs are becoming part of daily life, so knowing where their processing resembles human processing can help guard against miscommunication. It can also help ensure that AI systems complement human capabilities rather than replace them. In this sense, the study sheds light on the connections between human cognition and machine learning.

As researchers continue to probe language processing, the parallels between human cognition and LLMs open possibilities for future AI development. Much remains to be explored in syntax, semantics, and the neural basis of language, and that work could guide both cognitive science and AI research. A better understanding of these shared structural representations may also change how we approach language acquisition and the use of AI in communication.

This study opens new avenues of inquiry in linguistics, cognitive science, and artificial intelligence. The researchers show that both humans and LLMs actively use latent tree-structured representations when processing sentences. That finding is an important step toward understanding how linguistic structure is represented. As such findings accumulate, they should deepen our understanding of how language works and of how new technologies can bridge human communication and machine understanding.

Further work in this area could lead to innovations in educational technology, language-processing applications, and AI-assisted communication platforms. Systems that handle sentence structure more as humans do could make interaction between people and machines feel more natural.

The convergence between human and AI capabilities is scientifically interesting. It is also relevant to integrating AI into everyday tasks, enriching communication, and supporting understanding across languages and cultures. The study shows how interdisciplinary research can link cognitive science and artificial intelligence to produce new findings about how language is represented and processed.

In conclusion, the study by Liu, Xiang, and Ding presents evidence that humans and LLMs share the ability to use tree-structured sentence representations, and it calls for further examination of these representations. The evidence informs both theoretical and applied linguistics and may change how we understand and use language across modalities. Much remains to be learned about how humans and machines process language, but this work lays a foundation for future discoveries.

Subject of Research: Tree-structured sentence representations in humans and large language models.

Article Title: Active use of latent tree-structured sentence representation in humans and large language models.

Article References:

Liu, W., Xiang, M. & Ding, N. Active use of latent tree-structured sentence representation in humans and large language models. Nat Hum Behav (2025). https://doi.org/10.1038/s41562-025-02297-0

Image Credits: AI Generated

DOI: 10.1038/s41562-025-02297-0

Keywords: language processing, large language models, cognitive science, sentence structure, constituency trees, artificial intelligence.