Growing interest has been observed in foundation models in recent years, which can be attributed to their sufficient pre-training on web-scale datasets and their superior ability to generalize to various downstream tasks. Not long after, ChatGPT, empowered by the GPT foundation model, has become a great commercial success, owing to its real-time and reasonable language generation and user interaction. Going back to the vision realm, the exploration of foundation models is still in its infancy. The pioneering work of contrastive language-image pretraining (CLIP) effectively combines image-text modalities, enabling zero-shot generalization to novel visual concepts. However, its generalization ability for vision tasks remains unsatisfactory due to the scarcity of abundant training data, unlike in natural language processing (NLP).

Credit: Beijing Zhongke Journal Publising Co. Ltd.

Growing interest has been observed in foundation models in recent years, which can be attributed to their sufficient pre-training on web-scale datasets and their superior ability to generalize to various downstream tasks. Not long after, ChatGPT, empowered by the GPT foundation model, has become a great commercial success, owing to its real-time and reasonable language generation and user interaction. Going back to the vision realm, the exploration of foundation models is still in its infancy. The pioneering work of contrastive language-image pretraining (CLIP) effectively combines image-text modalities, enabling zero-shot generalization to novel visual concepts. However, its generalization ability for vision tasks remains unsatisfactory due to the scarcity of abundant training data, unlike in natural language processing (NLP).

More recently, Meta AI Research released a promptable segment anything model (SAM). By incorporating a single user interface as prompt, SAM is capable of segmenting any object in any image or any video without additional training, which is often referred to as zero-shot transfer in the vision community. As suggested by the authors, SAM′s capabilities are driven by a vision foundational model that has been trained on a massive SA-1B dataset containing more than 11 million images and one billion masks. Meanwhile, the authors have released an impressive online demo to showcase SAM′s capabilities at SAM is designed to generate a valid segmentation result for any prompt, where prompts can include foreground/background points, a rough box or mask, freeform text, or any other information indicating what to segment in an image. The latest project offers three prompt modes: click mode, box mode and everything mode. Click mode allows users to segment objects with one or more clicks, either including and excluding them from the object. Box mode allows for object segmentation by roughly drawing a bounding box and using alternative click prompts. Everything mode automatically identifies and masks all objects in an image.

The emergence of SAM has undoubtedly demonstrated strong generalization across various images and objects, opening up new possibilities and avenues for applications in intelligent image analysis and understanding, such as augmented reality and human computer interaction. Some practitioners from both industry and academia have gone so far as to assert that “segmentation has reached its endpoint” and “the computer vision community is undergoing a seismic shift”. Actually, a dedicated dataset for pre-training is hard to encompass the vast array of unusual real-world scenarios and imaging modalities, particularly for computer vision community with a variety of conditions (e.g., low-light, bird′s-eye view, fog, rain), or employing various input modalities (e.g., depth, infrared, event, point cloud, CT, MRI), and with numerous real-world applications. Thus, it is of great practical interest to investigate how well SAM can infer or generalize under different scenarios and applications.

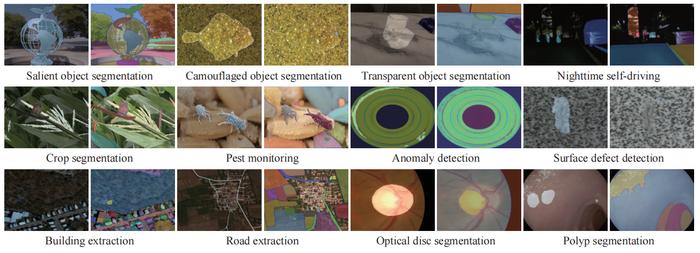

This leads to carry out this study, examining SAM′s performance across a diverse range of real-world segmentation applications, as illustrated in Fig. 1. Specifically, researchers employ SAM in various practical scenarios, including natural image, agriculture, manufacturing, remote sensing and healthcare. Meanwhile, they discuss SAM′s benefits and limitations in practice. Based on these studies, they have made the following observations:

1) Excellent generalization on common scenes. Experiments on various images validate SAM′s effectiveness across different prompt modes, demonstrating its ability to generalize well to typical natural image scenarios, especially when target regions distinct prominently from their surroundings. This emphasizes the superiority of the promptable SAM′s model design and the strength of its massive and diverse training data source.

2) Require strong prior knowledge. During the usage of SAM, researchers observe that for complex scenes, e.g., crop segmentation and fundus image segmentation, more manual prompts with prior knowledge are required, which could potentially result in a suboptimal user experience. Additionally, they notice that SAM tends to favor selecting the foreground mask. When applying the SAM model to shadow detection task, even with a large number of click prompts, its performance remains poor. This may be due to the strong foreground bias in its pre-training dataset, which hinders its ability to handle certain scenarios effectively.

3) Less effective in low-contrast applications. Segmenting objects with similar surrounding elements is considered challenging scenarios, especially when dealing with transparent or camouflaged objects that are “seamlessly” embedded in their surroundings. Experiments reveal that there is considerable room for exploring and enhancing SAM′s robustness in complex scenes with low-contrast elements.

4) Limited understanding of professional data. Researchers apply SAM to real-world medical and industrial scenarios and discover that it produces unsatisfactory results for professional data, particularly when using box mode and everything mode. This reveals SAM′s limitations in understanding these practical scenarios. Moreover, even with click mode, both the user and the model are required to possess certain domain-specific knowledge and understanding of the task at hand.

5) Smaller and irregular objects can pose challenges for SAM. Remote sensing and agriculture present additional challenges, such as irregular buildings and small-sized streets captured from the aerial imaging sensors. These complexities make it challenging for SAM to produce complete segmentation. How to design effective strategies for SAM in such cases is still an open issue.

This study examines SAM′s performance in various scenarios, and provides some observations and insights toward promoting the development of foundational models in vision realm. While researchers have tested many tasks, not all downstream applications have been covered. A multitude of fascinating segmentation tasks and scenarios are encouraged to be explored in future research.

See the article:

Ji, W., Li, J., Bi, Q. et al. Segment Anything Is Not Always Perfect: An Investigation of SAM on Different Real-world Applications. Mach. Intell. Res. (2024).

Journal

Machine Intelligence Research

Article Title

Segment Anything Is Not Always Perfect: An Investigation of SAM on Different Real-world Applications

Article Publication Date

12-Apr-2024