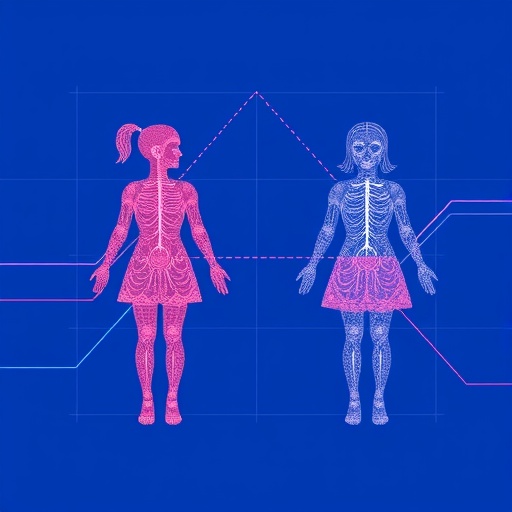

In recent years, the integration of artificial intelligence and machine learning into healthcare has ushered in a new era of possibilities and challenges. One particularly controversial and thought-provoking area of this integration is the inference of gender from electronic health records (EHR). A study by Gronsbell et al., titled “When algorithms infer gender: revisiting computational phenotyping with electronic health records data,” sheds significant light on this issue. The implications of algorithmically derived gender classifications span ethical, medical, and social dimensions, demanding urgent discussion among clinicians, researchers, and policymakers alike.

Gender inference in the context of EHR might sound like a technical nuance, but its implications are far-reaching. Historically, medical research has often taken a binary approach to gender, treating it as either male or female. However, nuances in gender identity, expression, and experience complicate this binary view. The traditional methods of collecting gender data in medical records do not always reflect the complexities of human identity. With algorithms now able to process EHR data at a scale that human analysts cannot match, we must critically examine how these technologies may misrepresent or oversimplify gender information.

The research presented by Gronsbell et al. indicates that many algorithms rely heavily on specific variables within EHR to classify gender, often defaulting to binary categories. This reliance can lead to inaccuracies in understanding patient demographics and their medical needs. It raises pertinent questions about how health disparities might be magnified when these algorithms fail to account for non-binary, transgender, and gender-nonconforming individuals. The research highlights the pressing need for more inclusive data practices that reflect the complexities of gender identity.

The methodology employed by Gronsbell and colleagues is particularly noteworthy. They delve into the specific algorithms used in computational phenotyping, looking at how these systems classify gender based on health data. Their analysis reveals a concerning trend: many systems utilize historical data that may be inaccurate due to outdated understandings of gender. For instance, if an algorithm is trained on datasets that predominantly feature binary gender classifications, it may struggle to accurately interpret or include data on non-binary patients, potentially leading to a generation of healthcare solutions that do not consider their specific needs.
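The training-data limitation described above can be illustrated with a toy example. The following is a minimal sketch, not the method used in the study: a simple voting classifier learns the most common recorded label for each code, and its output can never leave the set of labels it was trained on. The codes (`code_a`, `code_b`, and so on), the labels, and the function names are all hypothetical.

```python
from collections import Counter, defaultdict

def train(records):
    """Learn, for each (hypothetical) EHR code, the most common recorded label."""
    counts = defaultdict(Counter)
    for codes, label in records:
        for code in codes:
            counts[code][label] += 1
    return {code: c.most_common(1)[0][0] for code, c in counts.items()}

def predict(model, codes, default="unknown"):
    """Vote across the patient's codes; fall back to `default` if no code was seen in training."""
    votes = Counter(model[c] for c in codes if c in model)
    return votes.most_common(1)[0][0] if votes else default

# Hypothetical training data: every record carries only a binary label.
training = [
    ({"code_a", "code_b"}, "female"),
    ({"code_a"}, "female"),
    ({"code_c"}, "male"),
    ({"code_c", "code_d"}, "male"),
]

model = train(training)

# The only labels the model can ever emit are the ones present in its training data.
label_space = set(model.values())
```

Whatever a patient's actual identity, a model built this way can only return one of the labels in `label_space` (or the fallback), which is the structural problem the paragraph above describes.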

Given the potential for misclassification, the authors urge healthcare providers and technologists to reconsider the design of algorithms used for gender inference. Their findings emphasize the importance of creating more robust models that can accommodate multiple gender identities. This could involve integrating qualitative data, such as self-reported gender identity, in addition to the quantitative data captured in health records. Such a shift could not only improve patient care but also promote a more accurate representation of diverse patient populations.
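One way to picture the integration of self-reported identity is as a simple precedence rule. The sketch below is an illustration under stated assumptions, not a design taken from the study: the `GenderRecord` type, the `resolve_gender` function, and the `source` values are hypothetical names chosen for this example. It prefers a self-reported value when one exists, falls back to an inferred label otherwise, and always records where the value came from.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class GenderRecord:
    value: str   # label to use downstream, kept as the patient stated it when available
    source: str  # "self_reported", "inferred", or "missing"

def resolve_gender(self_reported: Optional[str], inferred: Optional[str]) -> GenderRecord:
    """Prefer the patient's own statement; use an algorithmic label only as a fallback."""
    if self_reported and self_reported.strip():
        # No mapping onto a fixed category list: the stated value is only normalized.
        return GenderRecord(self_reported.strip().lower(), "self_reported")
    if inferred:
        return GenderRecord(inferred, "inferred")
    return GenderRecord("unknown", "missing")
```

Keeping the `source` field alongside the value lets later analyses separate inferred labels from self-reported ones instead of treating them as equally reliable.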

Moreover, the implications of inaccurate gender inference extend beyond individual patient care. Public health statistics rely heavily on demographic data to allocate resources, evaluate health outcomes, and inform policy decisions. If data concerning gender is flawed due to algorithmic misclassification, entire populations can be underserved. For example, well-documented health disparities exist across gender lines, and when data fails to capture the complexity of these differences, interventions may miss critical opportunities to address specific healthcare needs.
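A small piece of arithmetic shows how this plays out in aggregate counts. The cohort below is entirely hypothetical and the numbers are invented for illustration, not drawn from the study; `undercount` is a name made up for this sketch. It compares per-category counts from self-reported data with counts from a set of binary-only inferred labels.

```python
from collections import Counter

def undercount(self_reported, inferred):
    """Per category: self-reported count minus the count the inferred labels give."""
    truth, seen = Counter(self_reported), Counter(inferred)
    return {group: truth[group] - seen[group] for group in truth}

# Hypothetical cohort of 100 people (illustrative numbers only).
self_reported = ["woman"] * 48 + ["man"] * 47 + ["non-binary"] * 5

# Hypothetical output of a binary-only inference step for the same 100 people.
inferred = ["woman"] * 50 + ["man"] * 50

gaps = undercount(self_reported, inferred)
```

In this made-up cohort, the five non-binary people do not appear in the inferred counts at all, while the two binary categories are each overstated, so any resource allocation based on the inferred table would miss that group entirely.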

The challenge of algorithmic transparency is another critical dimension discussed in the study. As these technologies become more integrated into healthcare systems, understanding how algorithms make decisions becomes crucial. The authors advocate for greater transparency in the design of these algorithms, emphasizing the need for open dialogues between data scientists, healthcare providers, and the communities affected by these algorithms. This would not only foster trust but also ensure that the systems are designed with consideration for all potential patient identities.

In addition to advocacy for transparency, Gronsbell et al. emphasize the importance of interdisciplinary collaboration. The intersection of technology, healthcare, and social sciences presents a unique opportunity to reconcile the complexities of gender in medical data. By collaborating across these domains, stakeholders can better identify potential biases in algorithms and work towards creating more equitable health practices. Ethicists, sociologists, and medical professionals must work together to ensure that solutions reflect the realities of patients’ lives, rather than outdated or simplistic models.

The study also opens up discussions on the role of regulatory frameworks in governing the use of AI in healthcare. Currently, there is a lack of standardized guidelines on how to ethically and effectively implement AI technologies in ways that respect patient identity. The authors call for the establishment of comprehensive regulatory frameworks that prioritize the accuracy and ethical implications of gender inference algorithms. Such regulations could set standards for data collection, algorithm design, and patient consent, ultimately contributing to a more equitable healthcare system.

Privacy concerns also emerge as a significant ethical issue surrounding gender inference in healthcare. Utilizing EHR data for algorithm training often raises questions about patient consent and confidentiality. The authors highlight the necessity for stringent privacy measures, ensuring that individuals have control over how their data is used in algorithmic models. Developing responsible data governance frameworks will be essential in navigating these ethical waters and maintaining patient trust in healthcare systems.

As the discourse surrounding gender and data continues to evolve, the voices of underrepresented communities must be amplified. It is crucial not only that algorithms be designed to be inclusive, but also that they incorporate feedback and experiences from gender-diverse groups. Alienating these communities through poorly designed algorithms can lead to a cycle of misinformation and inequitable healthcare outcomes. Actively engaging with these communities will help foster an inclusive environment where their health needs are thoughtfully considered.

Ultimately, the research by Gronsbell et al. underscores a significant tension in modern healthcare: the balance between using technology to enhance patient care and the risk of reinforcing stereotypes and biases. As AI technologies become more pervasive, there is a pressing need to ensure that they are developed with a nuanced understanding of the populations they serve. Fostering an environment of continuous learning, where healthcare providers remain responsive to the evolving nature of gender identity, is vital to achieving equity in health.

The coming years will likely see a deeper integration of AI into healthcare, making it imperative that discussions surrounding gender inference remain a focal point. The insights gleaned from the work of Gronsbell et al. will be invaluable in shaping how algorithms are constructed and applied in the future. A collective effort involving healthcare professionals, technologists, ethicists, and the communities affected is essential to navigate the complexities of gender categorization in a way that promotes dignity, accuracy, and equitable health outcomes for all. As we move forward, the lessons learned from this research can help inform the ethical application of AI technologies in healthcare, ensuring that algorithms serve to uplift rather than marginalize.

The study “When algorithms infer gender” serves as a crucial reminder of the responsibility that comes with the advancement of AI in healthcare. As we continue to innovate, we must remain vigilant in our commitment to fairness and inclusivity, striving for a healthcare landscape that genuinely reflects the diversity of human experience.

Subject of Research: Gender inference in electronic health records and its implications in healthcare.

Article Title: When algorithms infer gender: revisiting computational phenotyping with electronic health records data.

Article References:

Gronsbell, J., Thurston, H., Dong, L. et al. When algorithms infer gender: revisiting computational phenotyping with electronic health records data. Biol Sex Differ (2025). https://doi.org/10.1186/s13293-025-00783-8

Image Credits: AI Generated

DOI: 10.1186/s13293-025-00783-8

Keywords: Gender inference, electronic health records, AI in healthcare, computational phenotyping, healthcare equity.