Artificial intelligence can appear to have mastered language and complex problem-solving, yet it still struggles to reason through certain abstract puzzles. Researchers at the University of Washington have turned this weakness into a teaching tool: AI Puzzlers, an educational game designed to help children think critically about what AI can and cannot do. Players solve puzzles from the Abstraction and Reasoning Corpus (ARC), see where current generative AI falls short, and develop a healthy skepticism toward machine-generated answers.

Generative AI chatbots often respond with an air of certainty, even when their outputs contain inaccuracies or outright errors. This confident misinformation is a growing concern because it can be more persuasive than human explanations. Adults, even professionals with domain expertise such as lawyers, frequently fall prey to AI hallucinations. For children the challenge is greater still: their contextual knowledge is limited, which makes it harder to tell AI’s falsehoods from facts. AI Puzzlers confronts this issue head-on by exposing kids to a domain where AI consistently falters: reasoning about abstract visual patterns.

The game is built around ARC puzzles, a set of challenges created in 2019 specifically to test abstract reasoning. Each puzzle asks players to study a series of colored block patterns, deduce the underlying rule, and produce the correct output for a new pattern. Unlike many AI benchmarks focused on language or standard vision tasks, ARC puzzles demand high-level abstraction, the ability to generalize learned concepts to new situations. While recent AI models have made strides on such puzzles, they still lag behind even young humans, who can often solve them efficiently with far less data or training.
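
As a rough illustration of the puzzle structure described above, the Python sketch below encodes a toy ARC-style task as example input/output grids and checks whether a candidate rule explains every example before applying it to a new grid. The grids, the `mirror_left_right` rule, and the helper names are invented for this example; they are not taken from the ARC dataset or from AI Puzzlers.

```python
# Toy ARC-style task. Grids are lists of rows; each cell is a color index.
# Everything here is an illustrative assumption, not real ARC data.
Grid = list[list[int]]

TRAIN_PAIRS: list[tuple[Grid, Grid]] = [
    ([[1, 0, 0],
      [2, 0, 0]],
     [[0, 0, 1],
      [0, 0, 2]]),
    ([[0, 3],
      [4, 0]],
     [[3, 0],
      [0, 4]]),
]


def mirror_left_right(grid: Grid) -> Grid:
    """Candidate rule: flip every row horizontally."""
    return [list(reversed(row)) for row in grid]


def rule_fits(rule, pairs) -> bool:
    """A rule is plausible only if it reproduces every example output."""
    return all(rule(inp) == out for inp, out in pairs)


test_input: Grid = [[5, 0, 0, 0],
                    [0, 6, 0, 0]]

if rule_fits(mirror_left_right, TRAIN_PAIRS):
    print(mirror_left_right(test_input))  # [[0, 0, 0, 5], [0, 0, 6, 0]]
```

The point of the sketch is that a solver has to infer the rule from only a handful of examples, which is the kind of generalization the puzzles are designed to probe.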

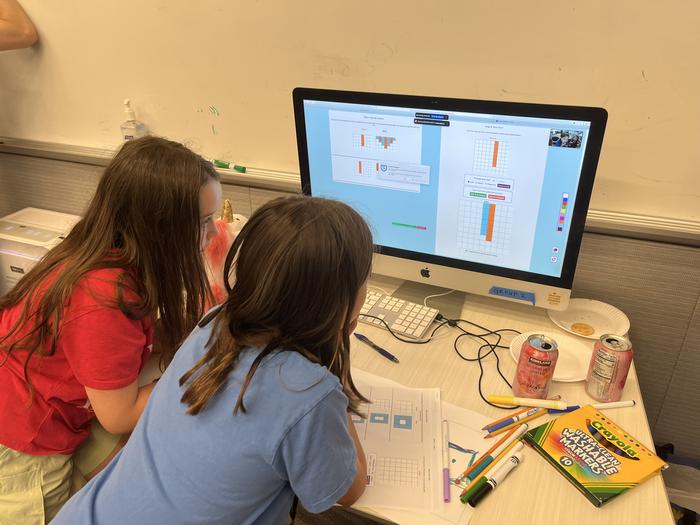

AI Puzzlers incorporates a collection of twelve such ARC puzzles, offering users not only the chance to solve them themselves but also to challenge several AI models to do the same. The game’s interface allows players to select different AI chatbots from a drop-down menu, invoke their solutions, and crucially, request textual explanations of how the AI arrived at its answers. Consistently, the AI explanations fall short, revealing logical gaps or misunderstandings despite sometimes achieving the correct solution. This discrepancy is pedagogically valuable, helping children detect where AI reasoning breaks down.

A key innovation is the game’s “Assist Mode,” inspired directly by co-design sessions with participating children. In this interactive mode, kids provide iterative hints or constraints to guide the AI towards a valid solution. Initially, children’s hints were often imprecise or metaphorical — such as likening a pattern to a “doughnut” — which the AI struggled to interpret. Over sessions, children refined their inputs to become more specific and systematic, guiding the AI in a stepwise manner. This dynamic interaction transformed the AI from an opaque oracle into a partner requiring mentorship, cultivating the understanding that AI systems need human guidance rather than offering infallible answers.
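
The hint-by-hint interaction described above can be pictured as a simple loop. The sketch below is an assumption-laden illustration, not the game’s implementation: `toy_solver` is a hypothetical stand-in for an AI model that only succeeds once a hint is specific enough, and `assist` is an invented helper that accumulates hints until the output matches the expected grid.

```python
from typing import Callable

Grid = list[list[int]]
Solver = Callable[[Grid, list[str]], Grid]


def toy_solver(grid: Grid, hints: list[str]) -> Grid:
    """Hypothetical stand-in for an AI model: it mirrors the grid only
    after a hint says 'flip each row'; otherwise it returns a copy."""
    if any("flip each row" in hint.lower() for hint in hints):
        return [row[::-1] for row in grid]
    return [row[:] for row in grid]


def assist(solver: Solver, grid: Grid, expected: Grid,
           hints: list[str]) -> tuple[bool, list[str]]:
    """Feed hints one at a time, keeping earlier ones, until the
    solver's output matches the expected grid."""
    given: list[str] = []
    for hint in hints:
        given.append(hint)
        if solver(grid, given) == expected:
            return True, given
    return False, given


puzzle = [[1, 0, 0], [2, 0, 0]]
answer = [[0, 0, 1], [0, 0, 2]]
hints = ["It looks like a doughnut", "Flip each row left to right"]

solved, used = assist(toy_solver, puzzle, answer, hints)
print(solved, len(used))  # True 2
```

In this toy run the metaphorical first hint does not help, while the specific second hint does, mirroring the progression the children went through.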

AI Puzzlers was tested with over one hundred children in grades 3 to 8 during the UW College of Engineering’s Discovery Days, and in focused sessions with KidsTeam UW, a longstanding group dedicated to child-led design collaboration. Participants, including children six to eleven years of age, enjoyed the intuitive, language-agnostic nature of the puzzles and quickly learned to distinguish human from machine cognition. Children remarked on the contrast between AI’s reliance on statistical pattern matching and their own creative problem-solving.

Lead author Aayushi Dangol, a doctoral student specializing in human-centered design and engineering, underscored how ARC puzzles naturally attract children regardless of background or reading ability because they rely entirely on visual pattern recognition. The satisfaction of mastering a puzzle followed by witnessing AI’s failure breeds a form of digital humility and inquiry. This hands-on experience pushes back against the prevalent narrative that machines are universally “smarter” or “all-knowing,” allowing learners to internalize critical reasoning skills in a playful environment.

The research findings, unveiled at the Interaction Design and Children 2025 conference in Reykjavik, Iceland, highlight a promising new frontier in AI literacy. While AI has infiltrated many facets of daily life, from generating text and images to aiding medical diagnoses, its reasoning pipelines remain brittle and error-prone. By directly confronting these limitations through gamified learning, educators can empower youth to interrogate AI outputs rather than passively accepting them. This reflective stance is crucial as generative AI technologies continue to proliferate across educational, social, and commercial domains.

Moreover, the iterative co-design process with children led to unexpected yet effective system features, such as the Assist Mode, showcasing the value of involving end-users—especially young learners—in technology development. Co-senior author Jason Yip emphasized that children’s direct involvement in shaping AI interaction paradigms enhances both usability and educational outcomes. The research team discovered that young users possess a remarkable capacity not only to recognize flaws in AI reasoning but to engage constructively to rectify these flaws, effectively turning AI from a black box into a transparent tool.

The research also reveals a broader insight: children can often be more skeptical of AI than adults, precisely because they have fewer preconceived notions about AI’s infallibility. This counters the common assumption that youth are gullible users of technology. Instead, when provided with appropriate frameworks and experiences, children become sophisticated evaluators of AI-generated content. The researchers’ work thus advocates for integrating similar AI literacy experiences throughout early education curricula to prepare the next generation for an AI-augmented world.

Financial support for this research was provided by the U.S. National Science Foundation, the Institute of Education Sciences, and the Jacobs Foundation’s CERES Network. The project exemplifies an interdisciplinary collaboration bridging computer science, human-centered design, and education, positioning itself at the forefront of responsible AI development. By creating environments where children can tangibly critique and contribute to AI reasoning, the researchers not only advance academic understanding but also contribute to shaping ethical AI literacy.

As generative AI continues to captivate global attention with its seemingly boundless potential, innovations like AI Puzzlers are a reminder that meaningful human-machine collaboration is still a work in progress. Giving children the tools and opportunities to critically evaluate AI outputs does more than protect them from misinformation; it equips them to become thoughtful co-creators of AI’s future.

Subject of Research: Using ARC puzzles and AI interaction to foster children’s critical reasoning about generative AI errors.

Article Title: “AI just keeps guessing”: Using ARC Puzzles to Help Children Identify Reasoning Errors in Generative AI

News Publication Date: 25-Jun-2025

Web References:

– https://dx.doi.org/10.1145/3713043.3728836

– https://www.engr.washington.edu/about/k12/discovery-days

– https://www.kidsteam.ischool.uw.edu/

Image Credits: University of Washington

Keywords: Generative AI, Education technology