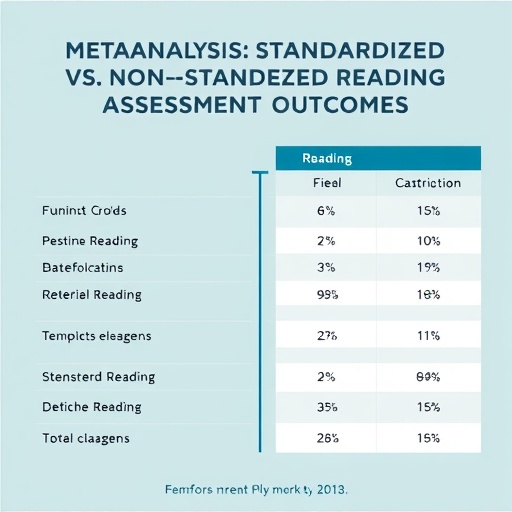

Reading comprehension assessment has come under significant scrutiny over the past few decades, with researchers increasingly focusing on how effective different assessment methods are. A recent meta-analysis by Hansford, Garforth, McGlynn, and colleagues examines this question by comparing results from standardized and non-standardized assessments. Their findings shed light on the implications of each assessment type, not only for educators but also for policymakers and other stakeholders in education. The study is timely, given how quickly education is changing in the 21st century.

Standardized assessments have long been lauded for their objectivity and reliability. These tests, designed to evaluate student performance within a uniform framework, purportedly reduce variability and bias in evaluation. Yet, as Hansford and colleagues report, this perceived strength may also be a fundamental weakness. While standardized testing measures specific skill sets, it often fails to capture the nuances of a student’s comprehension beyond rote memorization and recall. As a result, many educators express concern that these assessments may not fully reflect a student’s true reading abilities or potential for critical thinking.

Conversely, non-standardized assessments represent a more flexible approach. They can include methods such as project-based evaluations, presentations, or teacher-formulated tests. The researchers highlighted that non-standardized assessments allow for more individualized evaluation that takes unique contexts and student needs into account. This kind of assessment fosters a deeper understanding of a pupil’s capabilities, promoting a richer dialogue between educators and students about their learning processes. However, the subjective nature of these evaluations brings its own challenges, particularly concerning consistency in grading across different classrooms.

One of the notable aspects of the Hansford et al. meta-analysis is the depth of data they compiled. By pooling results from multiple studies, they were able to offer a broader perspective on reading comprehension assessments, identifying trends and variances among different populations and educational settings. For instance, the results demonstrated that non-standardized assessments often yield higher engagement levels among students, correlating with improved comprehension outcomes. This trend signals a potential shift in educational methodologies that prioritize student engagement and individualized learning experiences.
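To give a sense of what "pooling results from multiple studies" can mean in practice, here is a minimal sketch of one common approach, fixed-effect inverse-variance weighting. This summary does not state which pooling model Hansford et al. used, so the method, the function name `pool_fixed_effect`, and the numbers below are all illustrative assumptions, not the authors' procedure or data.

```python
import math


def pool_fixed_effect(effects, variances):
    """Pool per-study effect sizes with inverse-variance weights.

    Hypothetical helper for illustration; assumes a fixed-effect model,
    which may differ from the model used in the actual meta-analysis.
    """
    if not effects or len(effects) != len(variances):
        raise ValueError("effects and variances must be non-empty and equal in length")

    # More precise studies (smaller variance) receive larger weights.
    weights = [1.0 / v for v in variances]
    total_weight = sum(weights)

    pooled = sum(w * e for w, e in zip(weights, effects)) / total_weight
    standard_error = math.sqrt(1.0 / total_weight)

    # Approximate 95% confidence interval under a normal assumption.
    ci = (pooled - 1.96 * standard_error, pooled + 1.96 * standard_error)
    return pooled, standard_error, ci


# Made-up effect sizes and variances, NOT taken from the study.
effects = [0.20, 0.50, 0.35]
variances = [0.04, 0.01, 0.02]

pooled, se, (low, high) = pool_fixed_effect(effects, variances)
print(f"pooled effect = {pooled:.3f}, SE = {se:.3f}, 95% CI = [{low:.3f}, {high:.3f}]")
```

A pooled estimate of this kind is what lets a meta-analysis compare assessment types across populations and settings; a random-effects model, which allows the true effect to vary between studies, is a frequent alternative when studies differ as much as classrooms do.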

The analysis also explored how socio-economic factors bear on reading comprehension assessment. In particular, the data showed that standardized testing disproportionately affected students from lower socio-economic backgrounds. Such students may face additional barriers that hinder their performance on standardized assessments, leading to misinterpretations of their comprehension capabilities. By contrast, non-standardized assessments can adapt to individual student contexts, yielding fairer evaluations that reflect true comprehension levels rather than socio-economic disparities.

Furthermore, the researchers drew attention to the cognitive processes involved in reading comprehension, suggesting that standardized assessments often overlook these intricate dynamics. Reading comprehension is not merely about decoding text; it involves various cognitive skills, including inference, prediction, and summarization. The one-size-fits-all nature of standardized assessments can miss essential elements of these cognitive processes, limiting educators’ insight into a student’s abilities. By emphasizing the need for diverse assessment methods, Hansford et al. make a pointed argument that a holistic view of comprehension is crucial for student growth.

In terms of educational policy, the implications of this meta-analysis are significant. The current prevalence of standardized testing in many education systems can perpetuate practices that do not serve all students effectively. Policymakers may need to reevaluate their reliance on such assessments. The evidence presented by Hansford and collaborators makes a compelling case for integrating non-standardized assessment approaches into educational frameworks, thereby encouraging a broader range of ways to measure comprehension.

Additionally, teachers play a central role in the application of these findings. The meta-analysis encourages educators to critically assess their methods of evaluation and to consider incorporating a mix of standardized and non-standardized assessments in their practice to better capture the full scope of a student’s reading abilities. Teacher training programs could also benefit from integrating these findings, emphasizing the importance of diverse assessment strategies that can meet a wide range of learner needs.

Notably, the study also highlighted the growing influence of technology in education. With the rise of digital assessments and educational tools, there is an opportunity to develop more nuanced assessment methods that give a fuller picture of student understanding. The integration of technology in non-standardized assessments, for example, can provide educators with real-time data on student progress, allowing for immediate feedback and adjustments to instruction.

The growing discourse around assessment methodologies signifies a transformative period in educational practices. As institutions consider the implications of the findings presented by Hansford et al., we witness an ongoing dialogue about the most effective means of fostering reading comprehension. In this context, it is imperative that educators remain at the forefront, adapting and evolving their approaches to ensure they resonate with the diverse needs of students. The path forward emphasizes a balanced assessment system that encompasses both standardized and non-standardized tools.

Moreover, the study serves as a catalyst for further research in the field. The insights gained from the work of Hansford and colleagues can inspire subsequent investigations into effective methodologies for assessing not just reading comprehension but other academic domains as well. A meta-analysis this comprehensive could help drive an educational renaissance focused on personalized education, an approach that acknowledges and values each student’s unique journey.

In conclusion, the meta-analysis by Hansford, Garforth, McGlynn, and colleagues represents a pivotal contribution to the understanding of assessment methods in education. Their findings propose a reevaluation of current practices and encourage the exploration of varied assessment strategies that more accurately reflect student capabilities. As education continues to evolve, embracing diverse methodologies will be vital for fostering a deeper understanding of complex reading comprehension skills while addressing the broader challenges faced by students in today’s academic landscape.

Subject of Research: Reading comprehension assessment methodologies

Article Title: Reading comprehension: a meta-analysis comparing standardized and non-standardized assessment results

Article References:

Hansford, N., Garforth, K., McGlynn, S. et al. Reading comprehension: a meta-analysis comparing standardized and non-standardized assessment results. Discov Educ (2026). https://doi.org/10.1007/s44217-026-01140-6

Image Credits: AI Generated

DOI: 10.1007/s44217-026-01140-6

Keywords: reading comprehension, standardized assessment, non-standardized assessment, education, meta-analysis