Computer-generated holography (CGH) stands at the forefront of next-generation three-dimensional (3D) display technologies, offering the capacity to produce authentic depth perception by computationally simulating and recording optical fields. Traditional CGH techniques seek to reconstruct a target scene from its intensity or amplitude alone, which makes hologram calculation an ill-posed inverse problem: the phase of the original wavefront is not available, so a given target does not determine a unique hologram. This limitation impedes accurate and efficient hologram generation.

The longstanding dilemma in CGH methods has been the balancing act between reconstruction fidelity and computational efficiency. Conventional approaches struggle to meet the escalating demands of producing high-quality, full-color naked-eye 3D displays required by contemporary information applications. Recent advancements in artificial intelligence (AI), bolstered by the rapid evolution of graphics processing units (GPUs), have sparked a transformative shift through deep learning-based CGH models. These models promise to transcend the traditional speed versus quality trade-offs that have constrained holographic displays.

Supervised deep learning strategies, while promising, hinge on vast datasets of target scenes paired with pre-computed holograms. This dependency introduces an inherent paradox: because the underlying inverse problem is ill-posed, generating flawless ground-truth holograms for training is nearly impossible. The paradox creates a bottleneck for supervised CGH networks, hindering their progress toward holograms of superior fidelity.

To address this challenge, unsupervised learning models informed by physical wave optics have emerged. These physics-driven approaches leverage the laws that govern optical holography, allowing deep neural networks (DNNs) to generate high-quality holograms without relying on labeled data. Nevertheless, current unsupervised models have limitations: many either generate holograms for a single wavelength, confining them to monochromatic 2D displays, or rely on multiple separately trained models to generate RGB holograms, which inflates computational cost and complicates real-time implementation.

A research team led by Professors Wei Xiong and Hui Gao at the Wuhan National Laboratory for Optoelectronics, Huazhong University of Science and Technology, has proposed a solution to these limitations. Their lightweight unsupervised model, IncepHoloRGB, generates full high definition (FHD, 1920×1080) holograms for the red, green, and blue channels simultaneously within a single neural network that supports both 2D and 3D display modes.

A pivotal element of IncepHoloRGB is its depth-traced superimposition method. This technique ensures consistent spatial relationships among various depth layers by calculating subsequent layer images from the amplitude and phase of the optical field propagated from the previous layer. This clever propagation inherently encodes the near-far depth disparities, enabling the network to construct vivid, multi-depth 3D scenes naturally and without supervised hologram labels.

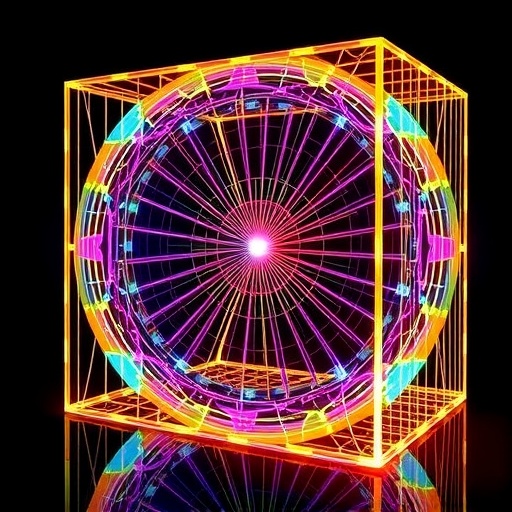
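
One way to picture the depth-traced idea is as chained propagation: the complex field (amplitude and phase) arriving at one depth layer becomes the starting point for the next, rather than every layer being computed independently from the hologram plane. The Python sketch below is an illustration under stated assumptions, not the authors' implementation. It uses a standard angular spectrum propagator, and the names `angular_spectrum` and `trace_layers`, the 8 µm pixel pitch, the 520 nm wavelength, and the layer distances are all hypothetical choices made for the example.

```python
import numpy as np

def angular_spectrum(field, wavelength, distance, pitch):
    """Propagate a complex field by `distance` metres (angular spectrum method)."""
    ny, nx = field.shape
    fx = np.fft.fftfreq(nx, d=pitch)  # spatial frequencies, cycles per metre
    fy = np.fft.fftfreq(ny, d=pitch)
    FX, FY = np.meshgrid(fx, fy)
    arg = 1.0 / wavelength**2 - FX**2 - FY**2
    kz = 2.0 * np.pi * np.sqrt(np.maximum(arg, 0.0))
    # Evanescent components (arg <= 0) are dropped.
    transfer = np.where(arg > 0, np.exp(1j * kz * distance), 0.0)
    return np.fft.ifft2(np.fft.fft2(field) * transfer)

def trace_layers(hologram_phase, distances, wavelength=520e-9, pitch=8e-6):
    """Return (amplitude, phase) at each depth, propagating layer to layer.

    `distances` are measured from the hologram plane and must be increasing.
    All default values are assumptions for illustration only.
    """
    field = np.exp(1j * hologram_phase)  # phase-only hologram, unit amplitude
    position = 0.0
    layers = []
    for z in distances:
        # Start from the field at the previous layer, not from the hologram.
        field = angular_spectrum(field, wavelength, z - position, pitch)
        position = z
        layers.append((np.abs(field), np.angle(field)))
    return layers

# Hypothetical example: a random phase hologram traced through three depths.
rng = np.random.default_rng(0)
phase = rng.uniform(0.0, 2.0 * np.pi, size=(64, 64))
layers = trace_layers(phase, distances=[0.10, 0.11, 0.12])
```

In free space each step only multiplies the spectrum by a phase factor, so tracing to the last layer gives the same amplitude as one direct propagation. The traced form matters once each intermediate field is compared or combined with the target content of its layer, a step this sketch leaves out.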

Central to the network’s efficiency and high fidelity is the introduction of the Inception sampling block. Drawing conceptual inspiration from architectures designed for multi-scale feature extraction, this block employs multiple convolutional paths using small kernels—1×1, 2×2, or 3×3—to process features at different scales simultaneously. The extensive use of 1×1 convolutions within these paths significantly reduces computational overhead, circumventing the inefficiency typically caused by large convolutional filters while substantially enhancing the network’s capability to learn detailed holographic representations.
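
The saving from 1×1 convolutions is easy to quantify. Mapping 64 input channels to 32 output channels with a 3×3 kernel takes 64 × 32 × 9 = 18,432 weights; squeezing to 16 channels with a 1×1 convolution first takes 64 × 16 + 16 × 32 × 9 = 5,632. The NumPy sketch below builds a small three-path block along these lines. It is a minimal illustration that assumes a layout borrowed from the classic Inception module; the channel counts, the `conv2d_same` and `inception_block` helpers, and the ReLU activations are hypothetical and are not taken from the published network.

```python
import numpy as np

def conv2d_same(x, w):
    """Naive 'same'-size convolution (cross-correlation, as in most DL libraries).

    x: (C_in, H, W) feature map; w: (C_out, C_in, k, k) weights.
    """
    c_out, _, k, _ = w.shape
    lo, hi = (k - 1) // 2, k // 2  # asymmetric padding handles even kernels
    xp = np.pad(x, ((0, 0), (lo, hi), (lo, hi)))
    h, wd = x.shape[1:]
    out = np.zeros((c_out, h, wd))
    for i in range(k):
        for j in range(k):
            # Mix channels for the (i, j) kernel tap, then accumulate.
            out += np.einsum("oc,chw->ohw", w[:, :, i, j], xp[:, i:i + h, j:j + wd])
    return out

def inception_block(x, params):
    """Three parallel paths (1x1, 1x1->2x2, 1x1->3x3), concatenated on channels."""
    relu = lambda t: np.maximum(t, 0.0)
    p1 = relu(conv2d_same(x, params["p1_1x1"]))
    p2 = relu(conv2d_same(relu(conv2d_same(x, params["p2_reduce"])), params["p2_2x2"]))
    p3 = relu(conv2d_same(relu(conv2d_same(x, params["p3_reduce"])), params["p3_3x3"]))
    return np.concatenate([p1, p2, p3], axis=0)

# Hypothetical channel counts, chosen only to make the arithmetic concrete.
rng = np.random.default_rng(0)
c_in, c_mid, c_out = 64, 16, 32
params = {
    "p1_1x1": rng.normal(size=(c_out, c_in, 1, 1)),
    "p2_reduce": rng.normal(size=(c_mid, c_in, 1, 1)),
    "p2_2x2": rng.normal(size=(c_out, c_mid, 2, 2)),
    "p3_reduce": rng.normal(size=(c_mid, c_in, 1, 1)),
    "p3_3x3": rng.normal(size=(c_out, c_mid, 3, 3)),
}
x = rng.normal(size=(c_in, 16, 16))
y = inception_block(x, params)

direct_3x3 = c_in * c_out * 9  # weights for a 3x3 conv applied directly
reduced_3x3 = params["p3_reduce"].size + params["p3_3x3"].size
```

Here `y` has 96 channels (three paths of 32) at the input's spatial size, and the reduced 3×3 path uses well under a third of the weights of the direct version.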

Complementing this architecture, IncepHoloRGB incorporates a differentiable multi-wavelength propagation module that computes diffraction field propagation for the red, green, and blue wavelength channels simultaneously and with high precision. The network is trained with a hybrid loss function tailored to color perception, further improving its ability to reconstruct lifelike full-color holograms. According to the team, the approach carries over from simulation to optical experiments and performs well in both 2D and 3D holographic displays.
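
A multi-wavelength propagation step can be sketched with the angular spectrum method, applying one transfer function per color channel. The example below is an illustration under several assumptions rather than the module described in the paper: the laser wavelengths (638, 520, and 450 nm), the 8 µm pixel pitch, the 0.1 m distance, and the names `transfer_functions` and `propagate_rgb` are all hypothetical. Plain NumPy is used, so the code is not differentiable as written; the same operations expressed in an automatic differentiation framework would let gradients flow back to the hologram phase.

```python
import numpy as np

# Assumed red, green, and blue wavelengths in metres (not from the paper).
WAVELENGTHS = np.array([638e-9, 520e-9, 450e-9])

def transfer_functions(shape, distance, pitch, wavelengths=WAVELENGTHS):
    """Angular spectrum transfer functions, one per wavelength: (3, ny, nx)."""
    ny, nx = shape
    fx = np.fft.fftfreq(nx, d=pitch)
    fy = np.fft.fftfreq(ny, d=pitch)
    FX, FY = np.meshgrid(fx, fy)
    # Broadcast the wavelength axis against the shared frequency grid.
    arg = 1.0 / wavelengths[:, None, None] ** 2 - (FX**2 + FY**2)[None]
    kz = 2.0 * np.pi * np.sqrt(np.maximum(arg, 0.0))
    return np.where(arg > 0, np.exp(1j * kz * distance), 0.0)

def propagate_rgb(phase_rgb, distance, pitch=8e-6):
    """Propagate a (3, ny, nx) phase-only hologram; returns the complex fields."""
    field = np.exp(1j * phase_rgb)
    H = transfer_functions(phase_rgb.shape[1:], distance, pitch)
    spectrum = np.fft.fft2(field, axes=(-2, -1))  # one batched FFT for all colors
    return np.fft.ifft2(spectrum * H, axes=(-2, -1))

# Hypothetical example: random phases for the three channels.
rng = np.random.default_rng(0)
phase_rgb = rng.uniform(0.0, 2.0 * np.pi, size=(3, 64, 64))
recon = propagate_rgb(phase_rgb, distance=0.1)
intensity = np.abs(recon) ** 2  # what a sensor or the eye would register
```

Stacking the three transfer functions lets a single batched FFT call handle all colors at once. In an unsupervised setup, a loss comparing `intensity` (or its square root) with the target image would then take the place of labeled holograms.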

Empirical results support the capabilities of IncepHoloRGB. The model achieves structural similarity index (SSIM) scores of 0.88 and peak signal-to-noise ratio (PSNR) values reaching 29.00 dB while running at 191 frames per second (FPS) during hologram reconstruction. Such efficiency and fidelity underscore the network's potential for real-time dynamic 3D display systems, including virtual and augmented reality (VR/AR), where rapid, high-quality holographic visualization is critical.
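
For readers unfamiliar with the metric, PSNR is computed from the mean squared error (MSE) between target and reconstruction as 10·log10(peak² / MSE), in decibels. The short sketch below assumes images scaled to [0, 1]; the function name `psnr` and the test images are illustrative only. It also converts the two headline figures into more tangible terms.

```python
import numpy as np

def psnr(target, recon, peak=1.0):
    """Peak signal-to-noise ratio in dB for images with maximum value `peak`."""
    target = np.asarray(target, dtype=float)
    recon = np.asarray(recon, dtype=float)
    mse = np.mean((target - recon) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(peak**2 / mse))

# Toy check: a uniform error of 0.1 gives MSE = 0.01, i.e. 20 dB.
target = np.zeros((8, 8))
recon = np.full((8, 8), 0.1)
value = psnr(target, recon)

# Back-of-envelope conversions of the reported figures.
mse_at_29_db = 10 ** (-29.0 / 10)  # MSE implied by 29 dB on a [0, 1] scale
frame_time_ms = 1000.0 / 191       # time budget per frame at 191 FPS
```

On a [0, 1] scale, 29 dB corresponds to an MSE of roughly 1.26 × 10⁻³, and 191 FPS leaves about 5.2 ms per frame.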

This research not only advances the field of computer-generated holography but also exemplifies how marrying deep learning with physical principles can overcome entrenched challenges in optical imaging. By eliminating the reliance on labeled holographic data and facilitating full-color, multi-depth 3D reconstructions within a lightweight framework, IncepHoloRGB sets a new benchmark that could unlock unprecedented capabilities for immersive visualization technologies.

The interdisciplinary Micro & Nano Optoelectronics Laboratory, under the guidance of Professors Xiong and Gao, continues to push boundaries across multiple domains of laser manufacturing and optical field modulation. Its body of work encompasses laser 3D/4D micro-nano printing, metasurface engineering for optical modulation, and high-precision heterogeneous laser processing, with contributions to both metamaterial fabrication and holographic display technology.

Over recent years, this vibrant research group has contributed upwards of 120 articles in leading scientific journals such as Science Advances, Nature Communications, and Advanced Materials. They have also secured over 50 patent grants and applications, reflecting the practical impact and commercial potential of their innovations. Supported by numerous prestigious projects from national and provincial science foundations, as well as industry collaborations, their work continues to influence the future trajectory of optical science and engineering.

IncepHoloRGB, by extending computational holography into real-time, full-color, high-resolution operation, holds promise not only for enhancing conventional display technologies but also for integrating holographic visualization into emerging digital ecosystems. The convergence of physics-informed neural network modeling with multi-wavelength optical propagation offers a compelling glimpse into the future of 3D immersive technologies, setting the stage for richer, more interactive digital experiences.

Article Title: IncepHoloRGB: multi-wavelength network model for full-color 3D computer-generated holography

News Publication Date: 24-Oct-2025

Image Credits: Xuan Yu, Wei Xiong, Hui Gao

Keywords: Computer-Generated Holography, 3D Display Technology, Deep Learning, Unsupervised Neural Networks, Full-Color Holography, Multi-Wavelength Propagation, Inception Sampling Block, Virtual Reality, Augmented Reality, Optical Field Modulation, Wave Optics, Computational Imaging, Real-Time Hologram Reconstruction