In a study published in the journal Discover Artificial Intelligence, researchers Sharma, Singh, and Choudhury investigate how advanced deep learning architectures can improve the classification of mammograms. Their study compares Convolutional Neural Networks (CNNs) with Vision Transformers (ViTs), two dominant deep learning paradigms in computer vision. It examines both classification performance and computational efficiency, and considers what the differences mean for clinical settings.

Mammography has long served as the frontline screening technique for breast cancer, and the early diagnosis it enables is pivotal in reducing mortality. However, interpreting mammograms remains challenging because abnormalities can be subtle enough to evade even experienced radiologists. Deep learning models therefore offer a promising way to support clinicians in identifying potential anomalies in radiological images.

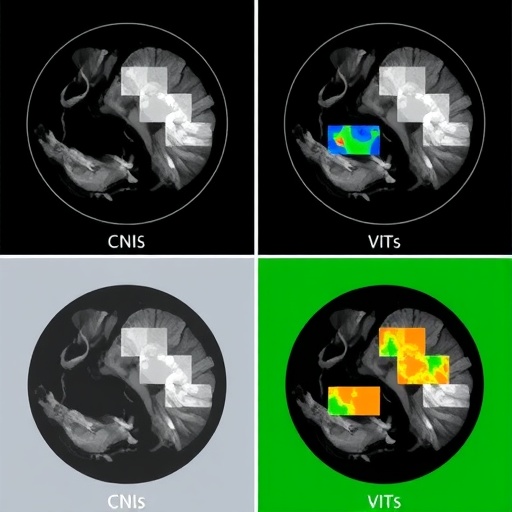

The authors first describe Convolutional Neural Networks, a staple of image recognition. CNNs detect patterns in image data automatically, using stacked layers of convolutional filters that progressively extract more abstract features: early layers respond to edges and simple textures, while deeper layers combine these into larger structures. This hierarchy lets CNNs capture the fine detail in mammograms needed to recognize the shapes and textures indicative of malignant lesions. Because they learn features directly from pixel data, and because their small, weight-shared filters build in the assumption that nearby pixels are related, CNNs are a natural fit for image-focused applications.
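To make the idea of a convolutional filter concrete, the sketch below slides a single hand-picked 3x3 vertical-edge kernel over a toy 5x5 image in plain Python. It is an illustration under simplifying assumptions, not the architecture used in the study: the image, the kernel, and the helper names `conv2d_valid` and `relu` are hypothetical, and a real CNN learns many such kernels across stacked layers rather than using fixed ones.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide `kernel` over `image` (stride 1, no padding) and return the feature map.

    Like CNN layers in common deep learning libraries, this computes
    cross-correlation: the kernel is not flipped.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the kh x kw window whose top-left corner is (i, j).
            acc = 0.0
            for u in range(kh):
                for v in range(kw):
                    acc += image[i + u][j + v] * kernel[u][v]
            row.append(acc)
        out.append(row)
    return out


def relu(fmap: Matrix) -> Matrix:
    """Element-wise ReLU, the usual non-linearity between conv layers."""
    return [[max(0.0, x) for x in row] for row in fmap]


# Toy 5x5 "image": dark left columns, bright right columns (a vertical edge).
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hand-picked vertical-edge detector; a trained CNN would learn filters like this.
edge_kernel = [[-1, 0, 1],
               [-1, 0, 1],
               [-1, 0, 1]]

feature_map = relu(conv2d_valid(image, edge_kernel))
for row in feature_map:
    print(row)  # each row prints as [3.0, 3.0, 0.0]
```

The feature map is non-zero only where the 3x3 window straddles the dark-to-bright boundary and is zero over the uniform bright region. Stacking layers of such responses is what lets a CNN build up from edges to the shapes and textures described above.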

On the other side of the spectrum lie Vision Transformers, an architecture that has recently gained traction in computer vision. ViTs replace convolutions with the transformer architecture, originally designed for natural language processing. By splitting an image into fixed-size patches and applying self-attention across them, ViTs can model long-range dependencies within visual data. This approach has proven competitive with CNNs, although ViTs typically need more training data, or large-scale pretraining, to match or exceed them, because they lack the built-in locality assumptions of convolutions.
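The two steps that distinguish a ViT, splitting the image into patches and letting every patch attend to every other patch, can be sketched in plain Python. This is a simplified illustration, not the study's model: the helpers `patchify` and `self_attention` are hypothetical, the learned query/key/value projections are replaced by the identity, and the linear patch embedding, position embeddings, and class token of a real ViT are omitted.

```python
import math
from typing import List

Matrix = List[List[float]]


def patchify(image: Matrix, patch: int) -> Matrix:
    """Split an H x W image into non-overlapping patch x patch tiles and
    flatten each tile (row-major) into one token vector."""
    h, w = len(image), len(image[0])
    assert h % patch == 0 and w % patch == 0, "image must tile evenly"
    tokens = []
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            tokens.append([image[top + u][left + v]
                           for u in range(patch) for v in range(patch)])
    return tokens


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens: Matrix) -> Matrix:
    """Single-head scaled dot-product attention with identity Q/K/V
    projections (a simplification: real ViTs learn these matrices)."""
    d = len(tokens[0])
    out = []
    for q in tokens:
        # Each token is scored against every token, however far apart the
        # patches are in the image: this is where long-range context comes from.
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in tokens]
        weights = softmax(scores)
        out.append([sum(w * v[i] for w, v in zip(weights, tokens)) for i in range(d)])
    return out


image = [[float(r * 4 + c) for c in range(4)] for r in range(4)]
tokens = patchify(image, 2)
print(len(tokens), len(tokens[0]))  # 4 tokens, each with 2 * 2 = 4 values
mixed = self_attention(tokens)
```

Each output token is a softmax-weighted average of all input tokens, so information from a patch in one corner of the image can reach a patch in the opposite corner within a single layer; a convolution, by contrast, only sees its local window.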

In the study, Sharma et al. compare the performance of CNNs and ViTs on a widely used dataset of mammogram images. Each architecture goes through training and validation and is assessed on its accuracy, sensitivity, and specificity in classifying mammograms as benign or malignant. The findings show that both models perform well, but that nuanced differences emerge, particularly under the varied conditions found in clinical environments.
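The three metrics named above can all be computed from a binary confusion matrix. The sketch below assumes labels where 1 = malignant (the positive class) and 0 = benign; the function name `classification_metrics` and the ten example predictions are hypothetical and are not results from the paper.

```python
from typing import Dict, Sequence


def classification_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Accuracy, sensitivity, and specificity for binary labels
    (1 = malignant, the positive class; 0 = benign)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return {
        "accuracy": (tp + tn) / len(y_true),
        # Sensitivity (recall): share of malignant cases the model catches.
        "sensitivity": tp / (tp + fn) if tp + fn else float("nan"),
        # Specificity: share of benign cases the model correctly clears.
        "specificity": tn / (tn + fp) if tn + fp else float("nan"),
    }


# Hypothetical predictions for 10 mammograms (illustrative only).
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 1, 0]
metrics = classification_metrics(y_true, y_pred)
print(metrics)  # accuracy 0.8, sensitivity 0.75, specificity about 0.833
```

Sensitivity and specificity are reported separately because accuracy alone can hide missed cancers: on a dataset dominated by benign cases, a model that rarely predicts "malignant" can still post a high accuracy while having poor sensitivity.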

One significant aspect of the study is its exploration of transfer learning, in which a model pretrained on one task is reused for a new one. The authors detail how both CNNs and ViTs benefit from transfer learning: it lets them draw on knowledge learned from large general-purpose datasets, reducing the number of labeled mammograms needed for training. This matters most where acquiring and annotating medical images is labor-intensive and costly.
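One common form of transfer learning, often called a linear probe, keeps a pretrained backbone frozen and trains only a small new classifier on its output features. The sketch below illustrates that pattern in plain Python under strong assumptions: `frozen_backbone` is a hypothetical hand-written stand-in for a pretrained CNN or ViT (a real one would be loaded from a deep learning framework with weights learned on a large dataset), the "images" are synthetic 9-pixel vectors, and nothing here reflects the paper's actual training setup. Full fine-tuning, which also updates the backbone weights, is the other common option.

```python
import math
import random
from typing import List, Sequence


def frozen_backbone(image: Sequence[float]) -> List[float]:
    # Hypothetical stand-in for a pretrained feature extractor. Its "weights"
    # are fixed and are never updated by the training loop below.
    return [sum(image) / len(image), max(image), max(image) - min(image)]


class LogisticHead:
    """New task-specific classifier trained on top of frozen features."""

    def __init__(self, n_features: int) -> None:
        self.w = [0.0] * n_features
        self.b = 0.0

    def predict_proba(self, feats: Sequence[float]) -> float:
        z = self.b + sum(w * x for w, x in zip(self.w, feats))
        # Numerically stable sigmoid.
        if z >= 0:
            return 1.0 / (1.0 + math.exp(-z))
        ez = math.exp(z)
        return ez / (1.0 + ez)

    def fit(self, X, y, lr: float = 0.5, epochs: int = 200) -> None:
        # Plain SGD on the logistic (cross-entropy) loss; only the head learns.
        for _ in range(epochs):
            for feats, label in zip(X, y):
                err = self.predict_proba(feats) - label  # dLoss/dz
                self.w = [w - lr * err * x for w, x in zip(self.w, feats)]
                self.b -= lr * err


def synthetic_image(malignant: bool) -> List[float]:
    # Hypothetical toy data: faint uniform "tissue", plus one bright spot if malignant.
    pixels = [random.uniform(0.1, 0.3) for _ in range(9)]
    if malignant:
        pixels[random.randrange(9)] = random.uniform(0.8, 1.0)
    return pixels


random.seed(0)
labels = [i % 2 for i in range(40)]
features = [frozen_backbone(synthetic_image(bool(lbl))) for lbl in labels]

head = LogisticHead(n_features=3)
head.fit(features, labels)
predictions = [int(head.predict_proba(f) >= 0.5) for f in features]
accuracy = sum(p == t for p, t in zip(predictions, labels)) / len(labels)
print(f"training accuracy: {accuracy:.2f}")
```

Because only the three head weights and the bias are trained, very little labeled data is needed; the cost is that the head can only use whatever the frozen backbone already represents, which is why full fine-tuning is often preferred when more labeled mammograms are available.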

As healthcare continues to digitize, deep learning is playing a growing role in interpreting medical images. Automated classification systems built on these architectures can speed up diagnosis, letting radiologists concentrate their expertise on the cases that need closer review. Rapid, accurate assessments also shorten the wait for results, which can ease the anxiety that often accompanies it.

The comparative analysis also sheds light on the computational demands of each model. CNNs are generally less resource-intensive and quicker to train, while ViTs may offer greater accuracy at the cost of more computing power and training time; one reason is that the cost of self-attention grows quadratically with the number of image patches. Balancing performance against resource use is therefore a vital consideration for medical institutions, particularly in low-resource settings where every bit of computational efficiency counts.
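A little arithmetic shows one general reason ViTs can be costlier on large images. The sketch below counts ViT patch tokens, the entries in one layer's attention score matrix, and the multiply-accumulates of one convolution layer. The 16-pixel patch size, the 224 and 448 pixel image sides, and the 3x3, 64-channel convolution are illustrative assumptions, not the study's configuration; the counts also ignore the projection and MLP layers, whose cost grows only linearly with the number of tokens.

```python
def vit_tokens(height: int, width: int, patch: int) -> int:
    """Patch tokens a ViT produces for one image (class token ignored)."""
    return (height // patch) * (width // patch)


def attention_scores(tokens: int, heads: int = 1) -> int:
    """Entries in one layer's attention score matrices: tokens^2 per head."""
    return heads * tokens * tokens


def conv_macs(height: int, width: int, k: int, c_in: int, c_out: int) -> int:
    """Multiply-accumulates for one stride-1, same-padded k x k conv layer."""
    return height * width * k * k * c_in * c_out


# Illustrative sizes only; mammograms are often far larger than 448 pixels.
for side in (224, 448):
    n = vit_tokens(side, side, patch=16)
    print(side, n, attention_scores(n), conv_macs(side, side, 3, 64, 64))
```

Doubling the image side from 224 to 448 pixels quadruples the token count (196 to 784) but multiplies the attention-score entries by 16 (38,416 to 614,656), whereas the convolution's cost grows only fourfold. This illustrates a general scaling property and does not reproduce the paper's measurements.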

The authors further discuss the ethical implications of deploying these technologies in clinical practice. As AI models begin to take on greater roles in diagnostic procedures, the onus remains on developers and healthcare providers to ensure that these systems are trained on diverse datasets. Minimizing biases in training data is crucial to avoid disparities in diagnostic accuracy across different demographic groups.
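One practical check for the disparities described above is to report sensitivity separately for each subgroup rather than only in aggregate. The sketch below is a hypothetical illustration: the function name `sensitivity_by_group`, the age-band group tags, and the eight predictions are invented for the example and do not come from the study.

```python
from collections import defaultdict
from typing import Dict, Sequence


def sensitivity_by_group(y_true: Sequence[int], y_pred: Sequence[int],
                         groups: Sequence[str]) -> Dict[str, float]:
    """Sensitivity (true-positive rate) computed separately for each subgroup.

    Only groups that contain at least one malignant case (label 1) appear
    in the result, since sensitivity is undefined without positives.
    """
    positives: Dict[str, int] = defaultdict(int)
    caught: Dict[str, int] = defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        if t == 1:
            positives[g] += 1
            caught[g] += int(p == 1)
    return {g: caught[g] / positives[g] for g in positives}


# Hypothetical labels, predictions, and age-band tags (illustrative only).
y_true = [1, 1, 1, 1, 1, 1, 0, 0]
y_pred = [1, 1, 1, 1, 0, 0, 0, 1]
groups = ["age<50", "age<50", "age<50", "age>=50", "age>=50", "age>=50",
          "age<50", "age>=50"]

rates = sensitivity_by_group(y_true, y_pred, groups)
gap = max(rates.values()) - min(rates.values())
print(rates, f"gap={gap:.2f}")
```

In this toy data the aggregate sensitivity (4 of 6 malignant cases caught, about 0.67) hides a large gap: every malignant case in one group is detected, but only one of three in the other. Reporting the per-group figures makes such a disparity visible before deployment.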

As the medical community grapples with these innovations, the ongoing development of deep learning models represents a paradigm shift in radiological practice. The findings of Sharma, Singh, and Choudhury are likely to spark discussion about the future of AI in diagnostics and how these advances can be harnessed to improve patient outcomes. The prospect of higher early-detection rates, and with them better breast cancer survival, is an exciting one that warrants further exploration.

In conclusion, the research by Sharma et al. shows the potential of deep learning to transform mammography classification. The contrasting performance of CNNs and ViTs underscores the importance of continued investigation into machine learning frameworks that can improve diagnostic accuracy. As researchers push these boundaries further, the convergence of artificial intelligence and healthcare promises to reshape medical diagnostics for decades to come.

Subject of Research: Deep learning architectures for mammography classification

Article Title: Advanced deep learning architectures for enhanced mammography classification: a comparative study of CNNs and ViT

Article References: Sharma, S., Singh, Y. & Choudhury, T. Advanced deep learning architectures for enhanced mammography classification: a comparative study of CNNs and ViT. Discov Artif Intell 5, 187 (2025). https://doi.org/10.1007/s44163-025-00426-2

Image Credits: AI Generated

DOI: 10.1007/s44163-025-00426-2

Keywords: deep learning, mammography classification, CNNs, Vision Transformers, artificial intelligence.