Gesture recognition has become an increasingly important component of human-computer interaction, enabling more intuitive communication between users and machines. Drawing on artificial intelligence and computer vision, researchers continue to refine recognition methods to improve accuracy, responsiveness, and adaptability across contexts. A recent study by Q. Lu proposes a gesture recognition approach that integrates multimodal inter-frame motion analysis with shared attention weights. The technique is intended to improve the system’s ability to recognize gestures and to support a more nuanced understanding of user intentions.

The foundation of Lu’s approach lies in the combination of multimodal data sources for gesture recognition. Conventional methods often rely on a single modality, such as visual data from cameras, to interpret gestures. However, this can lead to limitations, especially in complex environments where lighting conditions, occlusions, and diverse backgrounds can hinder performance. By incorporating multiple modalities, Lu’s technique analyzes a broader spectrum of information, including motion tracking and even auditory cues, providing a richer context for interpretation.

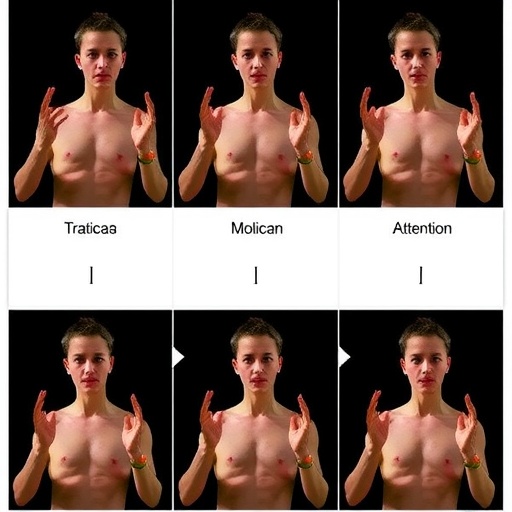

One of the critical aspects of this research is the integration of inter-frame motion analysis. Static frame analysis may suffice for gestures defined by a fixed pose, but recognizing dynamic gestures requires an understanding of how movements evolve over time. Lu’s method tracks motion across consecutive frames, capturing the subtleties and variations that define different gestures. This temporal analysis improves recognition accuracy, especially for gestures that occur in quick succession or differ only slightly.
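To make the idea of inter-frame motion concrete, the sketch below uses plain frame differencing, one of the simplest ways to measure how much a clip changes from one frame to the next. It is an illustration only and not the method from Lu’s paper, whose implementation details are not given here; the function names `inter_frame_motion` and `motion_energy` and the toy clip are assumptions made for this example.

```python
import numpy as np

def inter_frame_motion(frames):
    """Absolute difference between consecutive frames.

    frames: array shaped (T, H, W); the result is shaped (T-1, H, W).
    Hypothetical helper for illustration, not taken from the paper.
    """
    frames = np.asarray(frames, dtype=np.float32)
    if frames.ndim != 3 or frames.shape[0] < 2:
        raise ValueError("expected at least two frames shaped (T, H, W)")
    return np.abs(np.diff(frames, axis=0))

def motion_energy(frames):
    """Mean motion magnitude for each frame transition, shaped (T-1,)."""
    return inter_frame_motion(frames).mean(axis=(1, 2))

# Toy clip: a bright 2x2 block moves one pixel to the right per frame.
clip = np.zeros((4, 8, 8), dtype=np.float32)
for t in range(4):
    clip[t, 3:5, t:t + 2] = 1.0

energy = motion_energy(clip)
print(energy)  # each transition changes 4 of 64 pixels -> 0.0625
```

A static clip yields zero energy at every step, while a moving hand produces a non-zero signal whose shape over time helps separate one dynamic gesture from another.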

Shared attention weights further enhance the model’s processing capabilities. They let the recognition system prioritize elements of the multimodal input, directing its focus toward the information most relevant to the gesture being analyzed. By adjusting these weights dynamically based on context, the system can distinguish between gestures that might otherwise appear similar. This adaptability is crucial to a more robust and user-friendly gesture recognition experience, particularly in applications such as virtual reality, augmented reality, and assistive technologies.
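One generic way to read "shared attention weights" is that a single set of weights over time steps is computed from all modalities together and then reused to pool each of them. The sketch below shows that reading with NumPy. It is an assumption made for illustration, not a reproduction of Lu’s architecture: the function `shared_temporal_attention`, the scoring vectors `w_rgb` and `w_motion` (stand-ins for learned parameters), and the two-modality setup are all hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def shared_temporal_attention(rgb_feats, motion_feats, w_rgb, w_motion):
    """Pool two modalities with one shared set of attention weights.

    rgb_feats, motion_feats: per-frame features shaped (T, D).
    w_rgb, w_motion: scoring vectors shaped (D,); hypothetical stand-ins
    for parameters a real model would learn.
    Returns the fused vector shaped (2 * D,) and the weights shaped (T,).
    """
    # Each time step gets one score that depends on both modalities.
    scores = rgb_feats @ w_rgb + motion_feats @ w_motion
    weights = softmax(scores)
    # The same weights pool both feature streams.
    pooled_rgb = weights @ rgb_feats
    pooled_motion = weights @ motion_feats
    return np.concatenate([pooled_rgb, pooled_motion]), weights

rng = np.random.default_rng(0)
T, D = 6, 4
fused, weights = shared_temporal_attention(
    rng.normal(size=(T, D)), rng.normal(size=(T, D)),
    rng.normal(size=D), rng.normal(size=D),
)
print(fused.shape, weights.sum())
```

Because the weights are shared, a frame judged informative from the motion stream is also emphasized in the appearance stream, which keeps the two modalities focused on the same moments of the gesture.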

The implications of Lu’s gesture recognition framework extend far beyond mere accuracy. With a deeper understanding of user intent, systems can become more proactive and responsive, anticipating actions and facilitating smoother interactions. In environments like smart homes or autonomous vehicles, enhanced gesture recognition can lead to more seamless integration of user commands, making technology more accessible and intuitive for everyday tasks.

Moreover, the incorporation of multimodal approaches positions Lu’s research at the forefront of gesture recognition, allowing for a more human-centric design in technology. By focusing on real-world usability and the natural ways humans communicate through gestures, this approach not only improves functional performance but also aligns technology with the nuances of human behavior, bridging the gap between users and machines.

Another vital aspect of this research is its potential impact on accessibility. By refining gesture recognition systems, Lu’s method can enhance the capabilities of assistive technologies for individuals with disabilities. Gesture-based control mechanisms can empower users with limited mobility to interact with their devices effectively, fostering independence and improving quality of life. The advancements in recognizing gestures that may be subtle or unconventional can provide opportunities for greater inclusivity in technology use.

In today’s world, where remote communication is becoming the norm, gesture recognition technology plays a crucial role in enhancing virtual meetings and interactions. Lu’s innovative approach could significantly improve communication clarity and engagement, helping to bridge the physical gap created by distance. By enabling more natural expressions of emotions and reactions, users can communicate more effectively, reducing the misunderstandings often associated with digital interactions.

As the field of artificial intelligence continues to evolve, Lu’s research contributes to a growing body of knowledge aimed at enhancing human-computer interaction. Future advancements may lead to further refinements in gesture recognition, enabling even more personalized and intelligent responses from systems. As we embrace the future of technology, studies like Lu’s highlight the path toward more sophisticated, emotionally aware, and contextually responsive systems.

Taken together, Q. Lu’s gesture recognition method integrating multimodal inter-frame motion and shared attention weights represents a significant step forward in human-computer interaction. With its improvements in accuracy and responsiveness, this research has implications for several fields, including commercial products, accessibility solutions, and immersive environments. As technology becomes increasingly integrated into our daily lives, the importance of intuitive gesture recognition will only continue to grow.

The potential for commercial application is immense. From gaming to robotics, the market demand for highly accurate gesture recognition systems that can understand complex human movements and intentions will drive future innovations. Companies investing in these technologies will likely gain a competitive edge as they develop products that seamlessly integrate gesture control into user experiences.

As further research builds upon the principles laid out by Lu, we can expect innovations that combine deep learning, natural language processing, and real-time data analysis to create increasingly sophisticated gesture recognition systems, paving the way for a more engaging and interactive relationship between humans and machines.

In summary, Lu’s work not only exemplifies cutting-edge research but also sets the stage for future advancements in gesture recognition. As we witness the ongoing convergence of physical and digital worlds, the ability to recognize and respond to human gestures will play a pivotal role in shaping the technologies of tomorrow.

Subject of Research: Gesture recognition methods

Article Title: Gesture recognition method integrating multimodal inter-frame motion and shared attention weights.

Article References:

Lu, Q. Gesture recognition method integrating multimodal inter-frame motion and shared attention weights. Discov Artif Intell 5, 405 (2025). https://doi.org/10.1007/s44163-025-00653-7

Image Credits: AI Generated

DOI: https://doi.org/10.1007/s44163-025-00653-7

Keywords: Gesture recognition, multimodal analysis, artificial intelligence, user interaction, assistive technology, motion tracking, shared attention weights.