Recent advances in artificial intelligence have produced visual language models (VLMs) that perform impressively on visual reasoning tasks. These models have succeeded on problems resembling college-level examinations, and they interpret and analyze images in ways usually associated with human cognition. Beneath this success, however, lies a troubling finding: some of these VLMs struggle with elementary visual concepts, including orientation, position, continuity, and occlusion. The gap between human and VLM performance on such basic tasks raises critical questions about whether artificial vision can genuinely replicate, or even approximate, human perception.

The crux of the problem is that VLMs can parse intricate visual data and draw the connections needed for accurate object recognition, yet they seem to lack a grasp of the basic visual principles that humans acquire almost instinctively. This limitation reduces their overall effectiveness, particularly in scenarios that demand nuanced visual reasoning. Despite their high-level capabilities, these models behave inconsistently, which suggests they fall short of processing visual information the way humans do.

To close this gap in understanding, researchers have recognized the need for systematic evaluations that compare human abilities directly with those of VLMs. Neuropsychology offers a rich toolkit for this comparison: clinically validated assessments designed to measure specific visual competencies. By using these well-established test batteries, researchers can pinpoint the strengths and weaknesses of leading VLMs under principled experimental conditions.

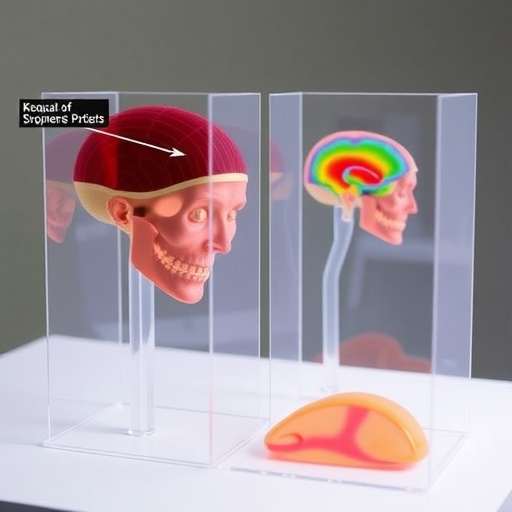

A recent study by Tangtartharakul and Storrs, published in Nature Machine Intelligence, carried out exactly this evaluation, testing three state-of-the-art VLMs on 51 tests drawn from six established neuropsychological test batteries. This approach allowed the researchers to characterize the models' visual capabilities comprehensively and to compare their performance with normative data from healthy adult populations. The results were revealing: the VLMs performed well on straightforward object recognition, but on lower-level visual abilities they showed substantial deficits that, in a human, would be classified as clinically significant impairments.
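Comparing a score against healthy-adult norms is commonly done by converting it to a z-score. The sketch below is a minimal, hypothetical illustration of that idea, not the study's actual procedure. It assumes each test has a normative mean and standard deviation, that higher raw scores mean better performance, and an illustrative impairment cutoff of two standard deviations below the normative mean. The names `Norm`, `z_score`, `classify`, and `IMPAIRMENT_Z_CUTOFF`, as well as the test labels and all numbers, are hypothetical placeholders.

```python
from dataclasses import dataclass

# Hypothetical cutoff: a score at or below two standard deviations under the
# normative mean is flagged as impaired. This is an illustrative assumption,
# not the criterion used in the study.
IMPAIRMENT_Z_CUTOFF = -2.0


@dataclass
class Norm:
    """Normative statistics for one test in a healthy adult sample."""
    mean: float
    sd: float


def z_score(score: float, norm: Norm) -> float:
    """Standardize a raw score against the normative mean and SD.

    Assumes higher raw scores indicate better performance.
    """
    if norm.sd <= 0:
        raise ValueError("normative standard deviation must be positive")
    return (score - norm.mean) / norm.sd


def classify(score: float, norm: Norm, cutoff: float = IMPAIRMENT_Z_CUTOFF) -> str:
    """Label a score 'impaired' if its z-score is at or below the cutoff."""
    return "impaired" if z_score(score, norm) <= cutoff else "within normal range"


if __name__ == "__main__":
    # Hypothetical tests and numbers for illustration only; not data from the study.
    norms = {
        "object_naming": Norm(mean=28.0, sd=2.0),
        "line_orientation": Norm(mean=25.0, sd=3.0),
    }
    model_scores = {"object_naming": 29.0, "line_orientation": 16.0}
    for test, score in model_scores.items():
        z = z_score(score, norms[test])
        print(f"{test}: z = {z:+.2f} -> {classify(score, norms[test])}")
```

With these made-up numbers, the orientation score (z = -3.00) is flagged as impaired while the object-naming score (z = +0.50) falls within the normal range. Clinical practice uses varying cutoffs (for example, 1.5 or 2 standard deviations below the mean, or percentile ranks), so the threshold is exposed as a parameter rather than hard-coded into the logic.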

These deficits are not trivial; they involve foundational skills that humans develop without any dedicated training. Spatial orientation, the perception of continuity in visual stimuli, and an understanding of occlusion (where one object partially hides another) are all aspects of visual perception that humans master through normal development. VLMs, by contrast, appear to struggle with these concepts, revealing a profound disconnect between artificial systems and innate human perception.

These findings carry practical implications as artificial intelligence becomes integrated into society. As VLMs are embedded in applications such as autonomous systems, healthcare diagnostics, and educational technologies, understanding their limitations is critical to ensuring that they complement rather than compromise human capabilities. If these models are deployed without addressing their perceptual gaps, they could misinterpret visual scenes and drive poor decisions, creating real risks in sensitive domains.

These findings also prompt a re-evaluation of how future VLMs are designed. If the goal is for artificial models to match human visual reasoning, developers must focus not only on algorithms that can process complex images but also on building in an understanding of fundamental visual principles. This dual approach could help bridge the divide between sophisticated recognition and the foundational visual understanding that humans acquire naturally.

To understand the true potential of artificial intelligence, we must look beyond performance metrics in isolation. Evaluating these systems within a framework informed by neuropsychology highlights the complexity of visual processing and offers clues for future research. By combining insights from psychology with advances in AI, we can better guide the development of VLMs that do more than mimic human behavior and instead aspire to a deeper, more nuanced understanding of the visual world.

As VLM technology continues to evolve, insights from this research will be vital for guiding the next generation of models, and the conversation about AI and visual processing is only gaining momentum. For those interested in the intersection of artificial intelligence and cognitive psychology, the findings are a call to treat these performance deficits as a constructive roadmap rather than a mere shortcoming. Addressing foundational visual concepts could open new possibilities, allowing artificial systems to interact with their environments in ways that feel more intuitive and human-like.

Ultimately, the investigation into VLM capabilities invites a broader dialogue about the ethics of deploying AI systems across various fields. Understanding their limitations, particularly in basic visual reasoning, can help developers and stakeholders make informed decisions about the roles these systems should play. Human oversight, especially in high-stakes environments, is essential for ensuring that these technologies are used both effectively and responsibly. Closing the gap between VLM performance and human perception will undoubtedly shape the future of AI research and application.

In conclusion, as the capabilities and limitations of visual language models come into clearer view, we arrive at a moment ripe for reflection and action. Assessing VLMs through the lens of neuropsychology yields valuable insights into artificial visual perception. It challenges the narrative that current AI already excels at visual reasoning and points toward more integrative systems that combine recognition capabilities with a basic understanding of the world they navigate. As we consider the future of artificial intelligence, the lessons from these studies will help shape the innovations to come.

Subject of Research: Visual language models’ performance in visual reasoning tasks compared to human visual perception.

Article Title: Visual language models show widespread visual deficits on neuropsychological tests.

Article References: Tangtartharakul, G., Storrs, K.R. Visual language models show widespread visual deficits on neuropsychological tests. Nat Mach Intell (2026). https://doi.org/10.1038/s42256-026-01179-y

Image Credits: AI Generated

DOI: https://doi.org/10.1038/s42256-026-01179-y

Keywords: Visual language models, artificial intelligence, visual reasoning, neuropsychology, human perception, cognitive psychology.