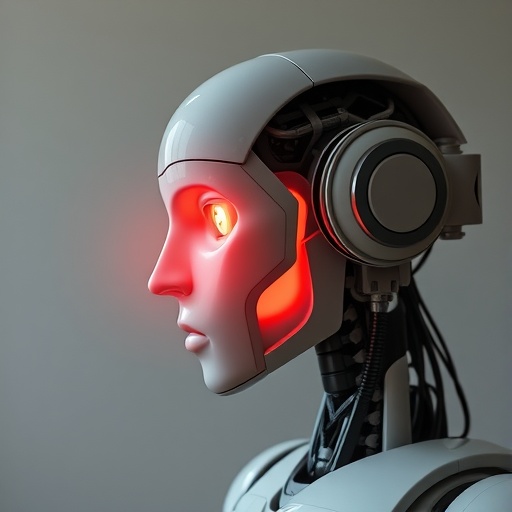

In a study published in BMC Psychology, researchers examine how people perceive minds in machines, focusing on how the theory of mind that people attribute to robots shapes human-robot empathy. The work, by Fan, Zheng, Li, and colleagues, advances our understanding of the psychological dynamics underlying interactions between humans and artificial agents. As robots become part of daily life, whether and how humans attribute minds to them has significant implications for both technology design and social psychology.

Theory of mind, the ability to attribute mental states such as beliefs, intentions, desires, and emotions to oneself and others, is traditionally regarded as a hallmark of human social cognition. Extending the concept to robots raises a central question: do people genuinely believe robots possess minds, or is this a form of anthropomorphism? The researchers explored this question through mind perception theory, which holds that people attribute minds along two dimensions: agency (the capacity to act with intention) and experience (the capacity for feeling and emotion). This framework helps explain how empathy toward non-human agents might arise.

Methodologically, the study combined experimental psychology with human-robot interaction paradigms, using carefully designed scenarios in which participants engaged with robots that varied in their apparent capacity for agency and experience. By manipulating these dimensions, the researchers could observe corresponding changes in empathy, supporting the view that perceived theory of mind is not merely a superficial attribution but a psychological mechanism that actively shapes emotional engagement.

One of the central findings was that when robots are perceived as having greater agency, people are more likely to attribute intentional states to them, fostering respect and cognitive empathy. Conversely, when robots are seen as capable of experiencing emotions, affective empathy tends to increase, encouraging more compassionate and supportive attitudes. These two pathways show that empathy toward robots is multifaceted and sensitive to the specific mental capacities ascribed to them.

This insight challenges simplistic views that reduce human-robot empathy to mere projection or novelty. Instead, it suggests that people engage in complex social-cognitive processing with machines, calibrating their emotional and cognitive responses to the robot's perceived mental qualities. Such processing may help explain the success of social robots in therapeutic, educational, and service roles, where effective empathic engagement is crucial.

The implications for robot design are profound. Engineers and designers can strategically emphasize or modulate cues related to agency and experience to tailor robots for specific empathetic functions. For example, a caregiving robot might benefit from enhanced experiential cues to evoke nurturing emotions from users, while an assistant robot might prioritize agency signals to reinforce trust and cooperation. This study thus paves the way for more psychologically informed approaches to human-robot interaction.

Moreover, the research offers a new lens on the ethical dimensions of deploying robots in society. If humans can form genuine empathic bonds with machines they perceive as having minds, questions arise about emotional manipulation, dependency, and the rights of artificial agents. Because empathy can be elicited through mind attribution, it becomes essential to consider how this power is wielded and regulated.

The study also situates its findings within the broader mind perception literature, bridging cognitive science, affective neuroscience, and robotics. It shows that classical psychological theories retain explanatory power when applied to artificial entities, suggesting that the boundary between human and machine social cognition may be more permeable than previously thought.

Empirical data from the study showed that even minimal cues of agency or experience can significantly influence empathy ratings. For instance, subtle movements or emotive facial expressions on robots increased the degree to which participants attributed feelings to them, while goal-directed behaviors triggered perceptions of intentionality. These findings underscore the critical role of design details in shaping psychological responses.

Importantly, the study stresses that empathy towards robots does not necessarily mirror human-human empathy but constitutes a related yet distinct phenomenon. The authors discuss how this distinction could inform the development of interaction protocols that respect both human emotional authenticity and the mechanical nature of robots, avoiding unrealistic expectations while maximizing social benefits.

Additionally, the findings are relevant to emerging AI systems that give robots learning and adaptive capabilities. As these systems produce more lifelike behaviors, people's tendency to attribute theory of mind to robots may intensify, amplifying both the opportunities and the challenges of social integration and trust.

Looking ahead, this research opens new avenues for interdisciplinary collaboration, encouraging psychologists, roboticists, ethicists, and designers to converge on developing machines that are not only functionally capable but socially and emotionally intelligible. The nuanced understanding of mind perception in robots established here lays a foundation for creating empathetic technology that can genuinely enrich human experiences.

Ultimately, Fan and colleagues have provided the scientific community and the public alike with compelling evidence that the way we perceive minds in machines profoundly influences our empathetic engagement with them. Their work foregrounds the psychological richness of human-robot interactions and promises to inspire future innovations that thoughtfully integrate artificial agents into our social fabric.

Subject of Research: Human perception of mind in robots and its effects on human-robot empathy, examined through theory of mind frameworks.

Article Title: Perceiving minds in machines: how perceived theory of mind in robots influences human–robot empathy through the lens of mind perception theory.

Article References:

Fan, R., Zheng, Y., Li, J. et al. Perceiving minds in machines: how perceived theory of mind in robots influences human–robot empathy through the lens of mind perception theory. BMC Psychol 13, 1379 (2025). https://doi.org/10.1186/s40359-025-03655-3

Image Credits: AI Generated

DOI: https://doi.org/10.1186/s40359-025-03655-3