A research team from Southwest Jiaotong University has unveiled a framework that significantly enhances zero-shot object navigation in embodied AI agents. Published on January 15, 2026, in the journal Frontiers of Computer Science, their approach, termed the Bidirectional Chain-of-Thought (BiCoT) framework, changes how artificial agents reason about and traverse unfamiliar environments without prior task-specific training.

Zero-shot object navigation is one of the toughest challenges in robotics and AI: it requires agents to locate specific targets in environments they have never encountered before. Unlike traditional machine learning models, which rely heavily on extensive training data and often falter when faced with novel scenes, zero-shot methods strive for generalization and adaptability. Existing zero-shot navigation techniques predominantly reason from the target’s perspective alone, which can limit an agent’s contextual understanding of the environment and reduce navigational efficiency.

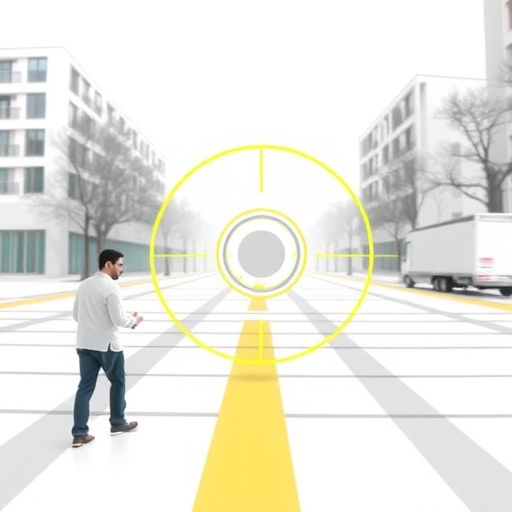

To address these limitations, the BiCoT framework introduces a bidirectional reasoning mechanism. The system constructs two distinct yet interconnected Chain-of-Thought (CoT) graphs. The first graph infers objects likely to be found near the target, in effect generating a conceptual map of the target’s immediate surroundings through semantic reasoning. The second graph is built from the objects detected in the agent’s current field of view, grounding navigation decisions in real-time perceptual data.

What sets BiCoT apart is its use of large language models to compute the relevance between these two CoT graphs. By quantifying their semantic and contextual correlations, the agent can prioritize the pathways and exploration areas most likely to lead to the target, improving both search efficiency and success rate. This two-pronged strategy amounts to bidirectional reasoning: the agent reasons about where the target might be from both ends of the search, the target’s expected surroundings and its own current observations, rather than from a single perspective.
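
The relevance computation described above can be illustrated with a minimal sketch. It is not the authors’ implementation: the article does not specify the graph structure, the prompts, or the scoring formula, so the names `relevance`, `score_view`, `expected_near_tv`, and `views` are hypothetical, and a small hand-written lookup table stands in for the large language model.

```python
from typing import Callable, Dict, Iterable, Tuple

# Hypothetical stand-in for an LLM relevance judgment between two object names.
# A real system would query a language model; these values are invented.
_TOY_RELEVANCE: Dict[Tuple[str, str], float] = {
    ("armchair", "sofa"): 0.7,
    ("sofa", "tv"): 0.9,
    ("sink", "tv"): 0.1,
    ("towel", "tv"): 0.05,
}


def relevance(a: str, b: str) -> float:
    """Symmetric lookup: identical names score 1.0, unknown pairs 0.0."""
    if a == b:
        return 1.0
    return _TOY_RELEVANCE.get((a, b), _TOY_RELEVANCE.get((b, a), 0.0))


def score_view(
    target_context: Iterable[str],
    observed: Iterable[str],
    rel: Callable[[str, str], float] = relevance,
) -> float:
    """Average, over observed objects, of the best match against the
    objects expected near the target. Returns 0.0 if either side is empty."""
    expected = list(target_context)
    seen = list(observed)
    if not expected or not seen:
        return 0.0
    return sum(max(rel(o, e) for e in expected) for o in seen) / len(seen)


# Target-side graph: objects assumed to appear near a "tv" (illustrative only).
expected_near_tv = ["tv", "sofa", "coffee table"]

# Agent-side graph: objects detected in two candidate directions.
views = {
    "left": ["armchair", "coffee table"],
    "right": ["sink", "towel"],
}

scores = {name: score_view(expected_near_tv, objs) for name, objs in views.items()}
best_direction = max(scores, key=scores.get)
print(scores, best_direction)
```

In this toy setup the agent would explore `left` first, because the objects seen there match the target’s expected surroundings more closely. Averaging the best match per observed object is only one plausible aggregation, chosen here for simplicity.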

The team tested the BiCoT framework on two widely used benchmarks for embodied navigation research: Matterport3D (MP3D) and Habitat-Matterport3D (HM3D). These datasets contain complex and diverse indoor environments that pose significant challenges for autonomous agents. In the experiments, BiCoT achieved a higher success rate (SR) and better navigational efficiency, measured as Success weighted by Path Length (SPL), than prevailing zero-shot methods. Specifically, performance improvements exceeded 3.0% on MP3D and 5.5% on the more demanding HM3D benchmark, indicating that the framework generalizes across varied spatial contexts.
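
The article reports SR and SPL without defining them. The sketch below uses the definitions that are standard in the embodied navigation literature: SR is the fraction of successful episodes, and SPL credits each successful episode with the ratio of the shortest path length to the longer of the shortest path and the path the agent actually took. The `Episode` type and the episode values are invented for illustration and are not results from the paper.

```python
from typing import List, NamedTuple


class Episode(NamedTuple):
    success: bool          # did the agent reach the target?
    shortest_path: float   # shortest start-to-target distance (assumed > 0)
    agent_path: float      # distance the agent actually travelled


def success_rate(episodes: List[Episode]) -> float:
    """Fraction of episodes in which the target was reached."""
    return sum(e.success for e in episodes) / len(episodes)


def spl(episodes: List[Episode]) -> float:
    """Success weighted by Path Length, averaged over all episodes.
    Failed episodes contribute 0; successful ones contribute at most 1."""
    total = 0.0
    for e in episodes:
        if e.success:
            total += e.shortest_path / max(e.agent_path, e.shortest_path)
    return total / len(episodes)


# Invented example episodes (not data from the paper).
episodes = [
    Episode(True, 5.0, 5.0),    # optimal path: contributes 1.0
    Episode(True, 4.0, 8.0),    # twice the optimal length: contributes 0.5
    Episode(False, 6.0, 12.0),  # failure: contributes 0.0
    Episode(True, 3.0, 4.0),    # contributes 0.75
]

print(success_rate(episodes))  # 0.75
print(spl(episodes))           # 0.5625
```

Because SPL can never exceed SR, a method that succeeds often but wanders on the way to the target will show a gap between the two numbers, which is why navigation papers typically report both.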

Beyond raw performance metrics, the BiCoT approach offers deeper insights into how integrated cognitive mapping and semantic reasoning can empower embodied AI systems. Its dual-graph reasoning mechanism reflects an emerging paradigm in robotics where abstract, language-driven thought processes synergize with sensor-grounded environmental awareness. This duality is crucial for real-world deployment scenarios, where unpredictable layouts and dynamic settings require agents to constantly reconcile prior semantic expectations with new perceptual inputs.

The successful demonstration of BiCoT’s effectiveness paves the way for exciting future research avenues. The team envisions refining the object-reasoning process to incorporate more nuanced hierarchical relationships between detected objects, targeting richer semantic context and improved predictive capabilities. Additionally, there is a strong impetus to explore multi-modal learning integrations, potentially combining visual, linguistic, and spatial data streams, to develop agents capable of even more sophisticated environment understanding and adaptive navigation.

As autonomous systems continue to permeate our daily lives—from domestic robots and delivery drones to search-and-rescue units—the ability to navigate unfamiliar spaces efficiently and reliably becomes paramount. The BiCoT framework’s contributions thus represent not only academic milestones but also critical building blocks toward AI agents that can seamlessly operate in the chaotic complexity of the real world.

Moreover, this research highlights the growing role of large language models beyond traditional natural language processing domains. Their application in evaluating semantic relevance between graphical representations of spatial knowledge introduces a new interdisciplinary nexus between language understanding and robotic cognition, which promises to yield transformative results in AI research.

The implications of this study reach far beyond object navigation. By leveraging bidirectional reasoning mechanisms grounded in chain-of-thought architectures, future embodied agents could also excel in tasks requiring complex decision-making, problem-solving, and dynamic interaction in unstructured environments, thereby accelerating AI’s integration into multifaceted societal roles.

In summary, the Bidirectional Chain-of-Thought framework from Southwest Jiaotong University advances zero-shot object navigation by giving agents a reasoning process that bridges perception and semantic knowledge. Its results on two challenging benchmarks support the methodology as a foundation for future work in intelligent robotics and mark a significant stride toward truly autonomous AI navigation in the wild.

Subject of Research: Not applicable

Article Title: Bidirectional chain-of-thought for zero-shot object navigation

News Publication Date: 15-Jan-2026

Web References: 10.1007/s11704-025-41283-7

Keywords: Computer science, zero-shot learning, embodied AI, object navigation, large language models, chain-of-thought reasoning, bidirectional reasoning, autonomous navigation, semantic mapping, multi-modal learning, AI cognition, robotics