A new approach in urban studies links the physical cityscape to the emotional experience of the people who live in it. Researchers at the University of Missouri, led by assistant professor of architectural studies Jayedi Aman in collaboration with geography and engineering professor Tim Matisziw, have developed a methodology that uses artificial intelligence to capture and map the sentiments of city dwellers as they move through urban environments. Their work extends traditional architectural and planning frameworks by treating human emotion as an input to urban design and management.

Aman and Matisziw’s research tackles a fundamental challenge: understanding how people emotionally interact with the places they live in and move through daily. Cities have always been defined by their physical features, such as buildings, roads, and parks, but the subjective feelings these elements generate have been hard to capture in quantitative analysis. To address this, the team developed an AI-driven system that mines publicly shared social media content, specifically Instagram posts tagged with precise locations, to extract emotional cues. Combining natural language processing with computer vision offers a new view of how residents collectively feel about urban places.

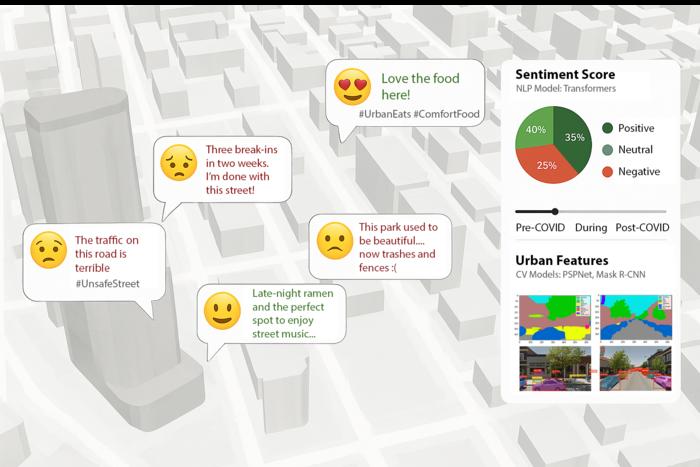

The foundation of their sentiment mapping rests on machine learning algorithms trained to interpret both the textual and visual components of social media posts. This dual-modality approach enables the extraction of nuanced emotional states—ranging from happiness and relaxation to frustration and discomfort. The AI tool parses user-generated captions and comments with advanced language models, discerning sentiment polarity and categories. Simultaneously, it analyzes images through deep learning-driven vision models to corroborate and enrich the emotional context. This comprehensive sentiment analysis transcends the limitations of traditional surveys by leveraging real-time, location-specific data voluntarily shared by millions of people.
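The dual-modality idea described above can be illustrated with a minimal sketch. It assumes that a text model and an image model have each already produced a polarity score for a post, and it combines the two with a simple weighted average. The score ranges, the `text_weight` value, and the `neutral_band` threshold are hypothetical choices made for illustration; the article does not state how the published system fuses its text and image signals.

```python
from dataclasses import dataclass


@dataclass
class PostScores:
    """Hypothetical per-post outputs from a text model and an image model."""
    text_polarity: float   # assumed range: -1.0 (negative) to 1.0 (positive)
    image_polarity: float  # assumed range: -1.0 (negative) to 1.0 (positive)


def fuse_polarity(scores: PostScores, text_weight: float = 0.6) -> float:
    """Combine the two modality scores with a weighted average.

    text_weight is an assumed tuning parameter, not a value from the study.
    """
    if not 0.0 <= text_weight <= 1.0:
        raise ValueError("text_weight must be between 0 and 1")
    image_weight = 1.0 - text_weight
    return text_weight * scores.text_polarity + image_weight * scores.image_polarity


def label_sentiment(polarity: float, neutral_band: float = 0.2) -> str:
    """Map a fused polarity score to a coarse label (assumed thresholds)."""
    if polarity > neutral_band:
        return "positive"
    if polarity < -neutral_band:
        return "negative"
    return "neutral"


if __name__ == "__main__":
    post = PostScores(text_polarity=0.9, image_polarity=0.3)
    fused = fuse_polarity(post)
    print(fused, label_sentiment(fused))
```

A weighted average is only one possible fusion rule; it is used here because it makes the contribution of each modality explicit.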

Critically, Aman and Matisziw integrated their sentiment data with spatial analysis powered by Google Street View imagery, employing a second AI framework to characterize the physical attributes of the urban landscape associated with the sentiment hotspots. This image-processing system assesses environmental features such as greenery, building density, street furniture, and lighting conditions to identify patterns that correlate with distinct emotional responses. By linking emotional data with physical environments, the researchers constructed detailed “sentiment maps” that visually represent how different city areas make residents feel.
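As a rough sketch of how geotagged sentiment might be linked to a physical attribute, the example below bins posts into a latitude/longitude grid, averages polarity per cell, and computes a Pearson correlation between per-cell sentiment and a per-cell feature value. The grid size, the coordinates, and the greenery fractions are all invented for illustration, and the correlation step is an assumed stand-in for whatever spatial analysis the researchers actually used.

```python
import math
from collections import defaultdict


def grid_cell(lat: float, lon: float, cell_deg: float = 0.01) -> tuple:
    """Return the integer grid index containing a coordinate (assumed cell size)."""
    return (math.floor(lat / cell_deg), math.floor(lon / cell_deg))


def mean_sentiment_by_cell(posts, cell_deg: float = 0.01) -> dict:
    """Average polarity per grid cell; posts are (lat, lon, polarity) tuples."""
    totals = defaultdict(lambda: [0.0, 0])  # cell -> [sum of polarity, post count]
    for lat, lon, polarity in posts:
        cell = grid_cell(lat, lon, cell_deg)
        totals[cell][0] += polarity
        totals[cell][1] += 1
    return {cell: total / count for cell, (total, count) in totals.items()}


def pearson(xs, ys) -> float:
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / math.sqrt(var_x * var_y)


if __name__ == "__main__":
    # Invented sample posts: (latitude, longitude, fused polarity).
    posts = [
        (38.955, -92.325, 0.8),
        (38.956, -92.326, 0.4),
        (38.975, -92.325, -0.6),
        (38.995, -92.345, 0.1),
    ]
    sentiment = mean_sentiment_by_cell(posts)
    # Invented greenery fraction per cell, e.g. from street-level imagery.
    greenery = {
        grid_cell(38.955, -92.325): 0.45,
        grid_cell(38.975, -92.325): 0.05,
        grid_cell(38.995, -92.345): 0.20,
    }
    cells = sorted(sentiment)
    print(pearson([greenery[c] for c in cells], [sentiment[c] for c in cells]))
```

A correlation computed this way describes association only; it does not show that a feature causes a given emotional response.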

The implications of creating such a digital emotional atlas are significant. City officials, urban planners, and community advocates gain access to dynamic, data-driven insights into the well-being and perceptions of city inhabitants. Traditional means of gauging public sentiment, such as opinion polls or feedback sessions, are often time-consuming, costly, and prone to sampling bias. By contrast, this AI-powered mapping taps into organic, abundant data sources, allowing for near real-time monitoring of changes in urban sentiment. The approach broadens feedback by including individuals who might otherwise be underrepresented in official channels.

Building upon these findings, Aman and Matisziw envision the development of an “urban digital twin”—a sophisticated virtual replica of the city that incorporates real-time emotional data. Such a digital twin would empower municipal leaders to visualize where positive or negative sentiments cluster, offering actionable insights for targeted interventions. For instance, if social media analysis reveals a surge of positive posts around a newly developed park, planners can dissect the specific environmental features driving this uplift, be it lush vegetation, recreational facilities, or community events. Conversely, areas marked by apprehension or dissatisfaction can be flagged for safety audits, infrastructure upgrades, or community engagement initiatives.
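The flagging step envisioned for such a digital twin could look something like the sketch below. It takes per-area summaries of mean polarity and post count and assigns each area a coarse status. The area names, thresholds, minimum post count, and status labels are hypothetical; the article describes the digital twin as a future goal and does not specify any decision rules.

```python
def flag_areas(area_stats: dict, min_posts: int = 5,
               low: float = -0.3, high: float = 0.3) -> dict:
    """Assign a coarse status to each area.

    area_stats maps an area name to (mean_polarity, post_count).
    All thresholds are assumed values chosen for illustration.
    """
    flags = {}
    for area, (mean_polarity, post_count) in area_stats.items():
        if post_count < min_posts:
            # Too few posts to say anything about the area.
            flags[area] = "insufficient data"
        elif mean_polarity <= low:
            # Candidate for a safety audit or community engagement.
            flags[area] = "review"
        elif mean_polarity >= high:
            # Candidate for studying which features drive positive sentiment.
            flags[area] = "study"
        else:
            flags[area] = "no action"
    return flags


if __name__ == "__main__":
    # Invented example summaries: area -> (mean polarity, number of posts).
    stats = {
        "new_park": (0.62, 140),
        "underpass": (-0.45, 37),
        "side_street": (0.05, 22),
        "cul_de_sac": (0.90, 2),
    }
    for area, status in flag_areas(stats).items():
        print(area, status)
```

The minimum post count is there so that a handful of posts cannot mark an area as a hotspot, which is a design choice of this sketch and not a documented feature of the researchers' system.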

Beyond improving urban design, emotional mapping holds promise for enhancing public safety and disaster response. By identifying zones where residents frequently express feelings of insecurity or distress, emergency services can prioritize resources and preventive measures. After natural disasters or major incidents, sentiment data can gauge the psychological impact on communities, informing recovery strategies and mental health support deployment. Thus, emotional data becomes a critical layer in comprehensive urban resilience planning.

Importantly, the researchers emphasize that artificial intelligence supplements rather than replaces human judgment and expertise. Matisziw highlights that AI’s strength lies in identifying subtle trends and latent patterns that might elude traditional analysis, making it a useful complement to policymakers’ own judgment. The integration of computational social science with human geography and architectural studies shows how interdisciplinary approaches can reshape urban planning into a more empathetic and responsive discipline.

Looking ahead, the incorporation of subjective sentiment data alongside conventional urban indicators like traffic flow, air quality, and weather conditions heralds a new era in smart city development. City dashboards enriched with emotional analytics could enable officials to make informed decisions that resonate with the lived experiences of residents. This holistic perspective supports urban environments that not only function efficiently but also nurture psychological well-being, fostering a stronger sense of community and belonging.

This pioneering work was recently detailed in the article titled “Urban sentiment mapping using language and vision models in spatial analysis,” published in Frontiers in Computer Science. The publication, dated March 13, 2025, thoroughly outlines the technical underpinnings, including the machine learning models deployed for sentiment classification, image feature extraction techniques, and the integration of multimodal data within spatial frameworks. It underscores the potential for scalable, adaptable systems applicable to diverse urban contexts worldwide.

The research also advances computational methods in artificial intelligence by combining natural language processing, sentiment analysis, and computer vision in a geospatial setting. Its use of Instagram’s large volume of geotagged posts is an example of digital data mining applied to social research. Through careful methodological design, including validation against traditional survey responses and ground-truthing, the study aims for a robust, reliable interpretation of emotional signals in urban spaces.

Ultimately, this AI-powered emotional mapping initiative marks a paradigm shift in how cities understand their residents—not as mere inhabitants coexisting with infrastructure but as emotional beings whose feelings are integral to urban vitality. By unlocking the expressive data embedded in social media, Aman, Matisziw, and their team are laying the groundwork for cities that are emotionally intelligent, adaptive, and genuinely human-centered in their evolution.

Subject of Research: Urban Sentiment Analysis, AI-driven Emotional Mapping, Spatial Analysis, Urban Digital Twins

Article Title: Urban sentiment mapping using language and vision models in spatial analysis

News Publication Date: 13-Mar-2025

Web References: https://www.frontiersin.org/journals/computer-science/articles/10.3389/fcomp.2025.1504523/full

References: DOI 10.3389/fcomp.2025.1504523

Image Credits: Photo courtesy Jayedi Aman

Keywords: Artificial intelligence, Sentiment analysis, Urban studies, Computer vision, Natural language processing, Spatial analysis, Urban planning, Digital twins, Machine learning, Emotional mapping, Public opinion, Human geography