When Large Language Models Meet Evolutionary Algorithms: Unlocking New Frontiers in Artificial Intelligence

In the rapidly evolving landscape of artificial intelligence, two distinct yet powerful paradigms, Large Language Models (LLMs) and Evolutionary Algorithms (EAs), have each transformed their own domains. Researchers have recently begun to map the conceptual parallels between these methodologies, opening avenues for hybrid methods that combine their strengths to extend what AI systems can do. This convergence points toward more adaptive, versatile, and intelligent systems that can learn from massive datasets while also exploring novel solutions in complex problem spaces.

Large Language Models such as OpenAI’s GPT series and Google’s PaLM are built on the Transformer architecture, whose self-attention mechanism models the relationships between tokens in a sequence. Trained on large text corpora, these models learn conditional probability distributions that predict the next token from its preceding context. They excel at tasks ranging from natural language understanding to generation and have shown emergent capabilities in areas as varied as creative writing, mathematical reasoning, and molecular design. Their main strength lies in absorbing statistical regularities from vast corpora, effectively distilling human knowledge into a form that can be queried and extended.
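To make the mechanism concrete, the following minimal Python sketch shows a single attention head with a causal mask, as used in decoder-only models such as GPT, followed by a softmax over a toy vocabulary that yields a next-token distribution. The function names and tiny matrices are illustrative assumptions; production models stack many multi-head layers with feed-forward blocks, normalization, and learned embeddings.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def softmax(xs: Vector) -> Vector:
    """Numerically stable softmax: turns raw scores into probabilities."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(M: Matrix, v: Vector) -> Vector:
    """Matrix-vector product M @ v."""
    return [sum(a * b for a, b in zip(row, v)) for row in M]


def self_attention(X: List[Vector], Wq: Matrix, Wk: Matrix, Wv: Matrix) -> List[Vector]:
    """Single-head scaled dot-product self-attention with a causal mask.

    X holds one embedding vector per token; Wq, Wk, Wv project each
    embedding to its query, key, and value vectors.
    """
    Q = [matvec(Wq, x) for x in X]
    K = [matvec(Wk, x) for x in X]
    V = [matvec(Wv, x) for x in X]
    d_k = len(Q[0])
    outputs = []
    for i, q in enumerate(Q):
        # Causal mask: token i may attend only to positions 0..i.
        scores = [sum(a * b for a, b in zip(q, K[j])) / math.sqrt(d_k) for j in range(i + 1)]
        weights = softmax(scores)
        # Output is the attention-weighted average of the visible value vectors.
        outputs.append([sum(w * V[j][c] for j, w in enumerate(weights)) for c in range(len(V[0]))])
    return outputs


def next_token_distribution(h_last: Vector, W_out: Matrix) -> Vector:
    """Project the last hidden state to vocabulary logits and normalise,
    giving p(next token | preceding tokens)."""
    return softmax(matvec(W_out, h_last))
```

With identity projection matrices, the first token can attend only to itself, so its output equals its own input vector; later tokens receive a weighted mix of everything before them.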

Conversely, Evolutionary Algorithms draw on biological evolution, iteratively applying analogues of natural selection, mutation, and reproduction. EAs are well suited to rugged fitness landscapes and to black-box problems, where the objective function is complex, non-differentiable, or known only through evaluations. By evolving a population of candidate solutions over successive generations, they find optimized or diverse solutions for tasks such as hyperparameter tuning in machine learning, neural architecture search, and robot control. Their population-based, exploratory search contrasts with the gradient-based, data-driven training of LLMs, which makes combining the two particularly compelling.
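The loop below is a minimal genetic algorithm sketch, assuming bit-string individuals and the toy OneMax objective (count the 1 bits) as the black-box fitness. Tournament selection, one-point crossover, and bit-flip mutation are standard textbook choices picked for illustration, not the specific operators discussed in the research.

```python
import random
from typing import Callable, List, Tuple

Individual = List[int]


def one_max(bits: Individual) -> int:
    """Toy black-box fitness: the EA only calls it, never inspects it."""
    return sum(bits)


def tournament_select(pop: List[Individual], fits: List[int], k: int, rng: random.Random) -> Individual:
    """Selection: return the fittest of k randomly sampled individuals."""
    idx = rng.sample(range(len(pop)), k)
    return pop[max(idx, key=lambda i: fits[i])]


def crossover(a: Individual, b: Individual, rng: random.Random) -> Individual:
    """Reproduction: one-point crossover of two parents."""
    cut = rng.randrange(1, len(a))
    return a[:cut] + b[cut:]


def mutate(bits: Individual, rate: float, rng: random.Random) -> Individual:
    """Mutation: flip each bit independently with probability `rate`."""
    return [1 - b if rng.random() < rate else b for b in bits]


def evolve(fitness: Callable[[Individual], int], n_bits: int = 20, pop_size: int = 30,
           generations: int = 60, seed: int = 0) -> Tuple[Individual, int]:
    rng = random.Random(seed)
    pop = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(pop_size)]
    for _ in range(generations):
        fits = [fitness(ind) for ind in pop]
        elite = pop[max(range(pop_size), key=lambda i: fits[i])]
        children = [elite]  # elitism: carry the best individual over unchanged
        while len(children) < pop_size:
            p1 = tournament_select(pop, fits, 3, rng)
            p2 = tournament_select(pop, fits, 3, rng)
            children.append(mutate(crossover(p1, p2, rng), 1.0 / n_bits, rng))
        pop = children
    fits = [fitness(ind) for ind in pop]
    best = max(range(pop_size), key=lambda i: fits[i])
    return pop[best], fits[best]
```

Because the best individual is copied unchanged into each new generation, the best fitness in the population never decreases from one generation to the next.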

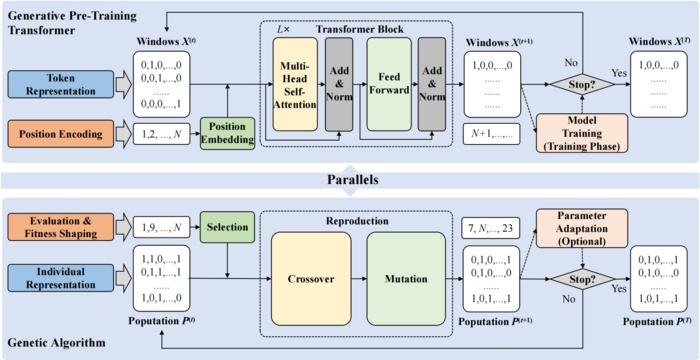

The research team led by Professor Jiao Licheng at Xidian University has systematically examined the parallels between these two paradigms. At the micro level, the team identifies five key conceptual correspondences that can serve as foundations for integrated AI system design: token representation in LLMs corresponds to individual representation in EAs; position encoding corresponds to fitness shaping; position embedding corresponds to selection; the core architectural design of Transformers corresponds to reproduction operators; and model training corresponds to parameter adaptation strategies in evolutionary algorithms.
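One way to read the pairing of position encoding with fitness shaping is through a shared property: both map an ordinal index (a token's position, or an individual's fitness rank) to a fixed value, independent of the underlying content. The sketch below places the sinusoidal encoding of the original Transformer next to the rank-based utility function used in natural evolution strategies. It is an illustrative reading of the analogy under that assumption, not the formulation given in the paper.

```python
import math
from typing import List


def sinusoidal_position_encoding(pos: int, d_model: int) -> List[float]:
    """Transformer positional encoding: a fixed vector that depends only on
    the token's position, not on which token sits there."""
    pe = []
    for i in range(d_model):
        angle = pos / (10000 ** (2 * (i // 2) / d_model))
        pe.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return pe


def rank_based_utilities(fitnesses: List[float]) -> List[float]:
    """Fitness shaping as in natural evolution strategies: each individual's
    weight depends only on its rank, not on its raw fitness value."""
    n = len(fitnesses)
    # Utility for rank r = 1 (best) .. n (worst); clipped at zero, then centred.
    raw = [max(0.0, math.log(n / 2 + 1) - math.log(r)) for r in range(1, n + 1)]
    total = sum(raw)
    util_by_rank = [u / total - 1.0 / n for u in raw]
    order = sorted(range(n), key=lambda i: fitnesses[i], reverse=True)  # best first
    utilities = [0.0] * n
    for rank, idx in enumerate(order):
        utilities[idx] = util_by_rank[rank]
    return utilities
```

Rescaling every fitness value by a large factor leaves the utilities unchanged, because only the ordering matters, just as a positional encoding ignores token identity.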

Beyond these micro-level insights, the team also explores macro-level interdisciplinary directions that combine LLMs and EAs in hybrid frameworks. One of the most prominent is evolutionary fine-tuning under black-box conditions, especially in “model-as-a-service” scenarios. Many commercial LLMs are offered only through APIs that accept inference calls but expose neither gradients nor internal states, so classical gradient-based optimization is infeasible. Because Evolutionary Algorithms need only the scores returned by forward evaluations, they can systematically optimize the prompts or inputs sent to these models without any gradient signal. This combination pairs the black-box friendliness of EAs with the rich representational power of LLMs.
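A minimal sketch of forward-only optimization follows. It assumes the prompt has been parameterized as a short real-valued vector and that the service returns a single score per query; `mock_api_score` is a hypothetical stand-in for that API call. A simple (μ/μ, λ) evolution strategy, which recombines the μ best of λ samples into a new mean, is used here in place of more sophisticated optimizers such as CMA-ES.

```python
import random
from typing import Callable, List, Tuple


def mock_api_score(prompt_vec: List[float]) -> float:
    """HYPOTHETICAL stand-in for a model-as-a-service call. A real version would
    send the prompt to the API, run inference, and return e.g. task accuracy.
    Only this scalar is observable: no gradients, no internal states."""
    target = [0.5, -1.0, 2.0, 0.0]
    return -sum((p - t) ** 2 for p, t in zip(prompt_vec, target))


def es_optimize(score_fn: Callable[[List[float]], float], dim: int, mu: int = 5,
                lam: int = 20, sigma: float = 0.5, iterations: int = 100,
                seed: int = 0) -> Tuple[List[float], float]:
    """(mu/mu, lambda) evolution strategy: gradient-free, forward-only search."""
    rng = random.Random(seed)
    mean = [0.0] * dim
    best_vec, best_score = list(mean), score_fn(mean)
    for _ in range(iterations):
        # Sample lambda candidate prompts around the current mean.
        offspring = [[m + sigma * rng.gauss(0.0, 1.0) for m in mean] for _ in range(lam)]
        # Each candidate costs one forward (inference) query.
        scored = sorted(((score_fn(x), x) for x in offspring), key=lambda t: t[0], reverse=True)
        if scored[0][0] > best_score:
            best_score, best_vec = scored[0]
        # Recombine the mu best candidates into the next mean.
        parents = [x for _, x in scored[:mu]]
        mean = [sum(col) / mu for col in zip(*parents)]
    return best_vec, best_score
```

In a real deployment the query budget dominates cost, so `lam * iterations` (here 2,000 calls) is the figure to watch rather than wall-clock time.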

In another promising direction, researchers use LLMs themselves as evolutionary operators. When candidate solutions are expressed in natural language, an LLM can serve as the recombination and mutation operator within an EA pipeline, producing offspring that are varied yet semantically coherent. Such language-driven operators can help maintain population diversity and speed up convergence. Hybrid approaches of this kind have been applied to real-world optimization problems that resist conventional algorithms, suggesting that LLM-based evolutionary operators could become a transformative paradigm.
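The sketch below shows how an LLM could act as the crossover and mutation operator for a population of natural-language candidates. `llm` is a hypothetical text-in, text-out function standing in for whatever model client is used; the prompt wording, truncation selection, and (μ + λ) survivor selection are illustrative assumptions.

```python
import random
from typing import Callable, List

LLM = Callable[[str], str]  # HYPOTHETICAL: any text-in, text-out completion function


def llm_crossover(parent_a: str, parent_b: str, llm: LLM) -> str:
    """Recombination operator: ask the LLM to merge two candidate solutions."""
    prompt = (
        "Here are two candidate solutions:\n"
        f"1. {parent_a}\n2. {parent_b}\n"
        "Write one new solution that combines their strengths. Reply with the solution only."
    )
    return llm(prompt).strip()


def llm_mutate(candidate: str, llm: LLM) -> str:
    """Mutation operator: ask the LLM for a small, semantically sensible edit."""
    prompt = (
        "Make one small, meaningful change to this solution:\n"
        f"{candidate}\n"
        "Reply with the solution only."
    )
    return llm(prompt).strip()


def evolve_text(population: List[str], fitness: Callable[[str], float], llm: LLM,
                generations: int = 5, seed: int = 0) -> str:
    rng = random.Random(seed)
    pop = list(population)
    size = len(pop)
    for _ in range(generations):
        # Truncation selection: the better half (at least two) become parents.
        parents = sorted(pop, key=fitness, reverse=True)[: max(2, size // 2)]
        children = []
        while len(children) < size:
            a, b = rng.sample(parents, 2)
            children.append(llm_mutate(llm_crossover(a, b, llm), llm))
        # (mu + lambda) survival: parents compete with their offspring.
        pop = sorted(parents + children, key=fitness, reverse=True)[:size]
    return max(pop, key=fitness)
```

Since parents compete with their offspring for survival, the best fitness in the population cannot decrease, even when the LLM returns a poor candidate.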

The reciprocal influence between LLMs and EAs suggests a broader conceptual framework for future artificial intelligence agents. Unlike standalone systems that rely either on static knowledge or on purely stochastic exploration, such hybrid agents would continually learn from accumulated data while actively probing new solution spaces. This integration resembles biological intelligence, which combines memory and adaptability in a continuous loop, and could yield AI systems capable of ongoing self-improvement and open-ended discovery rather than the narrow specialization that limits many current systems.

Despite the promising prospects detailed in the research, achieving a unified theoretical paradigm in which every key feature of LLMs corresponds precisely to an equivalent concept in evolutionary algorithms remains an open challenge. The aim is not to mathematically prove equivalence or transform one framework fully into the other but rather to draw metaphorical and technical parallels that inspire innovative modeling and algorithmic synthesis. In doing so, researchers can identify unexplored design patterns and accelerate the creation of novel architectures that incorporate the best of both worlds.

Advances in computational power and algorithmic sophistication will be crucial enablers of this convergence. High-performance parallel computing allows LLM training to scale to billions of parameters, while modern evolutionary algorithms rely on efficient population-based search strategies and adaptive mutation schemes. Integrated systems that tightly couple these capabilities may require new software frameworks, optimization protocols, and evaluation benchmarks that reflect both learning and exploration goals.
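As an example of an adaptive mutation scheme, the sketch below implements a (1+1) evolution strategy with Rechenberg's 1/5th success rule: after each window of trials, the mutation step size grows if more than one fifth of mutations improved the solution, and shrinks if fewer did. The adaptation factor 0.82 and the 10-trial window are common textbook settings chosen here as assumptions.

```python
import random
from typing import Callable, List, Tuple


def one_plus_one_es(
    f: Callable[[List[float]], float],
    x0: List[float],
    sigma: float = 1.0,
    iterations: int = 200,
    window: int = 10,
    c: float = 0.82,
    seed: int = 0,
) -> Tuple[List[float], float, float]:
    """(1+1)-ES that minimises f, adapting its mutation step size sigma
    with the 1/5th success rule."""
    rng = random.Random(seed)
    x, fx = list(x0), f(x0)
    successes = 0
    for t in range(1, iterations + 1):
        # Mutation: perturb every coordinate with Gaussian noise of scale sigma.
        y = [xi + sigma * rng.gauss(0.0, 1.0) for xi in x]
        fy = f(y)
        if fy <= fx:  # keep the offspring only if it is at least as good
            x, fx = y, fy
            successes += 1
        if t % window == 0:
            rate = successes / window
            if rate > 0.2:
                sigma /= c  # frequent successes: steps are too cautious, widen them
            elif rate < 0.2:
                sigma *= c  # rare successes: steps overshoot, narrow them
            successes = 0
    return x, fx, sigma
```

The rule needs no gradient and no model of the landscape: the success rate alone tells the algorithm whether to explore more broadly or refine locally.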

Moreover, the societal applications of such convergent AI systems are vast. In scientific research, they could radically improve the automated generation and testing of hypotheses in domains such as drug discovery and materials science. In engineering, they may unlock adaptive control strategies and real-time optimization in complex, uncertain environments. The combination of LLM-driven semantic understanding with evolutionary optimization could revolutionize optimization workflows in business analytics, personalized education, and beyond.

Ethical considerations also accompany this technological advance. As AI agents evolve higher degrees of autonomy and emergent behavior, researchers and policymakers must address issues around transparency, accountability, and fairness. Understanding the decision-making processes of these hybrid systems will demand new interpretability tools that marry symbolic reasoning with stochastic evolution. Responsible innovation will be pivotal to securing public trust and maximizing societal benefits.

In conclusion, the pioneering research by Professor Jiao Licheng’s team marks an important step toward harmonizing two of AI’s most influential paradigms. Large Language Models and Evolutionary Algorithms possess complementary strengths—data-driven learning and exploratory adaptation—that together hold the promise of fundamentally enhancing artificial intelligence’s scope and impact. While the journey toward a fully integrated, unified framework continues, these conceptual parallels provide fertile ground for breakthroughs that may redefine how machines simulate intelligence, creativity, and resilience in the years to come.

Subject of Research: Not applicable

Article Title: When Large Language Models Meet Evolutionary Algorithms: Potential Enhancements and Challenges

News Publication Date: 27-Mar-2025

References: DOI 10.34133/research.0646

Image Credits: Copyright © 2025 Chao Wang et al.