A study published in Nature Communications reports a way to improve tumor recognition in computed tomography (CT) scans by using large-scale generative models to synthesize tumor images. The aim is to bridge the gap between limited annotated datasets and the growing demand for precise tumor identification in clinical settings, and in doing so to support more accurate and reliable diagnostic tools.

Tumor recognition in CT images has long posed challenges due to the intrinsic complexity and variability of tumor appearances. Traditional machine learning models often depend heavily on vast, meticulously labeled datasets, which are costly and time-consuming to acquire in medical contexts. Moreover, the scarcity of diverse tumor samples hinders the generalizability of these models across different patient populations and tumor types. Addressing these issues, the study pioneers a large-scale generative approach that creates realistic synthetic tumor images, effectively augmenting existing datasets and boosting the performance of tumor detection algorithms.

At the heart of this transformative research lies the deployment of advanced generative adversarial networks (GANs) tailored for CT imagery. These networks consist of two primary components: a generator capable of producing high-fidelity synthetic images and a discriminator trained to distinguish between real and generated images. Through an adversarial training process, the system iteratively refines the quality of synthetic tumors, ensuring they closely mimic real-world complexities both in texture and structural heterogeneity. This level of realism is pivotal for training robust tumor recognition frameworks that can adapt seamlessly to variations in tumor morphology.
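For reference, the adversarial training described above is conventionally written as the minimax objective from the original GAN formulation. The article does not state the exact loss used in the study, so this is the textbook form and not necessarily the authors' objective. The symbols are assumptions introduced for illustration: $G$ is the generator, $D$ the discriminator, $x$ a real CT image drawn from the data distribution, and $z$ a noise (or conditioning) input to the generator.

```latex
\min_{G} \max_{D} \;
  \mathbb{E}_{x \sim p_{\text{data}}}\bigl[\log D(x)\bigr]
  + \mathbb{E}_{z \sim p_{z}}\bigl[\log\bigl(1 - D(G(z))\bigr)\bigr]
```

The discriminator is rewarded for scoring real images near 1 and generated images near 0, while the generator is rewarded for producing images that the discriminator scores near 1.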

The methodological innovations presented extend beyond mere image generation. The researchers incorporated multi-scale learning mechanisms within the GAN architecture, enabling it to capture details ranging from macro-level anatomical structures to micro-scale tumor features. This hierarchical approach enhances the representational richness of synthesized images, addressing prior limitations where synthetic tumors lacked fine-grained pathological details essential for clinical relevance. By integrating these features, the generatively augmented data effectively contributes to higher diagnostic accuracy when used to train convolutional neural networks tasked with tumor detection.
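One common way to expose a model to both coarse and fine detail is an image pyramid, in which the same slice is represented at several resolutions. The sketch below is only an illustration of that general idea, using plain Python lists and 2x2 average pooling; the function names and the toy 4x4 "slice" are hypothetical, and the article does not describe how the study's multi-scale mechanism is actually implemented.

```python
def downsample_2x(image):
    """Halve height and width by averaging each 2x2 block.

    `image` is a list of rows of numbers. A trailing odd row or
    column is dropped.
    """
    height, width = len(image) // 2, len(image[0]) // 2
    return [
        [
            (image[2 * r][2 * c] + image[2 * r][2 * c + 1]
             + image[2 * r + 1][2 * c] + image[2 * r + 1][2 * c + 1]) / 4.0
            for c in range(width)
        ]
        for r in range(height)
    ]


def build_pyramid(image, levels):
    """Return up to `levels` resolutions: full resolution first, coarsest last."""
    pyramid = [image]
    for _ in range(levels - 1):
        current = pyramid[-1]
        if len(current) < 2 or len(current[0]) < 2:
            break  # cannot downsample any further
        pyramid.append(downsample_2x(current))
    return pyramid


# Toy 4x4 "slice" with made-up intensity values.
slice_4x4 = [
    [0, 0, 4, 4],
    [0, 0, 4, 4],
    [8, 8, 2, 2],
    [8, 8, 2, 2],
]
pyramid = build_pyramid(slice_4x4, levels=3)
for level, img in enumerate(pyramid):
    print(level, img)
```

The finest level keeps local texture, while the coarser levels summarize the surrounding anatomy; a multi-scale network would consume or produce features at each of these resolutions.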

An intriguing aspect of the study is how synthetic tumor data interacts with clinical datasets. Rather than replacing real patient data, the study combines both real and synthetic images to create hybrid datasets, leveraging their complementary strengths. This strategy mitigates issues such as data imbalance prevalent in clinical datasets, where rare tumor types are underrepresented. The enriched dataset diversity facilitates more comprehensive model training, improving sensitivity and specificity in tumor detection tasks across a spectrum of malignancies, including those notoriously difficult to identify early on.
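The hybrid-dataset idea can be sketched in a few lines. The example below is a minimal illustration under stated assumptions, not the study's pipeline: samples are represented as hypothetical `(sample_id, label)` pairs, and synthetic samples are added only to top up underrepresented classes to the size of the largest real class. The balancing rule itself is an assumption chosen for simplicity.

```python
import random
from collections import Counter


def build_hybrid_dataset(real, synthetic, seed=0):
    """Keep every real sample and top up rare classes with synthetic ones.

    `real` and `synthetic` are lists of (sample_id, label) pairs.
    Each class is filled up to the size of the largest real class,
    or as far as the available synthetic samples allow.
    """
    rng = random.Random(seed)
    real_counts = Counter(label for _, label in real)
    target = max(real_counts.values())

    # Group synthetic samples by label so they can be drawn per class.
    pool = {}
    for sample in synthetic:
        pool.setdefault(sample[1], []).append(sample)

    hybrid = list(real)
    for label, count in real_counts.items():
        needed = target - count
        candidates = pool.get(label, [])
        hybrid.extend(rng.sample(candidates, min(needed, len(candidates))))

    rng.shuffle(hybrid)
    return hybrid


# Hypothetical toy data: six common-type scans, two rare-type scans.
real = [(f"real_{i}", "common") for i in range(6)]
real += [(f"real_{i}", "rare") for i in range(6, 8)]
synthetic = [(f"synth_{i}", "rare") for i in range(10)]

hybrid = build_hybrid_dataset(real, synthetic)
label_counts = Counter(label for _, label in hybrid)
print(label_counts)
```

Because all real samples are retained and synthetic ones only fill the gap, the real data still anchors the class distribution while the rare class is no longer swamped during training.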

Quantitative assessments in the study indicate clear gains in tumor recognition performance. Models trained on the augmented datasets achieve higher precision and recall than those trained solely on real images. The models were further validated on independent test sets, where they showed improved generalization, a property crucial for deployment in varied clinical environments. These results underscore the role synthetic data can play in overcoming persistent hurdles in medical image analysis.
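For readers less familiar with the metrics named above, the following sketch shows how precision and recall are computed for a binary tumor/no-tumor decision. The labels and predictions are made-up toy values, not results from the study.

```python
def precision_recall(y_true, y_pred):
    """Compute precision and recall for binary labels (1 = tumor, 0 = no tumor)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # tumors correctly flagged
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false alarms
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # missed tumors

    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Toy example: 4 scans with a tumor, 4 without.
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]

precision, recall = precision_recall(y_true, y_pred)
print(precision, recall)  # 0.75 0.75
```

Precision answers "of the scans flagged as containing a tumor, how many really did?", while recall answers "of the scans that really contained a tumor, how many were flagged?". A model that improves both makes fewer false alarms and misses fewer tumors.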

Beyond empirical validation, the study explores the practical deployment of these generative models within clinical workflows. The synthesized images could serve as training datasets for radiologists and machine learning algorithms alike, offering a low-cost and readily scalable alternative to extensive manual annotation. Moreover, the capacity to generate diverse tumor presentations enables simulation of rare or complex cases, a valuable resource for educational purposes and algorithmic fine-tuning. This cross-disciplinary applicability highlights the transformative potential of generative synthesis beyond automated tumor recognition alone.

Ethical considerations and regulatory compliance remain paramount when integrating synthetic data into medical applications. The researchers address concerns about potential biases introduced by artificial images by implementing rigorous validation protocols and ensuring transparency in data synthesis processes. The study’s framework adheres to stringent data privacy standards, as no personal health information is directly utilized in generating synthetic tumors. These safeguards bolster confidence in the clinical acceptability and ethical use of generative technologies within healthcare.

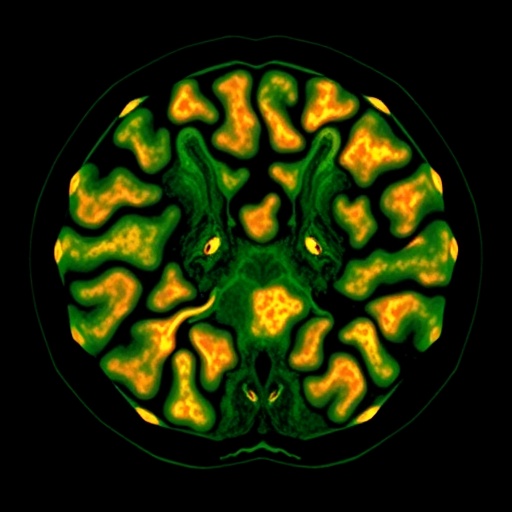

The broader implications of this research extend to other imaging modalities and disease areas. While CT scans and tumor detection form the immediate focus, the underlying generative methodologies are adaptable to modalities such as magnetic resonance imaging (MRI) and positron emission tomography (PET). Additionally, diseases with imaging-dependent diagnostics, like neurological disorders and cardiovascular conditions, could benefit from analogous synthetic data augmentation approaches. This versatility paves the way for a new paradigm in medical imaging research where generative models complement and enhance traditional data-driven techniques.

Importantly, the scalable infrastructure developed for synthetic tumor generation offers a template for future investigations into data augmentation in medicine. The framework supports high-throughput generation of annotated images, accelerating the pace of research and development in medical AI. This capacity to produce diverse, realistic training data at scale has the potential to democratize access to powerful diagnostic tools, particularly in resource-limited settings where collecting large annotated datasets is challenging or infeasible.

The integration of generative tumor synthesis with state-of-the-art tumor recognition algorithms exemplifies the synergy between artificial intelligence subfields. By combining generative modeling with discriminative classifiers, the study advances beyond isolated methodologies towards comprehensive, integrated AI systems. This holistic approach reflects a maturing field where different AI capabilities coalesce to tackle complex clinical problems, ultimately enhancing patient outcomes through improved diagnostic precision and early detection capabilities.

Collaboration between multidisciplinary teams was crucial to achieving the reported advancements. The study underscores the importance of combining expertise in medical imaging, oncology, machine learning, and clinical practice. Such collaboration ensures that developed algorithms are not only technically robust but are also clinically meaningful and aligned with real-world diagnostic challenges faced by radiologists. This integrated research paradigm fosters the translation of technological breakthroughs into practical healthcare solutions.

As exciting as these developments are, challenges remain on the path to widespread clinical adoption. Future work needs to address longitudinal validations across diverse patient cohorts, integration with electronic health record systems, and optimization for real-time clinical decision support. Additionally, continuous monitoring for potential model drift and ensuring adaptability to evolving clinical guidelines are essential for maintaining efficacy. Nevertheless, the foundation laid by this research marks a significant step forward in harnessing AI to augment human expertise in cancer diagnosis.

In conclusion, the use of large-scale generative models to synthesize tumor images marks a notable shift in medical image analysis. By generating high-quality synthetic tumors that enhance training datasets, the study reports significantly improved tumor recognition in CT imaging. If these gains carry over to clinical practice, they could benefit early detection, personalized cancer treatment strategies, and ultimately patient survival rates. As AI-driven methodologies continue to evolve, such research points to a future in which technology helps clinicians achieve greater diagnostic accuracy.

This research not only establishes a new benchmark for data augmentation in medical imaging but also opens avenues for further interdisciplinary innovation. The fusion of generative modeling with clinical oncology heralds a new era where synthetic data is a pivotal asset in combating complex diseases. As these technologies mature, their role in shaping the future of healthcare diagnostics and personalized medicine will undoubtedly grow, underscoring the transformative power of artificial intelligence in medicine.

Subject of Research: Generative tumor synthesis using computed tomography for enhanced tumor recognition

Article Title: Large-scale generative tumor synthesis in computed tomography images for improving tumor recognition

Article References:

Wu, L., Zhuang, J., Zhou, Y. et al. Large-scale generative tumor synthesis in computed tomography images for improving tumor recognition. Nat Commun 16, 11053 (2025). https://doi.org/10.1038/s41467-025-66071-6

Image Credits: AI Generated