As artificial intelligence (AI) becomes part of everyday life, people's interactions with AI systems are growing more complex and, in some cases, more emotionally charged. AI has traditionally been viewed as a cognitive tool that provides information and solves problems. Recent research from Waseda University in Japan takes a different angle. It applies attachment theory, a psychological model originally developed to explain human social bonds, to human-AI relationships. The work suggests that emotional dynamics similar to those in human interpersonal attachment may also shape how people relate to AI.

Attachment theory, first formulated in the mid-20th century, describes the emotional bonds people form with significant others. These bonds vary in security and are commonly characterized in terms of anxiety and avoidance. Researchers at Waseda University, led by Research Associate Fan Yang and Professor Atsushi Oshio, adapted this theory to examine how people experience AI in emotional terms. Their study, published in the journal Current Psychology in May 2025, comprises two pilot studies and one formal study that probe the dimensions of attachment in human-AI interactions and introduce a new measurement tool for this emerging field.

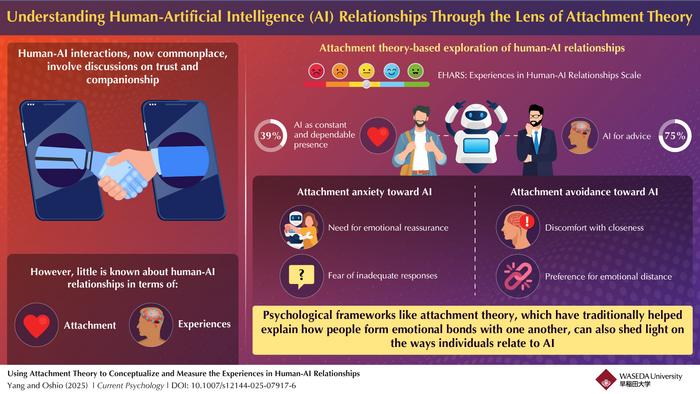

At the core of their methodology is a new self-report instrument, the Experiences in Human-AI Relationships Scale (EHARS). It is designed to quantify attachment-related tendencies toward AI, capturing both the need for emotional reassurance from AI and the inclination to keep emotional distance from it. The researchers developed and evaluated the scale through survey studies with a range of participants. A standardized measure of this kind moves the field beyond anecdotal accounts and gives future research a scientific foundation to build on.

One notable finding from the EHARS-based study concerns how often people turn to AI for support. Nearly 75% of participants reported turning to AI systems for advice, and 39% regarded AI as a stable, dependable presence in their lives. These figures suggest that, for many users, AI is more than a purely functional tool and may take on some supportive roles traditionally filled by other people.

The investigation distinguishes two primary dimensions of attachment toward AI: anxiety and avoidance. Attachment anxiety toward AI shows up as a desire for emotional reassurance and a heightened sensitivity to whether AI's responses are adequate. People high in this dimension may feel distressed when AI fails to give comforting or thorough answers. Attachment avoidance, by contrast, reflects discomfort with emotional closeness to AI and a preference for keeping emotional distance and limiting reliance on AI companionship. These two dimensions mirror well-established patterns in human attachment, highlighting psychological parallels between interpersonal relationships and human-machine interactions.

Importantly, the researchers emphasize that these findings do not necessarily mean people are forming genuine emotional attachments to AI comparable to human relationships. Rather, the study shows that psychological frameworks developed to understand human connections also have explanatory power for human-AI dynamics. This suggests that emotional responses to AI are systematic and measurable, which opens the door to AI designs that accommodate differing emotional needs instead of taking a one-size-fits-all approach.

This research is particularly relevant given the growing use of AI companion systems, therapeutic chatbots, and even romantic AI applications. Recognizing attachment-related tendencies can inform both the ethical and the functional design of AI interfaces. For example, AI systems could offer more empathetic engagement, including reassurance and emotional validation, to users high in attachment anxiety. For users high in attachment avoidance, AI could instead maintain clearer emotional boundaries, keeping interactions at a distance those users find comfortable.

This understanding also argues for transparency in AI systems, especially those that simulate emotional relationships. Developers and policymakers need to consider the risks of emotional overdependence and manipulation, particularly among vulnerable populations. Acknowledging the psychological impact of AI companionship makes it possible to put safeguards in place that balance beneficial support against the potential harms of emotionally entangled AI use.

The EHARS also has potential uses beyond academic research. Psychologists and developers could use the scale to assess users' emotional tendencies toward AI and adjust AI behavior and interaction strategies accordingly. Such adaptation could improve user experience and support mental well-being by aligning AI with the psychological predispositions of different individuals, a step toward more human-centered AI design.

Fan Yang describes the broader significance of the work: as AI systems become embedded in everyday routines, people increasingly expect them to provide not only information but also emotional support and companionship. Understanding the psychological mechanisms behind these interactions can guide more thoughtful integration of AI and foster technology that fits human emotional life. The research thus connects social psychology, technology design, and ethics.

The work also contributes to a broader discussion about technology's role in shaping human experience. By describing human-AI relationships in the vocabulary of attachment theory, it invites reconsideration of how emotional bonds with technology develop and influence behavior. It also raises the prospect that AI may increasingly act not only as a tool but also as a relational agent, with consequences for social life and psychology.

In conclusion, the Waseda University team's application of attachment theory to human-AI relationships advances our understanding of how people engage emotionally with AI in an increasingly digital world. Their findings highlight the complexity and variability of this engagement and point toward ethically informed, psychologically attuned AI systems. As AI continues to evolve, interdisciplinary research of this kind will be important for building technology that supports human well-being at emotional as well as cognitive levels.

Subject of Research: People

Article Title: Using attachment theory to conceptualize and measure the experiences in human-AI relationships

News Publication Date: 9-May-2025

Web References:

https://doi.org/10.1007/s12144-025-07917-6

References:

Fan Yang and Atsushi Oshio, “Using attachment theory to conceptualize and measure the experiences in human-AI relationships,” Current Psychology, May 9, 2025. DOI: 10.1007/s12144-025-07917-6

Image Credits:

Mr. Fan Yang, Waseda University, Japan

Keywords:

Psychological science, Clinical psychology, Mental health, Affective disorders, Artificial intelligence