Setting the stage for a new era of immersive displays, researchers are one step closer to mixing the real and virtual worlds in an ordinary pair of eyeglasses using high-definition 3D holographic images, according to a study led by Princeton University researchers.

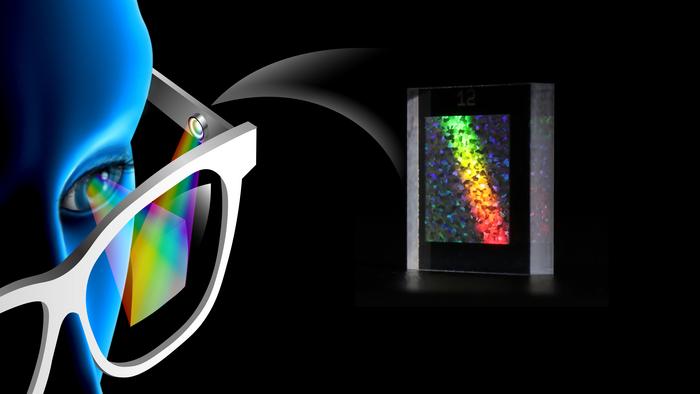

Credit: Illustration by Liz Sabol, photo by Nathan Matsuda

Holographic images have real depth because they are three-dimensional, whereas monitors merely simulate depth on a 2D screen. Because we see in three dimensions, holographic images could be integrated seamlessly into our normal view of the everyday world.

The result could be a virtual and augmented reality display with the potential to be truly immersive, the kind where you can move your head normally and never lose sight of the holographic images. “To get a similar experience using a monitor, you would need to sit right in front of a cinema screen,” said Felix Heide, assistant professor of computer science and senior author on a paper published April 22 in Nature Communications.

And you wouldn’t need to wear a screen in front of your eyes to get this immersive experience. The optical elements required to create these images are tiny and could potentially fit on a regular pair of glasses. Current virtual reality displays rely on a screen and require a full headset, which tends to be bulky because it must accommodate the screen and the hardware needed to operate it.

“Holography could make virtual and augmented reality displays easily usable, wearable and ultrathin,” said Heide. Such displays could transform how we interact with our environments, from getting directions while driving, to monitoring a patient during surgery, to accessing plumbing instructions during a home repair.

One of the most important challenges is image quality. Holographic images are created by a small chip-like device called a spatial light modulator. Until now, these modulators could only create images that are either small and clear or large and fuzzy. This tradeoff between image size and clarity results in a narrow field of view, too narrow to give the user an immersive experience. “If you look towards the corners of the display, the whole image may disappear,” said Nathan Matsuda, research scientist at Meta and co-author on the paper.
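The size-versus-viewing-angle tradeoff behind this problem is usually described in optics as a limit on étendue, the quantity named in the paper’s title. The sketch below is a rough illustration, not the authors’ model. It assumes an idealized modulator whose finest displayable pattern is a grating two pixels wide, and it uses a wavelength and pixel count chosen only for illustration (they are not from the paper). Shrinking the pixel pitch widens the diffraction angle, but it shrinks the display by the same factor, so their product is fixed by the number of pixels.

```python
import math

# Illustrative values only; they are NOT taken from the paper.
WAVELENGTH_M = 532e-9  # green laser light, in metres
NUM_PIXELS = 1920      # modulator pixels along one axis


def display_width_mm(pitch_m: float) -> float:
    """Physical width of the modulator along one axis, in millimetres."""
    return NUM_PIXELS * pitch_m * 1e3


def full_fov_deg(pitch_m: float) -> float:
    """Full diffraction angle of the modulator, in degrees.

    Assumes the finest pattern it can show is a grating with a two-pixel
    period, so the grating equation gives sin(half_angle) = wavelength / (2 * pitch).
    """
    return math.degrees(2 * math.asin(WAVELENGTH_M / (2 * pitch_m)))


def etendue_1d(pitch_m: float) -> float:
    """One-dimensional etendue proxy: width x sin(half_angle), in metres.

    Algebraically N * p * wavelength / (2 * p) = N * wavelength / 2,
    so it depends on the pixel count but not on the pixel pitch.
    """
    return NUM_PIXELS * pitch_m * (WAVELENGTH_M / (2 * pitch_m))


for pitch in (8e-6, 4e-6):
    print(f"pitch {pitch * 1e6:.0f} um: width {display_width_mm(pitch):.2f} mm, "
          f"FOV {full_fov_deg(pitch):.2f} deg, etendue {etendue_1d(pitch):.3e} m")
```

In this simplified picture, halving the pitch roughly doubles the field of view but halves the display width, leaving the product unchanged. Getting past that bound requires either more pixels or an extra optical element that expands étendue.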

Heide, Matsuda and Ethan Tseng, doctoral student in computer science, have created a device to improve image quality and potentially solve this problem. Along with their collaborators, they built a second optical element to work in tandem with the spatial light modulator. Their device filters the light from the spatial light modulator to expand the field of view while preserving the stability and fidelity of the image. It creates a larger image with only a minimal drop in quality.

Image quality has been a core challenge preventing practical applications of holographic displays, said Matsuda. “The research brings us one step closer to resolving this challenge,” he said.

The new optical element is like a very small custom-built piece of frosted glass, said Heide. The pattern etched into the frosted glass is the key. Designed using AI and optical techniques, the etched surface scatters light created by the spatial light modulator in a very precise way, pushing some elements of an image into frequency bands that are not easily perceived by the human eye. This improves the quality of the holographic image and expands the field of view.

Still, hurdles to making a working holographic display remain. The image quality isn’t yet perfect, said Heide, and the fabrication process for the optical elements needs to be improved. “A lot of technology has to come together to make this feasible,” said Heide. “But this research shows a path forward.”

The paper, “Neural Etendue Expander for Ultra-Wide-Angle High-Fidelity Holographic Display,” was published April 22 in Nature Communications. In addition to Heide and Tseng, co-authors from Princeton include Seung-Hwan Baek and Praneeth Chakravarthula. In addition to Matsuda, co-authors from Meta Research are Grace Kuo, Andrew Maimone, Florian Schiffers and Douglas Lanman. Qiang Fu and Wolfgang Heidrich from the Visual Computing Center at King Abdullah University of Science and Technology in Saudi Arabia also contributed. The work was supported by Princeton University’s Imaging and Analysis Center and the King Abdullah University of Science and Technology’s Nanofabrication Core Lab.

Journal

Nature Communications

Method of Research

Experimental study

Subject of Research

Not applicable

Article Title

Neural étendue expander for ultra-wide-angle high-fidelity holographic display

Article Publication Date

22-Apr-2024