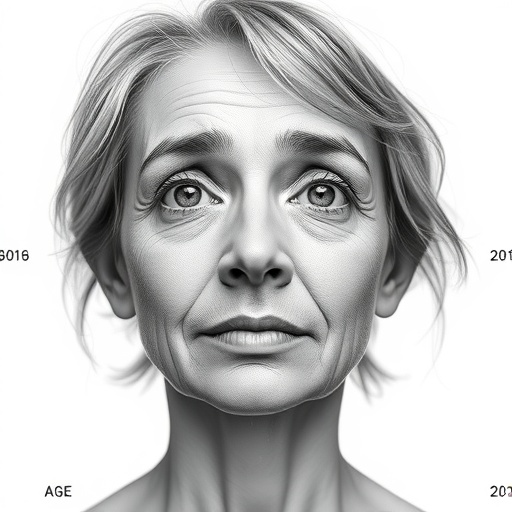

A study by Ji, L., Liao, S., and Hayward, W.G., published in BMC Psychology in 2025, examines how emotional expression, face age, and observer age interact to shape judgments of age. The work shows that age judgments are far from objective. They are influenced by the emotion displayed on a face and by the age groups of both the person pictured and the person judging. The findings point to a psychological mechanism with implications ranging from everyday social interaction to biometric and AI facial recognition technologies.

Humans readily glean cues about others’ ages, an ability that matters for social communication, evolutionary strategies, and legal and societal roles. Yet this seemingly straightforward task is shaped by a range of cognitive biases and perceptual processes. Age estimation is conventionally thought to depend primarily on physical attributes such as skin texture, wrinkles, and hair color. Ji and colleagues complicate this view by showing that facial expressions substantially modulate perceived age, and that this modulation is neither uniform nor fixed.

The central finding is a dual modulation effect. Perceived age depends not only on the emotional expression shown (happiness, anger, sadness, or neutrality) but also on the actual age of the face being judged and on the age group of the participant making the judgment. Considering these three factors together gives a more complete picture of social cognition than examining any one of them in isolation. Younger observers estimated the ages of emotional faces differently from older observers, and, depending on context, an expression could make a youthful face appear older or an older face appear younger.

Methodologically, the study used a set of facial images stratified by age group, with each face showing a controlled range of emotions. Participants spanning a broad age range estimated the ages of these faces under standardized conditions. Statistical and psychophysical analyses revealed patterns that went beyond random estimation error, pointing instead to a systematic cognitive bias driven by the emotional salience of the face.

A key result concerns happiness. A happy expression, often associated with “youthful” features such as brighter eyes and fuller cheeks, sometimes made faces appear younger, but this effect depended on the observer’s age. Conversely, expressions of sadness or anger tended to increase perceived age within specific age brackets. Together, these results suggest that emotional valence acts as a filter on age perception rather than merely as an affective signal. Disentangling these effects required controlling for potential confounds such as ethnicity, gender, and baseline attractiveness, which the researchers accounted for.

From a neuroscientific perspective, these findings may relate to differential activity in the fusiform face area and the amygdala, brain regions involved in face processing and emotional evaluation, respectively. The study offers indirect support for the hypothesis that the brain’s appraisal of age is closely tied to affective processing, possibly through a shared neural coding pathway that could serve both adaptive and social-signaling functions.

The research also has practical implications for how artificial intelligence interprets human faces. Many current AI age-estimation algorithms rely on static feature extraction and do not account for emotional expression or for the demographic profile of the person viewing the image. Incorporating an understanding of emotional modulation into such systems could improve their accuracy and reduce bias in facial recognition applications such as security, healthcare diagnostics, and personalized marketing.

This research also prompts a reevaluation of age-related social biases. In professional or interpersonal settings, misjudging someone’s age because of their emotional expression could reinforce unfair stereotypes or skew decisions. Awareness of this bias could inform human resources practices and foster empathy by normalizing the idea that apparent age shifts with emotional state.

Moreover, this study contributes to the broader psychological discourse on how humans interpret visual stimuli. Emotional expressions do not exist in a vacuum but interact dynamically with other facial metrics. This integrative approach encourages future studies to consider multidimensional analyses of face perception, extending beyond age to attributes like trustworthiness, health, and personality, all of which are partly inferred from facial cues combined with emotional information.

Interestingly, the modulation effect was found to be bidirectional and non-linear: the same emotional expression does not exert the same effect across all age ranges. For instance, young participants might perceive a neutral face as older than a happy face, while older adults might judge the same pair differently. This complexity suggests that human perception is more context-sensitive than previously acknowledged, possibly reflecting adaptive mechanisms tuned to social environments and life stages.

In conclusion, the study by Ji, L., Liao, S., and Hayward, W.G. changes how we conceptualize age estimation by highlighting the roles of emotional expression and observer age. It opens the way to new applications in technology and deepens our understanding of the mental operations underlying everyday social judgments. As digital technologies increasingly mediate human interaction through virtual avatars and AI interfaces, understanding these biases becomes essential for fair and authentic communication.

The study exemplifies interdisciplinary research at the intersection of affective science, perceptual psychology, and technology. By showing how emotions color the age we read in a face, it highlights both the complexity of visual perception and the subtle influences that shape our everyday judgments of others.

Subject of Research: The interaction between emotional facial expressions, face age, and observer age in modulating age perception.

Article Title: The effect of emotional expression on age estimates is modulated by face age and participant age.

Article References: Ji, L., Liao, S. & Hayward, W.G. The effect of emotional expression on age estimates is modulated by face age and participant age. BMC Psychol 13, 1035 (2025). https://doi.org/10.1186/s40359-025-03353-0

Image Credits: AI Generated