Researchers have unveiled a conformal surface-electromyography (sEMG) platform that precisely maps facial muscle activity and predicts human facial expressions, an advance with potential impact on human-computer interaction, neuromuscular diagnostics, and wearable technology. Described in a study published in npj Flexible Electronics, the platform captures facial muscle signals non-invasively and interprets them for applications spanning healthcare, augmented reality, and communication technologies.

At the heart of this advancement lies the integration of flexible electronics with cutting-edge electrophysiological techniques, enabling the measurement of subtle muscular activations on the human face with an unprecedented level of spatial resolution and sensitivity. Traditional electromyography methods often rely on rigid electrodes that poorly conform to the curved and dynamic surfaces of the face, leading to signal degradation and discomfort. By contrast, the new conformal sEMG platform employs ultrathin, stretchable electronic circuits that intimately adhere to the skin, maintaining close contact through facial movements without impeding natural expression.

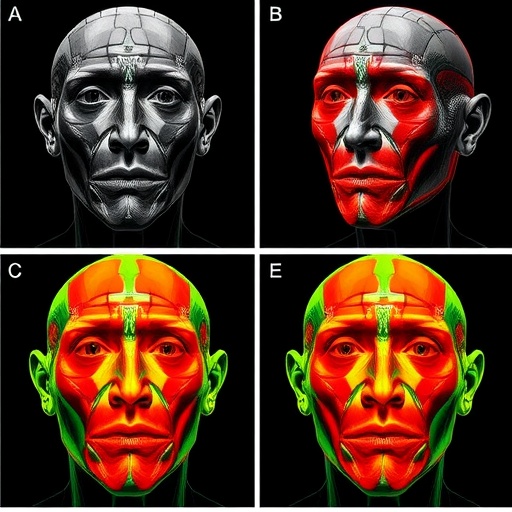

The wearable system is built around a matrix of conductive sensors made from biocompatible materials engineered to flex and stretch with facial contours. These materials allow stable, high-fidelity recordings over extended periods, overcoming one of the major challenges in capturing electromyographic data from the complex musculature responsible for human expressions. The result is a robust platform that can continuously monitor the electrical activity of facial muscles across a wide range of expressions.

Interpreting the large volume of data collected by the sensor array required dedicated algorithms. The research team developed machine learning models that parse nuanced muscle activation patterns and correlate them with specific facial expressions and microexpressions. These predictive models not only classify expressions but also anticipate subtle transitions between them, offering real-time insight into the user’s emotional state and communicative intent.
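To make the idea of decoding expressions from muscle activation patterns concrete, here is a minimal Python sketch. It is not the study's model: the article does not specify the features or classifier used, so this example assumes a simple root-mean-square (RMS) amplitude per channel and a nearest-centroid classifier. The two-channel layout, the labels, and all sample values are hypothetical toy data.

```python
import math

def rms(samples):
    """Root-mean-square amplitude of one channel's samples."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))

def features(recording):
    """One RMS value per channel; `recording` is a list of channels."""
    return [rms(channel) for channel in recording]

def train_centroids(examples):
    """Average the feature vectors of each label into one centroid."""
    sums, counts = {}, {}
    for label, recording in examples:
        f = features(recording)
        acc = sums.setdefault(label, [0.0] * len(f))
        for i, value in enumerate(f):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc]
            for label, acc in sums.items()}

def classify(recording, centroids):
    """Return the label whose centroid is closest in feature space."""
    f = features(recording)
    return min(centroids, key=lambda label: math.dist(f, centroids[label]))

# Hypothetical toy data: channel 0 sits over the cheek, channel 1 over the brow.
examples = [
    ("smile",    [[0.8, -0.9, 0.7, -0.8], [0.1, -0.1, 0.1, -0.1]]),
    ("surprise", [[0.1, -0.1, 0.1, -0.1], [0.9, -0.8, 0.9, -0.7]]),
]
centroids = train_centroids(examples)
prediction = classify([[0.7, -0.7, 0.6, -0.8], [0.2, -0.1, 0.1, -0.2]], centroids)
print(prediction)
```

A real system would likely use many more channels, band-pass filtered signals, overlapping time windows, and a trained model; the sketch only shows the overall shape of a features-then-classifier pipeline.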

This capability opens up exciting possibilities for enhancing interactive technologies. For example, augmented and virtual reality systems could employ this platform to mirror a user’s emotion in an avatar more precisely, leading to richer and more authentic virtual social interactions. Similarly, assistive communication devices for individuals with speech impairments could harness facial muscle signals to generate speech or commands, significantly improving quality of life and independence.

From a clinical perspective, the conformal sEMG technology presents promising avenues for diagnosis and rehabilitation. Conditions that affect facial nerve function, such as Bell’s palsy or post-stroke hemiplegia, could be monitored continuously through easy-to-wear, lightweight facial patches. This continuous data stream would give clinicians detailed insight into muscle activation dynamics and recovery progress, potentially allowing therapies to be tailored more effectively. Moreover, the platform can be adapted for biofeedback applications, helping patients retrain impaired muscles by providing immediate feedback on their muscle activity during rehabilitation exercises.

The study’s experimental protocols demonstrated that the platform can capture distinct activation patterns across multiple facial muscles simultaneously. The high spatial density of sensors allowed fine-grained mapping of muscles such as the zygomaticus major, orbicularis oculi, and frontalis, which drive characteristic expressions such as smiling, squinting, and the raised brows of surprise. The researchers validated these measurements against standardized facial expression datasets and showed compelling accuracy in decoding expressions from sEMG signals alone.
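As a rough illustration of what spatial mapping from a sensor grid can look like, the Python sketch below turns per-channel recordings into a normalized activation map and locates the most active electrode. The grid shape, the mean-absolute-value amplitude measure, and the sample values are assumptions made for this example; the article does not describe the study's actual mapping procedure.

```python
def mean_abs(samples):
    """Mean absolute value, a simple amplitude estimate for one channel."""
    return sum(abs(x) for x in samples) / len(samples)

def activation_map(grid):
    """grid[r][c] holds one channel's samples; returns amplitudes scaled to [0, 1]."""
    raw = [[mean_abs(channel) for channel in row] for row in grid]
    peak = max(max(row) for row in raw)
    if peak == 0:
        return raw  # silent recording: nothing to normalize
    return [[value / peak for value in row] for row in raw]

def hotspot(amap):
    """(row, col) of the electrode with the strongest activation."""
    cells = [(r, c) for r, row in enumerate(amap) for c in range(len(row))]
    return max(cells, key=lambda rc: amap[rc[0]][rc[1]])

# Hypothetical 2x2 electrode patch with two samples per channel.
grid = [
    [[0.1, -0.1], [0.2, -0.2]],
    [[0.4, -0.4], [0.1, -0.1]],
]
amap = activation_map(grid)
peak_cell = hotspot(amap)
print(amap, peak_cell)
```

Relating a hotspot to a specific muscle would additionally require knowing where each electrode sits on the face, which this sketch does not model.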

Critically, this technology addresses longstanding obstacles related to skin-electrode interface stability and motion artifacts by leveraging flexible substrates and stretchable interconnects. This innovation means that even spontaneous facial movements and speech-related articulations do not compromise signal integrity, which has been a persistent issue in earlier rigid sEMG systems. The result is a versatile, durable platform suitable for real-world, day-long use, broadening the potential for continuous emotion and expression monitoring beyond controlled laboratory environments.

Such sensitive bioelectrical monitoring also raises privacy concerns. The authors highlight the necessity for ethical frameworks governing the collection and use of facial biometric data to safeguard user autonomy and prevent misuse. Ensuring transparency and consent in deploying these platforms will be paramount, especially as integration into consumer electronics becomes increasingly feasible.

Looking forward, the research team envisions extending this technique to more complex decoding of affective states, integrating physiological data streams such as heart rate variability and galvanic skin response. By fusing multi-modal biometric data, future iterations could achieve holistic emotional and cognitive monitoring, with transformative implications across mental health diagnostics, personalized entertainment, and human-robot interaction.

Pairing the platform with neural networks designed for temporal sequence analysis could sharpen its predictions further. Capturing how expressions evolve over time, rather than treating them as isolated snapshots, could enable anticipatory human-machine interfaces that respond proactively to subtle emotional cues. This trajectory reflects an interdisciplinary convergence of materials science, bioelectronics, computer vision, and affective computing.

In addition to practical applications, the ability to non-invasively decode facial muscle activations offers unprecedented windows into fundamental neuroscience. Understanding the linkages between neural commands, muscular responses, and emotional expression can inform psychological models of empathy, social cognition, and identity formation. The conformal sEMG platform thus promises not only technological impact but also significant contributions to basic science.

The success of this research rests on a synthesis of flexible device engineering and machine intelligence, grounded in an appreciation of the human face as a complex communicative organ. It shows how close attention to biomechanical realities, combined with computational methods, can yield innovations with broad societal reach. By translating invisible bioelectrical signals into actionable insights, the platform points toward a new generation of wearable sensing and affective computing.

As the field rapidly advances, continued collaboration between engineers, neuroscientists, ethicists, and clinicians will be essential to unlock the full potential of conformal surface electromyography. The promising results in facial muscle mapping and expression prediction are just the beginning of what such technologies might enable. From enriching virtual presence to enhancing healthcare outcomes, the ripple effects of this research will likely be felt across multiple domains.

In sum, this pioneering conformal sEMG platform presents a compelling embodiment of next-generation wearable bioelectronics. Its innovative approach to capturing, interpreting, and predicting complex facial muscular activity with high fidelity and comfort charts an exciting path forward in human-machine interfaces, personalized medicine, and emotional intelligence technologies. This landmark study sets a new standard and invites the scientific community to explore increasingly sophisticated biosensing solutions integrated seamlessly with daily life.

Subject of Research: Facial muscle mapping and expression prediction using a conformal surface-electromyography platform

Article Title: Facial muscle mapping and expression prediction using a conformal surface-electromyography platform

Article References:

Man, H., Funk, P.F., Ben-Dov, D. et al. Facial muscle mapping and expression prediction using a conformal surface-electromyography platform. npj Flex Electron 9, 71 (2025). https://doi.org/10.1038/s41528-025-00453-0