Packing objects into a confined space, such as loading a suitcase for a summer vacation, is a hard computational problem for robots. Humans draw on visual and geometric intuition to arrange items efficiently without damaging them, but transferring that decision-making to robots has proven difficult. A robot must consider a very large number of potential actions and constraints at once, which can easily overwhelm conventional planning algorithms. Researchers at MIT and NVIDIA Research have now developed an algorithm that substantially accelerates this planning process.

Central to this advancement is a new algorithm called cuTAMP, which stands for CUDA-accelerated Task and Motion Planning. Unlike traditional methods, which evaluate potential robot movements sequentially, cuTAMP uses modern graphics processing units (GPUs) to simulate and optimize thousands of candidate manipulation strategies at once. This parallel approach turns a problem that might take minutes or hours into one solvable within seconds. By processing large batches of potential actions together, the robot can “think ahead,” narrowing down the solution space quickly and reliably.

At its core, the packing problem for robots is framed as a task and motion planning (TAMP) challenge. Task planning involves deciding on a sequence of high-level actions, such as picking up an object or placing it in a particular position. Motion planning, on the other hand, determines the precise parameters governing these actions—joint angles, gripper orientation, and arm trajectories—that enable the robot to execute the plan safely and efficiently. The complexity emerges from the expansive number of possible sequences and configurations, especially when considerations like collision avoidance, fragile object handling, and packing order constraints are imposed.
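The split between task planning and motion planning can be made concrete with a small data structure. The sketch below is illustrative only: the `Action` class, the parameter names, and `packing_skeleton` are hypothetical and are not taken from cuTAMP. It shows a task-level plan as a sequence of actions whose continuous parameters are still unbound and would be filled in by motion planning.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Action:
    """One high-level step chosen by the task planner (hypothetical type)."""
    name: str                     # e.g. "pick" or "place"
    obj: str                      # object the action applies to
    free_params: Tuple[str, ...]  # continuous values motion planning must choose


def packing_skeleton(objects: List[str]) -> List[Action]:
    """Build a pick-then-place sequence for each object, in the given order."""
    plan: List[Action] = []
    for obj in objects:
        plan.append(Action("pick", obj, ("grasp_pose", "arm_trajectory")))
        plan.append(Action("place", obj, ("placement_pose", "arm_trajectory")))
    return plan


def unbound_parameters(plan: List[Action]) -> List[Tuple[int, str, str]]:
    """List every (step index, action name, parameter) still waiting for a value."""
    return [(i, a.name, p) for i, a in enumerate(plan) for p in a.free_params]


skeleton = packing_skeleton(["book", "mug"])
for step in unbound_parameters(skeleton):
    print(step)
```

Even this two-object example leaves eight continuous quantities to choose, and each one is subject to collision and reachability constraints. That is why the search space grows so quickly as more objects and ordering choices are added.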

Conventional TAMP strategies often rely on sequential sampling and evaluation of individual actions, a method that is not only slow but prone to exploring many fruitless possibilities in the vast search space. The cuTAMP algorithm addresses this inefficiency by combining a guided sampling strategy with parallelized optimization techniques. During the sampling phase, instead of selecting candidate solutions randomly, the algorithm intelligently restricts its exploration to areas of the solution space where constraints are more likely to be satisfied. This leads to an initial pool of promising candidate solutions that provide a strong foundation for further refinement.
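A toy example helps show why restricting the sampling region matters. The sketch below is not cuTAMP's sampler; the container size, workspace size, and item size are made-up values, and the only constraint is that a rectangular item lies fully inside a rectangular container. It compares sampling placements uniformly over the whole workspace against sampling only from the region where that constraint holds by construction.

```python
import random

# All dimensions are hypothetical and chosen only for illustration.
CONTAINER = (1.0, 1.0)   # width, height of the box being packed
WORKSPACE = (3.0, 3.0)   # width, height of the area the robot can reach
ITEM = (0.4, 0.2)        # width, height of the item to place


def inside_container(x, y, item=ITEM, box=CONTAINER):
    """True if an item centered at (x, y) lies fully inside the container."""
    w, h = item
    return w / 2 <= x <= box[0] - w / 2 and h / 2 <= y <= box[1] - h / 2


def sample_uniform(rng, n):
    """Naive sampling: item centers drawn anywhere in the workspace."""
    return [(rng.uniform(0, WORKSPACE[0]), rng.uniform(0, WORKSPACE[1]))
            for _ in range(n)]


def sample_guided(rng, n, item=ITEM, box=CONTAINER):
    """Guided sampling: centers drawn only where containment already holds."""
    w, h = item
    return [(rng.uniform(w / 2, box[0] - w / 2),
             rng.uniform(h / 2, box[1] - h / 2)) for _ in range(n)]


def feasible_fraction(samples):
    """Share of samples that satisfy the containment constraint."""
    return sum(inside_container(x, y) for x, y in samples) / len(samples)


rng = random.Random(0)
naive = feasible_fraction(sample_uniform(rng, 1000))
guided = feasible_fraction(sample_guided(rng, 1000))
print(f"naive: {naive:.3f}, guided: {guided:.3f}")
```

In this setup only about 5% of the workspace area is a valid placement, so most naive samples are wasted, while every guided sample satisfies the containment constraint. Other constraints, such as avoiding collisions between items, would still need to be handled by later refinement.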

Once a diverse set of feasible samples is generated, cuTAMP concurrently optimizes all candidates by evaluating their “cost”—a composite metric reflecting factors such as collision potential, adherence to robot motion constraints, and fulfillment of user-specified objectives. This parallel optimization iteratively enhances these candidates, pruning the less viable options and zeroing in on high-quality plans. Together, these phases enable the algorithm to comprehensively yet efficiently search through a staggering array of possibilities, significantly reducing the time required to identify actionable plans.
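The optimize-and-prune loop can be sketched on a deliberately small problem. Everything below is an assumption made for illustration: two items on a one-dimensional shelf, a hand-written penalty cost, finite-difference gradients, and a halving schedule for pruning. cuTAMP itself runs on a GPU; here the whole batch is simply stepped in a Python loop, which stands in for the per-candidate work a GPU would perform in parallel.

```python
import random

SHELF = 1.0          # shelf length (hypothetical units)
WIDTHS = (0.4, 0.3)  # widths of the two items to place


def cost(x):
    """Penalty for leaving the shelf plus penalty for the items overlapping."""
    total = 0.0
    for xi, w in zip(x, WIDTHS):
        total += max(0.0, w / 2 - xi) ** 2          # sticks out on the left
        total += max(0.0, xi + w / 2 - SHELF) ** 2  # sticks out on the right
    min_gap = (WIDTHS[0] + WIDTHS[1]) / 2           # center distance with no overlap
    total += max(0.0, min_gap - abs(x[0] - x[1])) ** 2
    return total


def gradient(x, eps=1e-6):
    """Central finite-difference gradient of the cost."""
    grad = []
    for i in range(len(x)):
        hi, lo = list(x), list(x)
        hi[i] += eps
        lo[i] -= eps
        grad.append((cost(hi) - cost(lo)) / (2 * eps))
    return grad


def optimize_batch(candidates, steps=200, lr=0.1, prune_every=50):
    """Step every candidate together; periodically keep the better half."""
    batch = [list(c) for c in candidates]
    for step in range(1, steps + 1):
        batch = [[xi - lr * gi for xi, gi in zip(x, gradient(x))] for x in batch]
        if step % prune_every == 0 and len(batch) > 1:
            batch.sort(key=cost)
            batch = batch[: max(1, len(batch) // 2)]
    return min(batch, key=cost)


rng = random.Random(0)
initial = [(rng.uniform(0, SHELF), rng.uniform(0, SHELF)) for _ in range(64)]
best = optimize_batch(initial)
print(best, cost(best))
```

A cost of zero here means both items sit on the shelf without overlapping. Starting from many candidates makes the search less sensitive to a single poor initial guess, and pruning concentrates later effort on the candidates that are closest to satisfying all constraints.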

Harnessing specialized hardware accelerators like GPUs is fundamental to this achievement. GPUs are uniquely suited for highly parallel workloads, allowing cuTAMP to process hundreds or thousands of solution candidates concurrently with roughly the same computational expense as evaluating a single one sequentially. This hardware-software synergy is what makes such rapid planning feasible in real-world applications. When tested on simulated Tetris-like packing tasks, the algorithm consistently produced robust, collision-free packing solutions in just a few seconds, outperforming traditional sequential planners that struggled with efficiency.

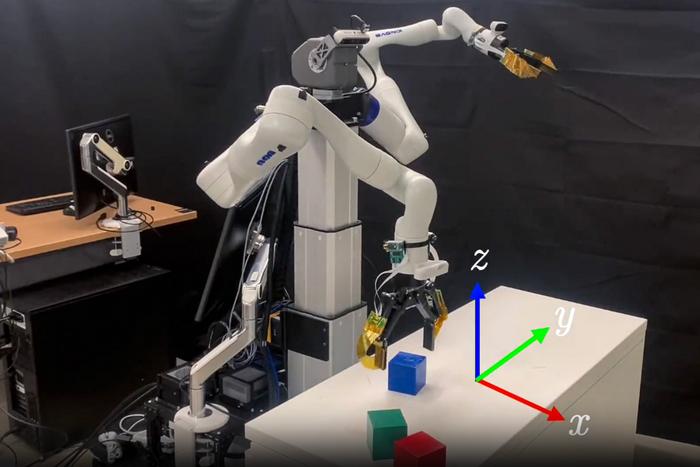

Beyond simulations, cuTAMP’s capabilities have been validated on physical robotic systems as well. Experiments employing a robotic arm at MIT and a humanoid robot at NVIDIA demonstrated its ability to find executable packing plans within half a minute—fast enough to be practical for industrial and logistical operations. Importantly, cuTAMP is not reliant on machine learning or training data; it is model-based and rule-driven, which means it can be deployed immediately to novel problems without retraining. This adaptability greatly enhances its versatility across diverse robotic platforms and environments.

The implications of this development are significant, especially for industries where robotic efficiency and safety are paramount. Factories and warehouses often deal with heterogeneous objects of varying shapes and fragility packed into constrained spaces. cuTAMP enables robots to plan complex packing maneuvers quickly and precisely, which can reduce downtime and operational costs while lowering the risk of object damage or workspace collisions. Producing high-quality plans quickly matters in real-world settings, where decision latency directly affects productivity and profitability.

Furthermore, the general design of cuTAMP allows it to extend beyond packing tasks. The algorithmic framework is flexible enough to incorporate different robotic skills and manipulate tools, potentially automating a wide variety of multi-step tasks. The researchers envision incorporating advances in natural language processing and vision-language models into cuTAMP’s framework, enabling robots to interpret voice commands and visual inputs to generate and execute detailed plans on the fly. Such integration would mark a substantial leap toward truly autonomous robotic operation capable of interacting intuitively with humans.

In summary, this novel algorithmic approach promises to redefine robotic planning by shrinking computational timelines from impractical to immediate. By leveraging parallel computation through GPUs and combining intelligent sampling with simultaneous optimization, cuTAMP equips robots with the ability to rapidly analyze thousands of possible action sequences and distill them into reliable, collision-free packing plans. This breakthrough not only opens doors to more efficient industrial automation but also lays groundwork for more flexible and responsive robotic agents across myriad applications.

This research will be presented at the Robotics: Science and Systems Conference and represents a collaborative effort spanning MIT, NVIDIA Research, and the University of Utah. It showcases the power of interdisciplinary cooperation, combining expertise in computer science, robotics, and advanced computational hardware to tackle longstanding challenges in robotic manipulation. As robotic systems continue to evolve, innovations like cuTAMP offer a glimpse of a future where robots assist humans in complex physical tasks with greater speed and precision.

The work is supported by the National Science Foundation, the Air Force Office of Scientific Research, the Office of Naval Research, MIT Quest for Intelligence, NVIDIA, and the Robotics and Artificial Intelligence Institute. Their investment highlights the growing recognition of robotics as an essential component of technological progress and economic competitiveness worldwide.

Subject of Research:

Robotic task and motion planning algorithms for efficient packing and manipulation.

Article Title:

CUDA-Accelerated Task and Motion Planning Accelerates Robotic Packing Solutions

News Publication Date:

Not explicitly stated; inferred as November 2024 based on publication details.

Web References:

https://arxiv.org/pdf/2411.11833

https://arxiv.org/abs/2411.08253

References:

Garrett, C., Shen, W., Kumar, N., Goyal, A., Hermans, T., Kaelbling, L. P., Lozano-Pérez, T., Ramos, F. (2024). CUDA-Accelerated Task and Motion Planning. ArXiv preprint arXiv:2411.11833.

Image Credits:

MIT