Quantum computing stands at the forefront of technological innovation, promising to change how we tackle some of the complex problems that conventional computers struggle with. Unlike classical machines, which rely on bits that are always either 0 or 1, quantum computers use quantum bits, or qubits, that exploit the principles of quantum mechanics. This difference can speed up certain computations dramatically, in some cases exponentially, but it also introduces a level of complexity, and fragility, that researchers worldwide are racing to overcome.

The fundamental challenge with qubits lies in their inherent instability. These quantum bits are exquisitely sensitive to environmental disturbances, such as electromagnetic noise or temperature fluctuations, which can cause them to lose coherence and thus the quantum information they carry. This phenomenon, known as decoherence, drastically limits the practical size and computational power of current quantum processors. Stabilizing these qubits is paramount to progressing beyond experimental setups to fully operational quantum machines.

Jacob Benestad, a recent PhD graduate from the Department of Physics at the Norwegian University of Science and Technology (NTNU), has been at the vanguard of this effort. His doctoral research examined the physics that governs qubit behavior and how qubits can be held in the delicate conditions that preserve their quantum states long enough to perform useful calculations. Work of this kind is essential if quantum computing technology is to mature.

Quantum computers differ from traditional devices because qubits can exist not only in the states 0 and 1 but also in a superposition of both. Quantum algorithms exploit superposition, together with interference, to process a vast number of possibilities at once. Qubits can also become entangled, meaning that measurements on them give correlated outcomes that cannot be explained by treating each qubit separately, no matter how far apart the qubits are. These correlations do not transmit information instantaneously, but together with superposition they enable certain calculations that are infeasible for classical computers.
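To make the entanglement point concrete, here is a minimal Python sketch, purely illustrative and not taken from the research described here, that samples joint measurements of the two-qubit Bell state (|00⟩ + |11⟩)/√2 using the Born rule (outcome probability equals squared amplitude). The names `BELL_AMPLITUDES`, `measure_bell_pair` and `sample_pairs` are hypothetical. Each qubit on its own reads 0 or 1 at random, yet the two readouts always agree; because neither side can choose its own outcome, the correlation cannot be used to send a message.

```python
import random

# Hypothetical illustration: amplitudes of the Bell state (|00> + |11>) / sqrt(2),
# keyed by the two-bit measurement outcome.
BELL_AMPLITUDES = {"00": 2 ** -0.5, "01": 0.0, "10": 0.0, "11": 2 ** -0.5}


def measure_bell_pair(rng: random.Random) -> str:
    """Sample one joint outcome; Born rule: probability = |amplitude|^2."""
    outcomes = list(BELL_AMPLITUDES)
    weights = [abs(a) ** 2 for a in BELL_AMPLITUDES.values()]
    return rng.choices(outcomes, weights=weights, k=1)[0]


def sample_pairs(shots: int, seed: int = 1) -> list[str]:
    """Repeat the joint measurement `shots` times with a reproducible generator."""
    rng = random.Random(seed)
    return [measure_bell_pair(rng) for _ in range(shots)]


if __name__ == "__main__":
    results = sample_pairs(1000)
    first_qubit_ones = sum(r[0] == "1" for r in results)
    agreements = sum(r[0] == r[1] for r in results)
    print(f"first qubit read 1 in {first_qubit_ones}/1000 shots")  # close to half
    print(f"qubits agreed in {agreements}/1000 shots")  # every shot agrees
```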

Despite this quantum advantage, the readout process (the step where the quantum state is measured and translated into usable output) is inherently probabilistic. A measurement collapses the superposition into a single definite outcome, chosen at random with probabilities set by the qubit's state. Because of this randomness, a computation often has to be repeated many times to extract a reliable answer, which erodes quantum computing's efficiency on problems where all possible results are equally important.
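The cost of this randomness can be sketched in a few lines of Python. Assuming a hypothetical qubit state whose probability of reading 1 is 0.3, the illustrative function `estimate_p1` simulates repeated single-shot readouts and reports the estimated probability together with its binomial standard error. The error shrinks only as one over the square root of the number of shots, so each extra digit of precision costs roughly a hundred times more repetitions.

```python
import math
import random


def estimate_p1(p1_true: float, shots: int, seed: int = 0) -> tuple[float, float]:
    """Simulate `shots` single-shot readouts of a qubit whose Born-rule probability
    of reading 1 is `p1_true`; return the estimate and its standard error."""
    rng = random.Random(seed)
    ones = sum(rng.random() < p1_true for _ in range(shots))  # count of "1" readouts
    p_hat = ones / shots
    # Binomial standard error: shrinks only as 1 / sqrt(shots).
    std_err = math.sqrt(p_hat * (1 - p_hat) / shots)
    return p_hat, std_err


if __name__ == "__main__":
    # Hypothetical state sqrt(0.7)|0> + sqrt(0.3)|1>, so P(1) = 0.3.
    for shots in (10, 100, 1000, 10000):
        p_hat, err = estimate_p1(0.3, shots)
        print(f"{shots:>6} shots: P(1) ~ {p_hat:.3f} +/- {err:.3f}")
```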

Quantum computers are expected to excel at specialized optimization or simulation problems where the solution is a single correct answer among an astronomical number of possibilities. Tasks such as molecular modeling, cryptographic code-breaking, and large-scale optimization stand to benefit most from quantum computational power, provided the qubits involved maintain coherence long enough for the computations to complete.

A major hurdle remains the qubit's sensitivity to environmental interference. Jeroen Danon, a professor at NTNU's Department of Physics and Benestad's mentor, points out that even minuscule external disturbances can spoil the fragile quantum states. Countering them calls for techniques that actively monitor the qubits and correct for these disturbances in real time, reducing errors and prolonging the time over which the qubits remain usable.

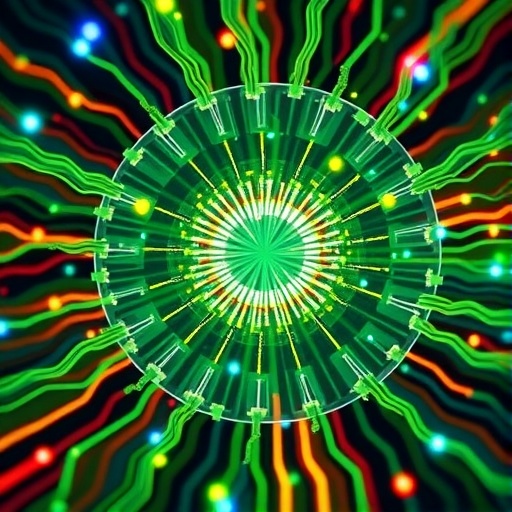

In this pursuit, Benestad and an international team developed a new real-time feedback mechanism built around a field-programmable gate array (FPGA) controller. The controller continuously tracks qubit frequencies and adjusts them on the fly to counteract environmental noise. In effect, it acts like an adaptive tuner, fine-tuning each qubit's frequency to keep it on resonance despite external perturbations.
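The principle of such a feedback loop can be illustrated with a deliberately simplified Python model. It is not the controller from the study: the random-walk drift, the Gaussian measurement noise, the proportional update rule, the function name `simulate_tracking` and all parameter values are assumptions chosen for illustration, and the correction is applied to a control frequency rather than to any particular hardware knob. In the experiment the feedback runs on the FPGA controller; here it is ordinary Python.

```python
import random


def simulate_tracking(
    steps: int, drift_khz: float, gain: float, noise_khz: float = 0.5, seed: int = 0
) -> tuple[float, float]:
    """Return mean |detuning| in kHz without and with a simple feedback loop.

    Hypothetical model: the qubit frequency offset does a Gaussian random walk
    (a stand-in for environmental noise). The open-loop controller never retunes;
    the closed-loop controller measures the offset with Gaussian noise and moves
    its frequency a fraction `gain` of the way toward that estimate.
    """
    rng = random.Random(seed)
    qubit_offset = 0.0  # true qubit frequency minus nominal, kHz
    control = 0.0       # closed-loop control frequency minus nominal, kHz
    open_total = closed_total = 0.0
    for _ in range(steps):
        qubit_offset += rng.gauss(0.0, drift_khz)  # drift since the last cycle
        # Detuning experienced before this cycle's correction is applied.
        open_total += abs(qubit_offset)             # open loop stays at nominal
        closed_total += abs(qubit_offset - control)
        estimate = qubit_offset + rng.gauss(0.0, noise_khz)  # noisy frequency estimate
        control += gain * (estimate - control)      # proportional retuning step
    return open_total / steps, closed_total / steps


if __name__ == "__main__":
    open_err, closed_err = simulate_tracking(steps=2000, drift_khz=1.0, gain=0.8)
    print(f"mean |detuning| without tracking: {open_err:.1f} kHz")
    print(f"mean |detuning| with tracking:    {closed_err:.1f} kHz")
```

The gain sets the trade-off in this toy model: a value near 1 follows drift quickly but passes more measurement noise into the correction, while a small value averages out noise but lags behind fast drift.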

The researchers liken a qubit to a guitar string. Just as a guitar string sounds right only when it is in tune, a qubit yields accurate quantum information only when its frequency, set by the energy difference between its two levels, is stable. Any detuning degrades its performance. The breakthrough here is the ability to "retune" the qubit's frequency in real time while the qubit is in use, significantly enhancing its coherence time and the fidelity of quantum operations.
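A back-of-the-envelope calculation shows why even a small detuning matters. If a qubit's frequency differs from the control frequency by $\Delta f$, the qubit accumulates a relative phase $\varphi = 2\pi\,\Delta f\,t$ over a time $t$. With illustrative values that are assumptions, not figures from the study, of $\Delta f = 10$ kHz and $t = 20\ \mu$s:

$$
\varphi = 2\pi\,\Delta f\,t = 2\pi \times 10^{4}\ \text{s}^{-1} \times 2\times 10^{-5}\ \text{s} = 0.4\pi \approx 1.26\ \text{rad}.
$$

In one common convention for a Ramsey sequence with ideal pulses, the probability of returning to $|0\rangle$ is $\cos^2(\varphi/2) = \cos^2(0.2\pi) \approx 0.65$ rather than 1, so an operation that should succeed every time fails about a third of the time.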

This continuous calibration and frequency adjustment offer several advantages. By extending how long qubits stay coherent, the method allows more complex quantum algorithms to run before decoherence sets in, pushing the boundaries of what quantum processors can achieve. It also improves operational precision and the overall robustness of quantum computations, reducing the error rates that have been a persistent stumbling block in quantum technology development.

The research was a collaborative effort between NTNU, Leiden University in the Netherlands, the Niels Bohr Institute at the University of Copenhagen, and the Massachusetts Institute of Technology, showcasing an impressive example of international scientific cooperation aimed at accelerating quantum innovation. Their findings mark a significant step toward building scalable quantum processors capable of solving practical problems.

The implications of this work extend far beyond academic interest. Stable, reliable qubits are the cornerstone for quantum computing applications that could transform industries ranging from pharmaceuticals to finance. As qubit calibration improves, quantum systems become more viable for real-world deployments, ultimately bringing us closer to the long-awaited quantum advantage where these machines outperform classical counterparts on meaningful tasks.

As the quantum computing community moves forward, innovations like binary-search Hamiltonian tracking, a qubit calibration method built on the principles of binary search, are likely to be essential for overcoming technical limitations. Maintaining qubit stability in fluctuating environments may well determine the pace at which quantum computing achieves commercial and scientific breakthroughs.
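The binary-search idea behind the method's name can be illustrated with an idealized Python sketch. This is not the published protocol: it assumes a hypothetical, noise-free yes/no measurement `is_above(f)` that answers whether the qubit frequency lies above a trial value `f`, whereas real qubit readouts are probabilistic, and the article does not describe how the method poses each question. The sketch shows only why halving the search interval with every answer is efficient; the function name and frequency values are hypothetical.

```python
from typing import Callable


def binary_search_frequency(
    is_above: Callable[[float], bool], f_low: float, f_high: float, steps: int
) -> float:
    """Idealized binary search for an unknown frequency in (f_low, f_high].

    `is_above(f)` is a hypothetical, noise-free measurement answering
    "is the qubit frequency above f?". Each answer halves the interval,
    so after `steps` answers its width is (f_high - f_low) / 2**steps.
    """
    for _ in range(steps):
        mid = 0.5 * (f_low + f_high)
        if is_above(mid):
            f_low = mid   # frequency lies in the upper half
        else:
            f_high = mid  # frequency lies in the lower half
    return 0.5 * (f_low + f_high)  # midpoint of the final interval


if __name__ == "__main__":
    true_freq_mhz = 5123.4567  # hypothetical qubit frequency, MHz

    def ideal_oracle(f_mhz: float) -> bool:
        # Stand-in for a real, probabilistic qubit measurement.
        return true_freq_mhz > f_mhz

    estimate = binary_search_frequency(ideal_oracle, 5000.0, 5250.0, steps=20)
    print(f"estimate after 20 answers: {estimate:.4f} MHz")
```

Twenty answers narrow a 250 MHz window to 250/2^20 MHz, about 240 Hz; scanning the same window point by point at that resolution would take roughly a million grid points.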

Jacob Benestad’s pioneering research not only addresses one of the most critical bottlenecks in quantum technology but also provides a practical framework for researchers to build smarter, more adaptive quantum systems. His contributions underscore the blend of advanced physics and engineering now driving the quantum revolution, promising a future where quantum computers fulfill their transformative potential.

Subject of Research: Not applicable

Article Title: Efficient Qubit Calibration by Binary-Search Hamiltonian Tracking

News Publication Date: 26-Aug-2025

Web References: https://link.aps.org/doi/10.1103/77qg-p68k

References: F. Berritta, J. Benestad, L. Pahl, M. Mathews, J.A. Krzywda, R. Assouly, Y. Sung, D.K. Kim, B.M. Niedzielski, K. Serniak, M.E. Schwartz, J.L. Yoder, A. Chatterjee, J.A. Grover, J. Danon, W.D. Oliver, and F. Kuemmeth. Efficient Qubit Calibration by Binary-Search Hamiltonian Tracking. PRX Quantum 6, 030335, Aug 2025. DOI: 10.1103/77qg-p

Image Credits: Photo by Fabrizio Berritta, University of Copenhagen

Keywords: quantum computing, qubits, quantum bits, quantum coherence, qubit calibration, superposition, quantum entanglement, FPGA control, Hamiltonian tracking, decoherence mitigation, quantum processor, real-time feedback, qubit stability