Artificial intelligence software has been developed to rapidly analyze animal behavior so that behaviors can be more precisely linked to the activity of individual brain circuits and neurons, researchers in Seattle report.

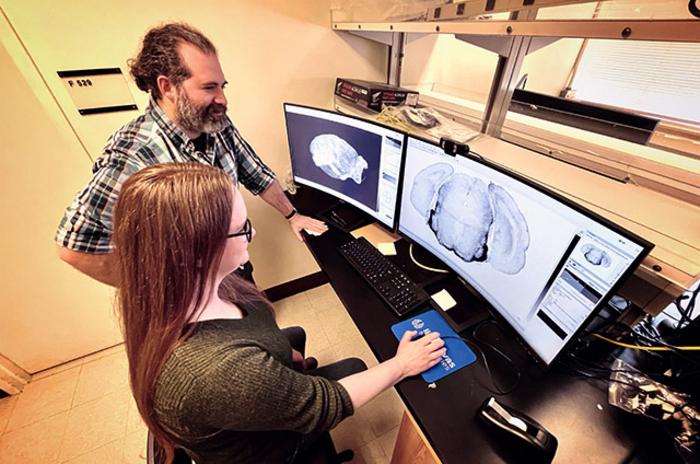

Credit: Michael McCarthy/UW Medicine

The program promises not only to speed research into the neurobiology of behavior but also to make it possible to compare results across laboratories and to reconcile findings that disagree because of differences in how individual labs observe, analyze and classify behaviors, said Sam Golden, assistant professor of biological structure at the University of Washington School of Medicine.

“The approach allows labs to develop behavioral procedures however they want and makes it possible to draw general comparisons between the results of studies that use different behavioral approaches,” he said.

A paper describing the program appears in the journal Nature Neuroscience. Golden and Simon Nilsson, a postdoctoral fellow in the Golden lab, are the paper’s senior authors. The first author is Nastacia Goodwin, a graduate student in the lab.

The study of the neural activity behind animal behavior has led to major advances in the understanding and treatment of such human disorders as addiction, anxiety and depression.

Much of this work is based on observations painstakingly recorded by individual researchers who watch animals in the lab and note their physical responses to different situations, then correlate that behavior with changes in brain activity.

For example, to study the neurobiology of aggression, researchers might place two mice in an enclosed space and record signs of aggression. These would typically include observations of the animals’ physical proximity to one another, their posture, and physical displays such as rapid twitching, or rattling, of the tail.

Annotating and classifying such behaviors is an exacting, protracted task. It can be difficult to accurately recognize and chronicle important details, Golden said. “Social behavior is very complicated, happens very fast and often is nuanced, so a lot of its components can be lost when an individual is observing it.”

To automate this process, researchers have developed AI-based systems to track components of an animal’s behavior and automatically classify the behavior, for example, as aggressive or submissive.
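To make the idea concrete, here is a minimal sketch of how such a system might turn tracked body points into per-frame features and a behavior score. It is not SimBA's code: the `Frame` layout, the two features (proximity and tail-tip speed as a crude stand-in for tail rattling) and the hand-picked weights are all hypothetical. A real system would learn the weights from frames that researchers have annotated.

```python
import math
from dataclasses import dataclass

Point = tuple[float, float]


@dataclass
class Frame:
    """Hypothetical tracked pose for one video frame (coordinates in cm)."""
    mouse1: Point    # body centroid of the first mouse
    mouse2: Point    # body centroid of the second mouse
    tail_tip: Point  # tail tip of the first mouse


def frame_features(prev: Frame, cur: Frame, fps: float) -> dict[str, float]:
    """Turn two consecutive frames into per-frame behavioral features."""
    return {
        # Physical proximity of the two animals
        "proximity_cm": math.dist(cur.mouse1, cur.mouse2),
        # Tail-tip displacement per second; a crude proxy for tail rattling
        "tail_speed_cm_s": math.dist(prev.tail_tip, cur.tail_tip) * fps,
    }


# Illustrative, hand-picked weights (assumption); a trained classifier
# would estimate these from human-annotated examples.
WEIGHTS = {"proximity_cm": -0.8, "tail_speed_cm_s": 0.1}
BIAS = 2.0


def p_aggressive(features: dict[str, float]) -> float:
    """Logistic score: probability that this frame shows aggression."""
    z = BIAS + sum(WEIGHTS[name] * value for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-z))


if __name__ == "__main__":
    fps = 30.0
    prev = Frame((0, 0), (1, 0), (0, -3))
    cur = Frame((0, 0), (1, 0), (1, -3))  # tail tip moved 1 cm in one frame
    feats = frame_features(prev, cur, fps)
    print(feats, round(p_aggressive(feats), 3))
```

Scoring every frame this way is what lets a program label long recordings far faster than a human observer can.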

Because these programs can also record details far faster than a human observer, an action is much more likely to be closely correlated with neural activity, which typically unfolds over milliseconds.

One such program, developed by Nilsson and Goodwin, is called SimBA, for Simple Behavioral Analysis. The open-source program features an easy-to-use graphical interface and requires no special computer skills to use. It has been widely adopted by behavioral scientists.

“Although we built SimBA for a rodent lab, we immediately started getting emails from all kinds of labs: wasp labs, moth labs, zebrafish labs,” Goodwin said.

But as more labs used these programs, the researchers found that similar experiments were yielding vastly different results.

“It became apparent that how any one lab or any one person defines behavior is pretty subjective, even when attempting to replicate well-known procedures,” Golden said.

Moreover, accounting for these differences was difficult because it is often unclear how AI systems arrive at their results; their calculations take place in what is often described as “a black box.”

Hoping to explain these differences, Goodwin and Nilsson incorporated into SimBA a machine-learning explainability approach that produces what are called Shapley Additive exPlanations (SHAP) values.

Essentially, this explainability approach determines how removing one feature used to classify a behavior, say tail rattling, changes the computer's predicted probability that the behavior is occurring.
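The single-feature removal described above can be sketched in a few lines. This is an illustration, not SimBA's implementation: the three features, the toy logistic model and the choice to "remove" a feature by replacing it with a baseline value (for example, its average across the dataset) are all assumptions.

```python
import math

# Hypothetical toy classifier; feature names and weights are illustrative only.
WEIGHTS = {"proximity_cm": -0.8, "tail_rattle_hz": 0.4, "arched_posture": 1.5}
BIAS = 1.0


def predict(x: dict[str, float]) -> float:
    """Predicted probability that a video frame shows aggression."""
    z = BIAS + sum(WEIGHTS[name] * x[name] for name in WEIGHTS)
    return 1.0 / (1.0 + math.exp(-z))


def removal_effect(x: dict[str, float], baseline: dict[str, float], feature: str) -> float:
    """Drop in predicted probability when `feature` is 'removed',
    here meaning replaced by its baseline value."""
    ablated = {**x, feature: baseline[feature]}
    return predict(x) - predict(ablated)


if __name__ == "__main__":
    frame = {"proximity_cm": 1.0, "tail_rattle_hz": 8.0, "arched_posture": 1.0}
    baseline = {"proximity_cm": 8.0, "tail_rattle_hz": 0.0, "arched_posture": 0.0}
    for name in WEIGHTS:
        print(f"{name}: {removal_effect(frame, baseline, name):+.3f}")
```

Because the model is nonlinear, a feature's effect depends on which other features are still present, so one removal at a time does not tell the whole story.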

By removing features in thousands of different combinations, SHAP can determine how much predictive strength each individual feature contributes to the algorithm that classifies the behavior. Taken together, these SHAP values then quantitatively define the behavior, removing subjectivity from behavioral descriptions.
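Averaging a feature's effect over every combination of the other features is exactly what a Shapley value does. The sketch below computes exact Shapley values for a hypothetical three-feature classifier, again assuming that a "removed" feature is set to a baseline value. The model, feature names and baseline are illustrative and not taken from SimBA.

```python
import math
from itertools import combinations

# Hypothetical toy classifier (assumption, for illustration only).
WEIGHTS = {"proximity_cm": -0.8, "tail_rattle_hz": 0.4, "arched_posture": 1.5}
BIAS = 1.0


def predict(x: dict[str, float]) -> float:
    """Predicted probability of aggression; ignores keys not in WEIGHTS."""
    z = BIAS + sum(WEIGHTS[name] * x[name] for name in WEIGHTS)
    return 1.0 / (1.0 + math.exp(-z))


def value(x: dict[str, float], baseline: dict[str, float], kept: set[str]) -> float:
    """Model output when only features in `kept` keep their observed values
    and every other feature is 'removed' (set to its baseline)."""
    mixed = {k: (x[k] if k in kept else baseline[k]) for k in x}
    return predict(mixed)


def shapley_values(x: dict[str, float], baseline: dict[str, float]) -> dict[str, float]:
    """Exact Shapley values: each feature's marginal contribution,
    weighted and averaged over all subsets of the other features."""
    names = list(x)
    n = len(names)
    phi = {}
    for f in names:
        others = [g for g in names if g != f]
        total = 0.0
        for size in range(n):
            # Standard Shapley weight |S|! (n - |S| - 1)! / n!
            weight = math.factorial(size) * math.factorial(n - size - 1) / math.factorial(n)
            for subset in combinations(others, size):
                kept = set(subset)
                total += weight * (value(x, baseline, kept | {f}) - value(x, baseline, kept))
        phi[f] = total
    return phi


if __name__ == "__main__":
    frame = {"proximity_cm": 1.0, "tail_rattle_hz": 8.0, "arched_posture": 1.0}
    baseline = {"proximity_cm": 8.0, "tail_rattle_hz": 0.0, "arched_posture": 0.0}
    phi = shapley_values(frame, baseline)
    print(phi, sum(phi.values()), predict(frame) - predict(baseline))
```

The values add up to the difference between the prediction for this frame and the baseline prediction, which is what makes them "additive." With n features there are 2^(n-1) subsets per feature, so exact enumeration quickly becomes impractical; libraries such as shap instead sample subsets or use model-specific shortcuts.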

“Now we can compare (different labs’) respective behavioral protocols using SimBA and see whether we’re looking, objectively, at the same or different behavior,” Golden said.

“This approach allows labs to design experiments however they like, but because you can now directly compare behavioral results from labs that are using different behavioral definitions, you can draw clearer conclusions between their results. Previously, inconsistent neural data could have been attributed to many confounds, and now we can cleanly rule out behavioral differences as we strive for cross-lab reproducibility and interpretability,” Golden said.
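One simple way such a comparison could work, offered as a hypothetical sketch rather than SimBA's documented procedure, is to summarize each lab's classifier by the mean absolute SHAP value of every feature and then measure how similar the two summaries are. The profile normalization and the use of cosine similarity are assumptions chosen for illustration.

```python
import math


def shap_profile(shap_rows: list[dict[str, float]]) -> dict[str, float]:
    """Mean absolute SHAP value per feature across frames, normalized to sum to 1."""
    names = sorted({k for row in shap_rows for k in row})
    mean_abs = {
        k: sum(abs(row.get(k, 0.0)) for row in shap_rows) / len(shap_rows)
        for k in names
    }
    total = sum(mean_abs.values()) or 1.0
    return {k: v / total for k, v in mean_abs.items()}


def cosine_similarity(p: dict[str, float], q: dict[str, float]) -> float:
    """1.0 means the two behavior definitions weight features identically;
    0.0 means they rely on entirely different features."""
    names = set(p) | set(q)
    dot = sum(p.get(k, 0.0) * q.get(k, 0.0) for k in names)
    norm_p = math.sqrt(sum(v * v for v in p.values()))
    norm_q = math.sqrt(sum(v * v for v in q.values()))
    if norm_p == 0.0 or norm_q == 0.0:
        return 0.0  # no feature carries any weight in one of the profiles
    return dot / (norm_p * norm_q)


if __name__ == "__main__":
    # Hypothetical per-frame SHAP values from two labs' "aggression" classifiers
    lab_a = [{"proximity": 0.40, "tail_rattle": 0.10}, {"proximity": -0.30, "tail_rattle": 0.20}]
    lab_b = [{"proximity": 0.05, "tail_rattle": 0.50}, {"proximity": 0.02, "tail_rattle": 0.40}]
    print(cosine_similarity(shap_profile(lab_a), shap_profile(lab_b)))
```

A low similarity would suggest the two labs' "aggression" labels capture different behavior, which is the kind of difference the researchers want to rule out before comparing neural data.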

This research was supported by grants from the National Institutes of Health (K08MH123791), the National Institute on Drug Abuse (R00DA045662, R01DA059374, P30DA048736), the National Institute of Mental Health (1F31MH125587, F31AA025827, F32MH125634), the National Institute of General Medical Sciences (R35GM146751), Brain & Behavior Research Foundation, Burroughs Wellcome Fund, Simons Foundation, and Washington Research Foundation.

Journal

Nature Neuroscience

Method of Research

Experimental study

Subject of Research

Animals

Article Title

Simple Behavioral Analysis (SimBA) as a platform for explainable machine learning in behavioral neuroscience

COI Statement

The authors declare no competing interests.