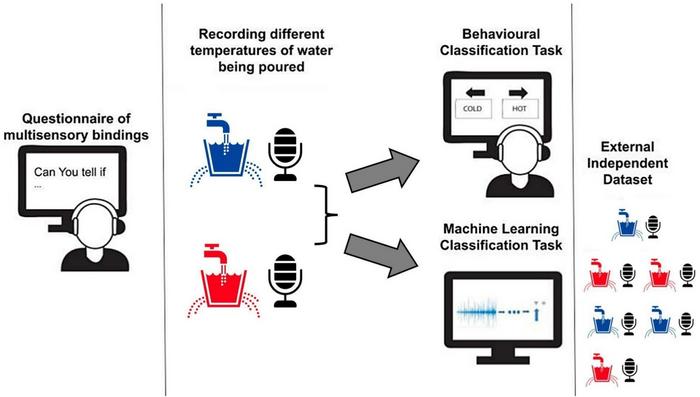

Credit: IVCHER INSTITUTE FOR BRAIN COGNITION AND TECHNOLOGY, REICHMAN UNIVERSITY

Scientists from the Ivcher Institute for Brain, Cognition, and Technology (BCT Institute) at Reichman University (IDC Herzliya) have explored a largely unrecognized perceptual ability, using machine learning to clarify the dynamics of cross-modal perception: the experience of interactions between different sensory modalities. In a recently published study, the team investigated whether humans can detect the thermal properties of water (its temperature) from its sound, and whether this happens consciously. Drawing on principles of multisensory integration (the way the brain combines information from various sensory modalities to form a unified perception of the environment), the team explored the potential for multisensory thermal perception. They also used a pre-trained deep neural network (DNN) and a classification algorithm (a support vector machine) to test whether machine learning could successfully and consistently classify audio recordings of water being poured at different temperatures, and to map the thermal properties physically encoded in the sound.
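The classification step can be illustrated with a small sketch. The release does not say which pre-trained network produced the features or which SVM variant, kernel, or training procedure the team used, so everything below is an assumption: the DNN embeddings are replaced by hypothetical two-dimensional feature vectors, and a linear SVM is trained from scratch with the Pegasos sub-gradient method on hinge loss, using labels +1 for "hot" and -1 for "cold". The helper names (`train_linear_svm`, `predict`, `make_toy_data`) are illustrative only.

```python
import random
from typing import List, Sequence

Vector = List[float]


def _augment(x: Sequence[float]) -> Vector:
    # Append a constant 1.0 so the bias is learned as an extra weight
    # (this also regularizes the bias, a common simplification).
    return list(x) + [1.0]


def train_linear_svm(
    features: Sequence[Sequence[float]],
    labels: Sequence[int],
    lam: float = 0.01,
    epochs: int = 200,
    seed: int = 0,
) -> Vector:
    """Train a linear soft-margin SVM with the Pegasos sub-gradient method.

    Labels use +1 for "hot" and -1 for "cold" (a hypothetical encoding).
    Returns the weight vector; its last entry is the bias term.
    """
    rng = random.Random(seed)
    data = [(_augment(x), y) for x, y in zip(features, labels)]
    w = [0.0] * len(data[0][0])
    t = 0
    for _ in range(epochs):
        rng.shuffle(data)
        for x, y in data:
            t += 1
            eta = 1.0 / (lam * t)  # Pegasos step size
            margin = y * sum(wj * xj for wj, xj in zip(w, x))
            # Regularization step: shrink every weight toward zero.
            w = [(1.0 - eta * lam) * wj for wj in w]
            if margin < 1.0:
                # Hinge loss is active: move w toward classifying x correctly.
                w = [wj + eta * y * xj for wj, xj in zip(w, x)]
    return w


def predict(w: Vector, x: Sequence[float]) -> int:
    """Return +1 ("hot") or -1 ("cold") from the sign of the decision score."""
    score = sum(wj * xj for wj, xj in zip(w, _augment(x)))
    return 1 if score >= 0.0 else -1


def make_toy_data(n_per_class: int = 20, seed: int = 1):
    """Synthetic, clearly separable stand-ins for DNN embeddings (not study data)."""
    rng = random.Random(seed)
    features, labels = [], []
    for centre, label in (((2.0, 2.0), 1), ((-2.0, -2.0), -1)):
        for _ in range(n_per_class):
            features.append([c + rng.uniform(-0.5, 0.5) for c in centre])
            labels.append(label)
    return features, labels


if __name__ == "__main__":
    X, y = make_toy_data()
    w = train_linear_svm(X, y)
    accuracy = sum(predict(w, x) == label for x, label in zip(X, y)) / len(y)
    print(f"training accuracy: {accuracy:.2f}")
```

A linear kernel keeps the sketch short and dependency-free; with real recordings, accuracy would normally be reported on held-out recordings rather than on the training set.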

“Temperature perception is pretty unique in comparison to other sensory experiences,” says Dr. Adi Snir, postdoctoral fellow at the BCT Institute and co-author of the study. “For vision and hearing we have dedicated sensory ‘organs’ like the eyes and ears; with temperature, we rely on specialized receptors in the skin that respond to various temperature ranges, which we experience as heat and coolness. But in the animal kingdom we know, for example, that snakes can actually ‘see’ body heat, which allows them to identify prey.” The question of whether multisensory perception of temperature extends to humans has been posed before. “Previous studies have explored this on a behavioral level,” states Prof. Amir Amedi, founding Director of the BCT Institute. “These studies have shown that humans can hear a difference between hot liquids and cold liquids being poured, but not how or why this is possible,” he explains.

The researchers first set out to replicate previous findings and confirm this surprising perceptual ability, and to determine whether the ability is innate or acquired, a question that has long been debated. “We also wanted to investigate whether or not people are consciously aware of these differences in the sound properties of thermal differences,” says Snir, “and also explore what characteristics of the sounds themselves allow for differentiation in perception.” To accomplish this, the team used a pre-trained deep neural network (DNN) to characterize recordings of water being poured at various temperatures, a machine learning algorithm to classify the thermal properties of the water, and computational analysis of the auditory features of each recording. “What we saw is that participants were consistently able to discern water temperature through its sound, even when they didn’t believe that they could, which tells us this is likely an implicit skill acquired through exposure to auditory cues throughout life,” Amedi explains. “Simultaneously, the machine learning model, which was trained on recordings of hot and cold water, showed a high accuracy in classifying the sounds.”
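The release does not list which auditory features were analysed. As an illustration only, the sketch below computes one widely used acoustic descriptor, the spectral centroid (the magnitude-weighted mean frequency of a signal's spectrum), with a naive discrete Fourier transform. The choice of feature and the names `spectral_centroid` and `sine_tone` are assumptions, not the study's method, and the test signals are synthetic tones rather than recordings.

```python
import math
from typing import List, Sequence


def spectral_centroid(samples: Sequence[float], sample_rate: float) -> float:
    """Magnitude-weighted mean frequency (Hz), computed with a naive O(n^2) DFT."""
    n = len(samples)
    if n == 0:
        return 0.0
    weighted_sum = 0.0
    total_magnitude = 0.0
    for k in range(n // 2 + 1):  # non-negative frequency bins only
        re = sum(s * math.cos(2 * math.pi * k * i / n) for i, s in enumerate(samples))
        im = -sum(s * math.sin(2 * math.pi * k * i / n) for i, s in enumerate(samples))
        magnitude = math.hypot(re, im)
        weighted_sum += (k * sample_rate / n) * magnitude  # bin k sits at k*fs/n Hz
        total_magnitude += magnitude
    # A silent (all-zero) signal has no defined centroid; return 0.0 by convention.
    return weighted_sum / total_magnitude if total_magnitude > 0 else 0.0


def sine_tone(freq_hz: float, sample_rate: float, n: int) -> List[float]:
    """Synthetic test tone (hypothetical helper, not a recording from the study)."""
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]


if __name__ == "__main__":
    for f in (1000.0, 2000.0):
        tone = sine_tone(f, sample_rate=8000.0, n=256)
        print(f, round(spectral_centroid(tone, 8000.0), 1))
```

Comparing a feature like this between hot-pour and cold-pour recordings is one way to probe which acoustic cues differ; whether the spectral centroid is among the cues the team identified is not stated in the release.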

The results of the study demonstrate that humans can learn complex sensory mappings from everyday experiences, and that machine learning can help clarify subtle perceptual phenomena. “The next step is to look into whether or not people will develop novel sensory maps in the brain for this experience, the way they do for vision, touch, and hearing,” Amedi states. “In theory, recent claims by Elon Musk regarding Neuralink creating superhuman abilities could become reality if you couple this same method with brain stimulation,” he adds.

Journal

Frontiers in Psychology

Method of Research

Experimental study

Subject of Research

People

Article Title

“Hearing temperatures: employing machine learning for elucidating the cross-modal perception of thermal properties through audition”

Article Publication Date

2-Aug-2024