Researchers working at the intersection of artificial intelligence and psychological science have reported evidence that large language models (LLMs), AI systems that process and generate human-like text, encode the complex structure of psychopathology. The finding, detailed in a recent study published in Nature Mental Health, marks an advance in understanding how AI can model human mental health conditions, with potential applications in diagnostics, treatment personalization, and the broader field of computational psychiatry.

The study, conducted by Kambeitz and colleagues, investigated whether large language models, trained on vast corpora of diverse text, implicitly encode the empirical dimensions of psychopathology that psychologists have mapped over decades. Psychopathology, which spans mental health disorders from depression and anxiety to schizophrenia and beyond, is traditionally understood through multidimensional frameworks that reflect symptom clusters, severity, and comorbidity patterns. The study set out to test rigorously whether such high-dimensional, nuanced clinical information can emerge from AI models optimized purely on linguistic data.

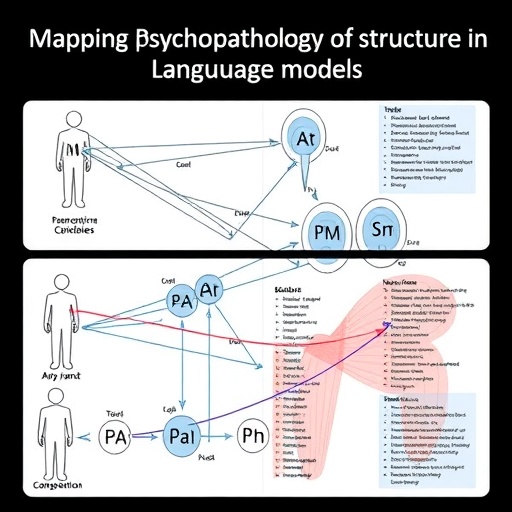

Using state-of-the-art LLMs, such as GPT-based architectures, the researchers gave these models prompts designed to probe their internal representations of psychopathological concepts. Through computational analyses, including embedding vector comparisons and semantic clustering techniques, the study found that LLMs not only differentiate between diverse mental disorders but also represent their interrelationships in ways that closely mirror empirical clinical structures. This alignment suggests that the models’ language training embeds latent knowledge consistent with established psychiatric frameworks.
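To make the idea of an embedding vector comparison concrete, here is a minimal sketch in Python. It is not the authors' pipeline: the four symptom phrases, their three-dimensional vectors, and the helper name `similarity_matrix` are all hypothetical, chosen only to show how cosine similarity can reveal that some descriptions sit closer together than others.

```python
import numpy as np

# Hypothetical toy "embeddings" for four symptom descriptions.
# Real LLM embeddings have hundreds or thousands of dimensions;
# these values are invented purely for illustration.
embeddings = {
    "low mood": np.array([0.9, 0.1, 0.0]),
    "excessive worry": np.array([0.8, 0.3, 0.1]),
    "rule breaking": np.array([0.1, 0.9, 0.2]),
    "impulsive aggression": np.array([0.0, 0.8, 0.3]),
}


def similarity_matrix(vectors):
    """Return the pairwise cosine similarity matrix for a list of vectors."""
    stacked = np.stack(vectors)
    # Normalize each row to unit length so the dot product equals the cosine.
    unit = stacked / np.linalg.norm(stacked, axis=1, keepdims=True)
    return unit @ unit.T


labels = list(embeddings)
sim = similarity_matrix([embeddings[name] for name in labels])

# Assumed grouping for this toy example: the first two phrases are
# "internalizing" and the last two are "externalizing".
within = (sim[0, 1] + sim[2, 3]) / 2  # mean similarity inside each group
between = sim[:2, 2:].mean()          # mean similarity across the groups

print(f"within-group similarity:  {within:.3f}")
print(f"between-group similarity: {between:.3f}")
```

In this toy setup the within-group similarity is higher than the between-group similarity, which is the kind of pattern a clustering step would then pick up. With real model embeddings the separation would have to be tested, not assumed.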

Crucially, the models demonstrated implicit encoding of well-established psychopathology dimensions such as internalizing and externalizing disorders, as well as nuanced symptom overlap that mirrors diagnostic comorbidity observed in clinical populations. This finding reflects the models’ capacity to internalize subtle patterns present in human discourse that detail how different psychopathological symptoms interact and coalesce into recognizable syndromes. Essentially, without explicit training on medical diagnoses, these language models have absorbed an echo of real-world mental health complexities.

The implications could be far-reaching. By drawing on the latent psychopathological structure within LLMs, researchers anticipate AI-driven tools that assist clinicians in the early detection of mental disorders through natural language interactions, symptom tracking, and sentiment analysis. Such tools could analyze patient narratives, electronic health records, or social media data, potentially with improved sensitivity and specificity, using the models’ encoded clinical structure to flag emerging risks or refine diagnostic precision.

Moreover, the study highlights the potential for large language models to serve as a bridge between computational psychiatry and psychosocial research. Traditionally, psychopathology research relies heavily on structured clinical interviews and self-report scales, which are often resource-intensive and limited in scope. The ability of LLMs to represent mental health conditions from unstructured, real-world language data points toward scalable, flexible research paradigms that combine large datasets with AI.

From a technical perspective, the research employed embedding alignment methods to quantify the degree of correspondence between the LLM-derived representations and clinically validated psychopathology constructs. These embeddings, numeric vectors that encode semantic meaning, were analyzed using multidimensional scaling and network analysis, revealing clusters of symptom associations that paralleled psychiatric frameworks such as the DSM and the Research Domain Criteria (RDoC).
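As a rough illustration of what quantifying correspondence and multidimensional scaling can look like in practice, the sketch below compares a model-derived similarity matrix with an empirical correlation matrix and then projects the items into two dimensions. Everything here is an assumption for illustration: the two 4×4 matrices are made-up numbers, the function names `alignment` and `classical_mds` are hypothetical, and the study itself may have used different alignment measures and scaling variants.

```python
import numpy as np


def upper_triangle(matrix):
    """Return the off-diagonal upper-triangle entries as a flat array."""
    rows, cols = np.triu_indices_from(matrix, k=1)
    return matrix[rows, cols]


def alignment(model_sim, empirical_corr):
    """Pearson correlation between the off-diagonal entries of two matrices."""
    a = upper_triangle(model_sim)
    b = upper_triangle(empirical_corr)
    return float(np.corrcoef(a, b)[0, 1])


def classical_mds(distances, n_components=2):
    """Classical (Torgerson) multidimensional scaling of a distance matrix."""
    n = distances.shape[0]
    centering = np.eye(n) - np.ones((n, n)) / n
    # Double-center the squared distances to obtain a Gram matrix.
    gram = -0.5 * centering @ (distances ** 2) @ centering
    eigvals, eigvecs = np.linalg.eigh(gram)
    # Keep the largest eigenvalues; clip negatives from non-Euclidean input.
    order = np.argsort(eigvals)[::-1][:n_components]
    scale = np.sqrt(np.clip(eigvals[order], 0.0, None))
    return eigvecs[:, order] * scale


# Made-up similarity between four symptom items as seen by a model ...
model_sim = np.array([
    [1.0, 0.9, 0.2, 0.1],
    [0.9, 1.0, 0.3, 0.2],
    [0.2, 0.3, 1.0, 0.8],
    [0.1, 0.2, 0.8, 1.0],
])
# ... and made-up correlations between the same items in questionnaire data.
empirical_corr = np.array([
    [1.0, 0.7, 0.1, 0.0],
    [0.7, 1.0, 0.2, 0.1],
    [0.1, 0.2, 1.0, 0.6],
    [0.0, 0.1, 0.6, 1.0],
])

score = alignment(model_sim, empirical_corr)
coords = classical_mds(1.0 - model_sim)  # treat 1 - similarity as a distance

print(f"alignment between model and empirical structure: {score:.3f}")
print(coords)
```

A high alignment score in this sketch only reflects how the toy matrices were constructed. On real data, the score would be compared against a baseline, such as shuffled item labels, before drawing conclusions.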

Importantly, the study also engaged in rigorous validation protocols, cross-referencing LLM outputs with clinician-rated assessments and established psychometric instruments. This triangulation provided a robust framework affirming that the AI’s internal models are not superficial or coincidental but deeply rooted in clinically relevant structures recognized by mental health professionals worldwide.

Despite this breakthrough, the authors caution against premature clinical application. They emphasize that while LLMs capture the semantic structure of psychopathology, they do not possess genuine understanding or experiential insight into mental suffering. Ethical considerations, model biases, and the risk of overreliance on algorithmic outputs underscore the need for multidisciplinary frameworks integrating AI as a complementary tool rather than a standalone diagnostic entity.

The research also prompts fresh inquiries into the nature of language and mental health cognition. Psychopathological syndromes manifest through language: symptoms are described, social interactions unfold, and affective states are communicated in words. The alignment between linguistic models and clinical phenomena therefore supports theories positing that language is a foundational medium for mental health phenomenology.

Future research avenues highlighted include enhancing model transparency and interpretability to better decode the AI’s psychopathology representations. Incorporating multimodal datasets, such as neuroimaging and behavioral measures alongside language data, could further enrich AI psychiatric models, moving toward holistic, integrative frameworks for understanding mental disorders.

Additionally, the findings open potential for AI-facilitated remote mental health monitoring and intervention, especially in underserved or stigmatized populations where access to traditional psychiatric care is limited. Language models could power chatbots or digital assistants that dynamically assess risk, provide psychoeducation, and triage cases for professional follow-up based on nuanced conversational cues.

This convergence of artificial intelligence and psychological science exemplifies the transformative power of interdisciplinary collaboration. By demonstrating that large language models inherently reflect the empirical structure of psychopathology, Kambeitz and colleagues propel the field toward novel paradigms that harness AI’s pattern recognition strengths to unravel the complex architecture of human mental ailments.

As these AI systems continue to evolve, their roles in mental health research, clinical practice, and public health are poised to deepen significantly. This study acts as a seminal reference point, underscoring the necessity for ongoing dialogue between psychiatrists, psychologists, AI developers, and ethicists to shape the future of mental health innovation responsibly, effectively, and humanely.

In conclusion, the research indicates that the knowledge embedded within large language models goes beyond surface-level linguistic mimicry, capturing subtle, multidimensional facets of mental health disorders that align closely with empirical psychopathology. This insight enriches our understanding of AI capabilities and points toward AI-augmented psychiatry as a possible direction for mental health care in the years to come.

Subject of Research:

The study focuses on the intersection of artificial intelligence and psychopathology, specifically investigating how large language models represent the empirical structure of mental health disorders.

Article Title:

The empirical structure of psychopathology is represented in large language models.

Article References:

Kambeitz, J., Schiffman, J., Kambeitz-Ilankovic, L. et al. The empirical structure of psychopathology is represented in large language models. Nat. Mental Health (2025). https://doi.org/10.1038/s44220-025-00527-y

Image Credits: AI Generated

DOI: https://doi.org/10.1038/s44220-025-00527-y