Large language models (LLMs) have recently taken center stage in many decision-making scenarios, reshaping how individuals engage with information and make choices. These models can process vast amounts of data and generate contextually relevant text, and their capabilities can sometimes seem “superhuman.” Alongside this prowess, however, lie pitfalls and limitations that demand careful scrutiny. Understanding these challenges is crucial as LLMs become embedded in daily decision-making.

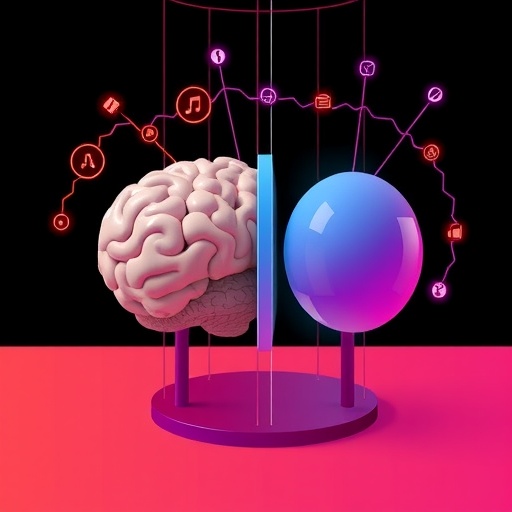

A useful lens for analyzing LLM outputs is dual-process theory, a psychological framework that describes two distinct systems of thought: System 1 and System 2. System 1 is fast, instinctive, and emotional, characterized by heuristics and cognitive biases that can quickly influence decisions. In contrast, System 2 is more deliberate and logical, employing analytical reasoning to navigate complex scenarios. Although LLMs are machine learning models rather than human cognitive entities, they exhibit behaviors reminiscent of both systems. By dissecting these behaviors, researchers are uncovering how LLMs function within decision-making paradigms.

When examining LLM responses, one can notice a marked tendency to reflect System-1-like behaviors. These models often mimic cognitive biases, leaning on probabilistic associations gleaned from their training data. For instance, an LLM might demonstrate confirmation bias by disproportionately emphasizing information that aligns with previously established patterns. This phenomenon raises questions about the reliability of LLMs as decision-support tools, especially when their outputs are inadvertently shaped by the biases present in the data they were trained on.

Moreover, LLMs have shown a propensity to employ heuristics in ways that resonate with System 1 thinking. This may lead to efficiency in producing responses quickly, but the trade-off is a susceptibility to inaccuracies and misjudgments. Users relying on LLM-generated information must remain vigilant, recognizing that these models, while adept at generating coherent narratives, are not immune to the same errors that characterize human thought processes. Such inherent limitations highlight the need for cautious deployment and continuous evaluation when integrating LLMs into critical decision-making contexts.

Conversely, LLMs can also mimic System-2-like reasoning, albeit in a limited manner. With specific prompting techniques, users can elicit slower, more methodical responses. This controlled interaction can produce more nuanced analyses, opening the door to applications where careful consideration and thorough reasoning are paramount. However, this reasoning is not equivalent to human cognition. The LLM’s analytical capabilities stem from learned patterns rather than genuine understanding, which can result in occasional lapses in logical coherence or factual accuracy.
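As a rough illustration of what such prompting can look like, the sketch below builds two versions of the same question: a direct one, and one that asks for step-by-step reasoning before the answer. The source does not name a specific technique, so the step-by-step wording, the function names, and the example question are all assumptions made for illustration.

```python
from typing import Optional


def direct_prompt(question: str) -> str:
    """Ask for the answer only (a hypothetical 'fast' framing)."""
    return f"{question}\nAnswer with the final answer only."


def deliberate_prompt(question: str) -> str:
    """Ask for intermediate reasoning first (a hypothetical 'slow' framing)."""
    return (
        f"{question}\n"
        "Work through the problem step by step, stating each assumption.\n"
        "Then give the final answer on its own line, prefixed with 'Answer:'."
    )


def extract_answer(response: str) -> Optional[str]:
    """Return the text after the last 'Answer:' line, or None if there is none."""
    for line in reversed(response.splitlines()):
        stripped = line.strip()
        if stripped.startswith("Answer:"):
            return stripped[len("Answer:"):].strip()
    return None


# Illustrative question only: the well-known bat-and-ball reasoning item.
question = (
    "A bat and a ball cost $1.10 in total. The bat costs $1.00 more "
    "than the ball. How much does the ball cost?"
)
fast = direct_prompt(question)
slow = deliberate_prompt(question)
```

Either prompt string would then be sent to whichever model is in use. Comparing the two responses on questions like this one is a simple way to see whether the more deliberate framing changes the answer.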

Crucially, the “cognitive” biases seen in LLMs often do not stem from innate understanding but rather from systemic patterns identified during training. This underscores a significant distinction between human cognition and machine learning. Whereas human biases may originate from experiential and psychological roots, LLM biases are inherited from training data and can perpetuate and amplify existing societal prejudices, potentially reinforcing harmful stereotypes or inaccuracies.

Another limitation of LLMs involves the phenomenon of “hallucinations.” This term refers to situations where LLMs generate information that stylistically resembles factual content but is entirely fabricated or misleading. These hallucinations can pose substantial risks, particularly in high-stakes environments such as healthcare, legal settings, or financial decision-making. The persistence of hallucinations exemplifies why careful oversight and validation measures are essential when utilizing LLMs to enhance decision-making frameworks.

Despite these challenges, the integration of LLMs into human decision-making processes holds significant promise. By leveraging the strengths of these models while mitigating their weaknesses, users can unlock potential enhancements in productivity, efficiency, and informed choice. Responsible and ethical deployment of LLMs can pave the way for valuable decision-support systems that augment human capabilities rather than replace them.

To harness the benefits of LLMs, researchers and practitioners alike must adopt a proactive approach in addressing potential biases and inaccuracies. This includes establishing clear guidelines for data curation, scrutinizing the training datasets for inherent biases, and implementing robust validation procedures for LLM outputs. Emphasizing collaboration between human intuition and machine-generated insights can foster a more holistic decision-making environment, ideally leading to more equitable and effective outcomes.
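One simple form such a validation procedure could take is an agreement check: ask the model the same question several times and route the result to a human reviewer when the answers disagree. This is a sketch under assumptions, not a method from the source. The function name, the 0.8 threshold, and the sample answers are hypothetical, and agreement among samples does not by itself establish that an answer is correct.

```python
from collections import Counter
from typing import List, NamedTuple


class AgreementResult(NamedTuple):
    majority_answer: str
    agreement: float    # share of samples that match the majority answer
    needs_review: bool  # True when agreement falls below the threshold


def check_agreement(answers: List[str], threshold: float = 0.8) -> AgreementResult:
    """Summarize repeated answers to one question and flag low agreement."""
    if not answers:
        raise ValueError("at least one answer is required")
    # Normalize lightly so differences in case or spacing do not count.
    normalized = [a.strip().lower() for a in answers]
    majority, count = Counter(normalized).most_common(1)[0]
    agreement = count / len(normalized)
    return AgreementResult(majority, agreement, agreement < threshold)


# Hypothetical answers sampled from a model for a single question.
samples = ["Paris", "paris ", "Lyon", "Paris", "Paris"]
result = check_agreement(samples)
if result.needs_review:
    print("Low agreement: send to a human reviewer.")
else:
    print(f"Majority answer: {result.majority_answer}")
```

A check like this keeps a person in the loop for uncertain cases while letting consistent answers through. In high-stakes settings it would likely be combined with verification against trusted sources.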

The recommendations for responsible LLM use extend beyond technical measures; they also involve fostering awareness and critical thinking among users. Encouraging users to question the outputs of LLMs, understand their limitations, and consider multiple perspectives is crucial in cultivating an informed society. This approach not only improves decision-making but also supports the responsible integration of new technologies.

In conclusion, the intersection of LLMs and decision-making reflects a complex interplay between advanced technology and human cognition. Dual-process theory provides a valuable framework for analyzing the behavior of LLMs, revealing their tendencies toward both heuristic-driven and analytical-style reasoning. While LLMs demonstrate formidable capabilities in many scenarios, stakeholders must remain cognizant of their limitations and biases, ensuring that these systems augment rather than undermine human decision-making. A strategic, responsible approach to LLM deployment will therefore be pivotal in realizing their potential as effective decision-support systems.

The ongoing exploration of how LLMs influence decisions also opens avenues for future research, particularly into how these models might evolve and become further integrated into human processes. The integration of artificial intelligence into decision-making is just beginning, and continued dialogue, scrutiny, and innovation will help ensure that these tools contribute positively to society.

Subject of Research: Decision-Making in Large Language Models

Article Title: Dual-Process Theory and Decision-Making in Large Language Models

Article References:

Brady, O., Nulty, P., Zhang, L. et al. Dual-process theory and decision-making in large language models. Nat Rev Psychol (2025). https://doi.org/10.1038/s44159-025-00506-1

Image Credits: AI Generated

DOI: 10.1038/s44159-025-00506-1

Keywords: Large Language Models, Decision-Making, Dual-Process Theory, Cognitive Biases, Hallucinations, Responsible AI